System Monitors

Data Infrastructure Insights includes a number of system-defined monitors for both metrics and logs. The available system monitors depend on the data collectors present on your tenant, so the monitors available in Data Infrastructure Insights may change as data collectors are added or their configurations are changed.

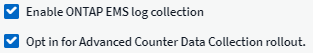

Many system monitors are in the Paused state by default. You can enable a system monitor by selecting the Resume option for that monitor. Also ensure that Advanced Counter Data Collection and Enable ONTAP EMS log collection are enabled in the ONTAP Data Collector. Both options are under Advanced Configuration.

Monitor Descriptions

System-defined monitors consist of predefined metrics and conditions, as well as default descriptions and corrective actions, which cannot be modified. You can, however, modify the notification recipient list for system-defined monitors. To view the metrics, conditions, description, and corrective actions, or to modify the recipient list, open a system-defined monitor group and click the monitor name in the list.

System-defined monitor groups cannot be modified or removed.

The following system-defined monitors are available, in the groups noted below.

- ONTAP Infrastructure includes monitors for infrastructure-related issues in ONTAP clusters.
- ONTAP Workload Examples includes monitors for workload-related issues.

Monitors in both groups default to the Paused state.

Below are the system monitors currently included with Data Infrastructure Insights:

Metric Monitors

| Monitor Name | Severity | Monitor Description | Corrective Action |
| --- | --- | --- | --- |
| Fiber Channel Port Utilization High | CRITICAL | Fiber Channel Protocol ports are used to receive and transfer SAN traffic between the customer host system and the ONTAP LUNs. If port utilization is high, the port becomes a bottleneck, which ultimately affects the performance of sensitive Fiber Channel Protocol workloads. A warning alert indicates that planned action should be taken to balance network traffic. A critical alert indicates that service disruption is imminent and emergency measures should be taken to balance network traffic to ensure service continuity. | If the critical threshold is breached, consider immediate actions to minimize service disruption: |
| Lun Latency High | CRITICAL | LUNs are objects that serve I/O traffic, often driven by performance-sensitive applications such as databases. High LUN latency means that the applications themselves might suffer and be unable to accomplish their tasks. A warning alert indicates that planned action should be taken to move the LUN to an appropriate node or aggregate. A critical alert indicates that service disruption is imminent and emergency measures should be taken to ensure service continuity. Expected latencies based on media type are: SSD up to 1-2 milliseconds; SAS up to 8-10 milliseconds; and SATA HDD 17-20 milliseconds. | If the critical threshold is breached, consider the following actions to minimize service disruption: |
| Network Port Utilization High | CRITICAL | Network ports are used to receive and transfer NFS, CIFS, and iSCSI protocol traffic between the customer host systems and the ONTAP volumes. If port utilization is high, the port becomes a bottleneck, which ultimately affects the performance of NFS, CIFS, and iSCSI workloads. A warning alert indicates that planned action should be taken to balance network traffic. A critical alert indicates that service disruption is imminent and emergency measures should be taken to balance network traffic to ensure service continuity. | If the critical threshold is breached, consider the following immediate actions to minimize service disruption: |
| NVMe Namespace Latency High | CRITICAL | NVMe namespaces are objects that serve I/O traffic driven by performance-sensitive applications such as databases. High NVMe namespace latency means that the applications themselves may suffer and be unable to accomplish their tasks. A warning alert indicates that planned action should be taken to move the LUN to an appropriate node or aggregate. A critical alert indicates that service disruption is imminent and emergency measures should be taken to ensure service continuity. | If the critical threshold is breached, consider immediate actions to minimize service disruption: |
| QTree Capacity Full | CRITICAL | A qtree is a logically defined file system that can exist as a special subdirectory of the root directory within a volume. Each qtree has a default space quota, or a quota defined by a quota policy, to limit the amount of data stored in the tree within the volume capacity. A warning alert indicates that planned action should be taken to increase the space. A critical alert indicates that service disruption is imminent and emergency measures should be taken to free up space to ensure service continuity. | If the critical threshold is breached, consider immediate actions to minimize service disruption: |
| QTree Capacity Hard Limit | CRITICAL | A qtree is a logically defined file system that can exist as a special subdirectory of the root directory within a volume. Each qtree has a space quota, measured in KBytes, that is used to store data in order to control the growth of user data in the volume and keep it from exceeding the volume's total capacity. A qtree maintains a soft storage capacity quota that alerts the user proactively before the qtree reaches its total capacity quota limit and can no longer store data. Monitoring the amount of data stored within a qtree ensures that the user receives uninterrupted data service. | If the critical threshold is breached, consider the following immediate actions to minimize service disruption: |
| QTree Capacity Soft Limit | WARNING | A qtree is a logically defined file system that can exist as a special subdirectory of the root directory within a volume. Each qtree has a space quota, measured in KBytes, that it can use to store data in order to control the growth of user data in the volume and keep it from exceeding the volume's total capacity. A qtree maintains a soft storage capacity quota that alerts the user proactively before the qtree reaches its total capacity quota limit and can no longer store data. Monitoring the amount of data stored within a qtree ensures that the user receives uninterrupted data service. | If the warning threshold is breached, consider the following immediate actions: |
| QTree Files Hard Limit | CRITICAL | A qtree is a logically defined file system that can exist as a special subdirectory of the root directory within a volume. Each qtree has a quota on the number of files that it can contain, to maintain a manageable file system size within the volume. A qtree maintains a hard file number quota beyond which new files in the tree are denied. Monitoring the number of files within a qtree ensures that the user receives uninterrupted data service. | If the critical threshold is breached, consider immediate actions to minimize service disruption: |
| QTree Files Soft Limit | WARNING | A qtree is a logically defined file system that can exist as a special subdirectory of the root directory within a volume. Each qtree has a quota on the number of files that it can contain, to maintain a manageable file system size within the volume. A qtree maintains a soft file number quota to alert the user proactively before the qtree reaches its file limit and can no longer store additional files. Monitoring the number of files within a qtree ensures that the user receives uninterrupted data service. | If the warning threshold is breached, plan to take the following immediate actions: |
| Snapshot Reserve Space Full | CRITICAL | Storage capacity of a volume is necessary to store application and customer data. A portion of that space, called snapshot reserved space, is used to store snapshots, which allow data to be protected locally. The more new and updated data stored in the ONTAP volume, the more snapshot capacity is used and the less snapshot storage capacity is available for future new or updated data. If the snapshot data capacity within a volume reaches the total snapshot reserve space, the customer might be unable to store new snapshot data, reducing the level of protection for the data in the volume. Monitoring the volume's used snapshot capacity ensures data service continuity. | If the critical threshold is breached, consider immediate actions to minimize service disruption: |
| Storage Capacity Limit | CRITICAL | When a storage pool (aggregate) is filling up, I/O operations slow down and eventually stop, resulting in a storage outage. A warning alert indicates that planned action should be taken soon to restore minimum free space. A critical alert indicates that service disruption is imminent and emergency measures should be taken to free up space to ensure service continuity. | If the critical threshold is breached, immediately consider the following actions to minimize service disruption: |
| Storage Performance Limit | CRITICAL | When a storage system reaches its performance limit, operations slow down, latency goes up, and workloads and applications may start failing. ONTAP evaluates the storage pool utilization for workloads and estimates what percentage of performance has been consumed. A warning alert indicates that planned action should be taken to reduce storage pool load, so that enough storage pool performance is left to service workload peaks. A critical alert indicates that a performance brownout is imminent and emergency measures should be taken to reduce storage pool load to ensure service continuity. | If the critical threshold is breached, consider the following immediate actions to minimize service disruption: |
| User Quota Capacity Hard Limit | CRITICAL | ONTAP recognizes the users of Unix or Windows systems who have the rights to access volumes, files, or directories within a volume. As a result, ONTAP allows customers to configure storage capacity for their users or groups of users of their Linux or Windows systems. The user or group policy quota limits the amount of space the user can use for their own data. A hard limit of this quota notifies the user when the capacity used within the volume is about to reach the total capacity quota. Monitoring the amount of data stored within a user or group quota ensures that the user receives uninterrupted data service. | If the critical threshold is breached, consider the following immediate actions to minimize service disruption: |
| User Quota Capacity Soft Limit | WARNING | ONTAP recognizes the users of Unix or Windows systems who have the rights to access volumes, files, or directories within a volume. As a result, ONTAP allows customers to configure storage capacity for their users or groups of users of their Linux or Windows systems. The user or group policy quota limits the amount of space the user can use for their own data. A soft limit of this quota proactively notifies the user when the capacity used within the volume is approaching the total capacity quota. Monitoring the amount of data stored within a user or group quota ensures that the user receives uninterrupted data service. | If the warning threshold is breached, plan to take the following immediate actions: |
| Volume Capacity Full | CRITICAL | Storage capacity of a volume is necessary to store application and customer data. The more data stored in the ONTAP volume, the less storage is available for future data. If the data stored within a volume reaches the volume's total storage capacity, the customer may be unable to store data due to lack of storage capacity. Monitoring the volume's used storage capacity ensures data service continuity. | If the critical threshold is breached, consider the following immediate actions to minimize service disruption: |
| Volume Inodes Limit | CRITICAL | Volumes that store files use index nodes (inodes) to store file metadata. When a volume exhausts its inode allocation, no more files can be added to it. A warning alert indicates that planned action should be taken to increase the number of available inodes. A critical alert indicates that file limit exhaustion is imminent and emergency measures should be taken to free up inodes to ensure service continuity. | If the critical threshold is breached, consider the following immediate actions to minimize service disruption: |
| Volume Latency High | CRITICAL | Volumes are objects that serve I/O traffic, often driven by performance-sensitive applications including DevOps applications, home directories, and databases. High volume latency means that the applications themselves may suffer and be unable to accomplish their tasks. Monitoring volume latency is critical to maintaining consistent application performance. The following are expected latencies based on media type: SSD up to 1-2 milliseconds; SAS up to 8-10 milliseconds; and SATA HDD 17-20 milliseconds. | If the critical threshold is breached, consider the following immediate actions to minimize service disruption: |
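
The soft-limit and hard-limit monitors above (qtree capacity and file limits, user quota capacity) follow one pattern: the soft limit gives early warning, and reaching the hard limit is the point at which new data or files are denied. The sketch below illustrates that pattern only; it is not how Data Infrastructure Insights evaluates quotas. The `QuotaState` enum and `quota_state` function are hypothetical names, and usage and limits are assumed to be in the same unit (KB for capacity, or a plain count for files).

```python
from enum import Enum
from typing import Optional


class QuotaState(Enum):
    """Hypothetical states mirroring the soft-limit and hard-limit monitors."""
    OK = "OK"
    WARNING = "WARNING"    # soft limit reached: plan corrective action
    CRITICAL = "CRITICAL"  # hard limit reached: new data or files may be denied


def quota_state(used: int, soft_limit: Optional[int], hard_limit: Optional[int]) -> QuotaState:
    """Classify usage against quota limits.

    `used`, `soft_limit`, and `hard_limit` must share one unit (for example,
    KB of capacity or a number of files). A limit of None means it is not set.
    """
    # Check the hard limit first so that exceeding both limits reports CRITICAL.
    if hard_limit is not None and used >= hard_limit:
        return QuotaState.CRITICAL
    if soft_limit is not None and used >= soft_limit:
        return QuotaState.WARNING
    return QuotaState.OK


# Hypothetical example: a qtree with an 800 KB soft limit and a 1000 KB hard limit.
print(quota_state(850, soft_limit=800, hard_limit=1000))  # QuotaState.WARNING
```

Checking the hard limit first keeps the result unambiguous when usage is past both limits.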

| Monitor Name | Severity | Monitor Description | Corrective Action |
| --- | --- | --- | --- |
| Node High Latency | WARNING / CRITICAL | Node latency has reached levels where it might affect the performance of the applications on the node. Lower node latency ensures consistent application performance. The expected latencies based on media type are: SSD up to 1-2 milliseconds; SAS up to 8-10 milliseconds; and SATA HDD 17-20 milliseconds. | If the critical threshold is breached, immediate actions should be taken to minimize service disruption: |
| Node Performance Limit | WARNING / CRITICAL | Node performance utilization has reached levels where it might affect the performance of the I/O and the applications supported by the node. Low node performance utilization ensures consistent application performance. | If the critical threshold is breached, immediate actions should be taken to minimize service disruption: |
| Storage VM High Latency | WARNING / CRITICAL | Storage VM (SVM) latency has reached levels where it might affect the performance of the applications on the storage VM. Lower storage VM latency ensures consistent application performance. The expected latencies based on media type are: SSD up to 1-2 milliseconds; SAS up to 8-10 milliseconds; and SATA HDD 17-20 milliseconds. | If the critical threshold is breached, immediately evaluate the threshold limits for volumes of the storage VM that have a QoS policy assigned, to verify whether they are causing the volume workloads to be throttled. |
| User Quota Files Hard Limit | CRITICAL | The number of files created within the volume has reached the critical limit, and additional files cannot be created. Monitoring the number of files stored ensures that the user receives uninterrupted data service. | Immediate actions are required to minimize service disruption if the critical threshold is breached.<br>Consider taking the following actions: |
| User Quota Files Soft Limit | WARNING | The number of files created within the volume has reached the threshold limit of the quota and is near the critical limit. You cannot create additional files if the quota reaches the critical limit. Monitoring the number of files stored by a user ensures that the user receives uninterrupted data service. | Consider immediate actions if the warning threshold is breached: |
| Volume Cache Miss Ratio | WARNING / CRITICAL | Volume Cache Miss Ratio is the percentage of read requests from client applications that are returned from disk instead of from the cache. An alert means that the volume has reached the set threshold. | If the critical threshold is breached, immediate actions should be taken to minimize service disruption: |
| Volume Qtree Quota Overcommit | WARNING / CRITICAL | Volume Qtree Quota Overcommit specifies the percentage at which a volume is considered to be overcommitted by its qtree quotas. An alert means that the volume has reached the set qtree quota threshold. Monitoring the volume qtree quota overcommit ensures that the user receives uninterrupted data service. | If the critical threshold is breached, immediate actions should be taken to minimize service disruption: |
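
Several latency monitors (LUN, volume, node, and storage VM) quote the same expected latency ranges by media type. As a rough sketch, assuming you have an observed latency in milliseconds and know the media type, you could compare it with the upper end of the quoted range. The dictionary and function names are hypothetical, and this comparison is only a rule of thumb; the monitors themselves alert on their own predefined conditions.

```python
from typing import Dict, Tuple

# Upper ends of the expected latency ranges quoted in the monitor descriptions,
# in milliseconds: SSD up to 1-2 ms, SAS up to 8-10 ms, SATA HDD 17-20 ms.
EXPECTED_MAX_LATENCY_MS: Dict[str, float] = {
    "SSD": 2.0,
    "SAS": 10.0,
    "SATA": 20.0,
}


def compare_to_expected(media_type: str, latency_ms: float) -> Tuple[bool, float]:
    """Return (exceeds_expected, ratio) for an observed latency.

    `ratio` is the observed latency divided by the expected upper bound, so a
    value above 1.0 means the latency is higher than the quoted range.
    """
    key = media_type.strip().upper()
    if key not in EXPECTED_MAX_LATENCY_MS:
        raise ValueError(f"unknown media type: {media_type!r}")
    ratio = latency_ms / EXPECTED_MAX_LATENCY_MS[key]
    return ratio > 1.0, ratio


# Example: 12 ms on SAS media is above the quoted 8-10 ms range.
exceeds, ratio = compare_to_expected("sas", 12.0)
print(exceeds, round(ratio, 2))  # True 1.2
```

Returning the ratio as well as the boolean shows how far above the expected range a workload is, which can help decide between planned and emergency action.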

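The Volume Cache Miss Ratio monitor is defined as the percentage of read requests served from disk rather than from cache. A minimal sketch of that calculation, paired with a generic warning/critical comparison, follows. The function names and the 20% and 40% thresholds in the example are hypothetical values chosen for illustration, not the monitor's defaults.

```python
def cache_miss_ratio(reads_from_disk: int, total_reads: int) -> float:
    """Percentage of read requests returned from disk instead of from cache."""
    if not 0 <= reads_from_disk <= total_reads:
        raise ValueError("reads_from_disk must be between 0 and total_reads")
    if total_reads == 0:
        return 0.0  # no reads in the interval, so nothing was missed
    return 100.0 * reads_from_disk / total_reads


def severity(value: float, warning: float, critical: float) -> str:
    """Map a metric value to OK / WARNING / CRITICAL using two thresholds."""
    if value >= critical:
        return "CRITICAL"
    if value >= warning:
        return "WARNING"
    return "OK"


# Example with hypothetical thresholds of 20% (warning) and 40% (critical).
ratio = cache_miss_ratio(reads_from_disk=300, total_reads=1000)
print(ratio, severity(ratio, warning=20.0, critical=40.0))  # 30.0 WARNING
```
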
Log Monitors

Monitor Name |

Severity |

Description |

Corrective Action |

AWS Credentials Not Initialized |

INFO |

This event occurs when a module attempts to access Amazon Web Services (AWS) Identity and Access Management (IAM) role-based credentials from the cloud credentials thread before they are initialized. |

Wait for the cloud credentials thread, as well as the system, to complete initialization. |

Cloud Tier Unreachable |

CRITICAL |

A storage node cannot connect to Cloud Tier object store API. Some data will be inaccessible. |

If you use on-premises products, perform the following corrective actions: …Verify that your intercluster LIF is online and functional by using the "network interface show" command.…Check the network connectivity to the object store server by using the "ping" command over the destination node intercluster LIF.…Ensure the following:…The configuration of your object store has not changed.…The login and connectivity information is still valid.…Contact NetApp technical support if the issue persists. |

Disk Out of Service |

INFO |

This event occurs when a disk is removed from service because it has been marked failed, is being sanitized, or has entered the Maintenance Center. |

None. |

FlexGroup Constituent Full |

CRITICAL |

A constituent within a FlexGroup volume is full, which might cause a potential disruption of service. You can still create or expand files on the FlexGroup volume. However, none of the files that are stored on the constituent can be modified. As a result, you might see random out-of-space errors when you try to perform write operations on the FlexGroup volume. |

It is recommended that you add capacity to the FlexGroup volume by using the "volume modify -files +X" command.…Alternatively, delete files from the FlexGroup volume. However, it is difficult to determine which files have landed on the constituent. |

Flexgroup Constituent Nearly Full |

WARNING |

A constituent within a FlexGroup volume is nearly out of space, which might cause a potential disruption of service. Files can be created and expanded. However, if the constituent runs out of space, you might not be able to append to or modify the files on the constituent. |

It is recommended that you add capacity to the FlexGroup volume by using the "volume modify -files +X" command.…Alternatively, delete files from the FlexGroup volume. However, it is difficult to determine which files have landed on the constituent. |

FlexGroup Constituent Nearly Out of Inodes |

WARNING |

A constituent within a FlexGroup volume is almost out of inodes, which might cause a potential disruption of service. The constituent receives lesser create requests than average. This might impact the overall performance of the FlexGroup volume, because the requests are routed to constituents with more inodes. |

It is recommended that you add capacity to the FlexGroup volume by using the "volume modify -files +X" command.…Alternatively, delete files from the FlexGroup volume. However, it is difficult to determine which files have landed on the constituent. |

FlexGroup Constituent Out of Inodes |

CRITICAL |

A constituent of a FlexGroup volume has run out of inodes, which might cause a potential disruption of service. You cannot create new files on this constituent. This might lead to an overall imbalanced distribution of content across the FlexGroup volume. |

It is recommended that you add capacity to the FlexGroup volume by using the "volume modify -files +X" command.…Alternatively, delete files from the FlexGroup volume. However, it is difficult to determine which files have landed on the constituent. |

LUN Offline |

INFO |

This event occurs when a LUN is brought offline manually. |

Bring the LUN back online. |

Main Unit Fan Failed |

WARNING |

One or more main unit fans have failed. The system remains operational.…However, if the condition persists for too long, the overtemperature might trigger an automatic shutdown. |

Reseat the failed fans. If the error persists, replace them. |

Main Unit Fan in Warning State |

INFO |

This event occurs when one or more main unit fans are in a warning state. |

Replace the indicated fans to avoid overheating. |

NVRAM Battery Low |

WARNING |

The NVRAM battery capacity is critically low. There might be a potential data loss if the battery runs out of power.…Your system generates and transmits an AutoSupport or "call home" message to NetApp technical support and the configured destinations if it is configured to do so. The successful delivery of an AutoSupport message significantly improves problem determination and resolution. |

Perform the following corrective actions:…View the battery's current status, capacity, and charging state by using the "system node environment sensors show" command.…If the battery was replaced recently or the system was non-operational for an extended period of time, monitor the battery to verify that it is charging properly.…Contact NetApp technical support if the battery runtime continues to decrease below critical levels, and the storage system shuts down automatically. |

Service Processor Not Configured |

WARNING |

This event occurs on a weekly basis, to remind you to configure the Service Processor (SP). The SP is a physical device that is incorporated into your system to provide remote access and remote management capabilities. You should configure the SP to use its full functionality. |

Perform the following corrective actions:…Configure the SP by using the "system service-processor network modify" command.…Optionally, obtain the MAC address of the SP by using the "system service-processor network show" command.…Verify the SP network configuration by using the "system service-processor network show" command.…Verify that the SP can send an AutoSupport email by using the "system service-processor autosupport invoke" command. |

Service Processor Offline |

CRITICAL |

ONTAP is no longer receiving heartbeats from the Service Processor (SP), even though all the SP recovery actions have been taken. ONTAP cannot monitor the health of the hardware without the SP.…The system will shut down to prevent hardware damage and data loss. Set up a panic alert to be notified immediately if the SP goes offline. |

Power-cycle the system by performing the following actions:…Pull the controller out from the chassis.…Push the controller back in.…Turn the controller back on.…If the problem persists, replace the controller module. |

Shelf Fans Failed |

CRITICAL |

The indicated cooling fan or fan module of the shelf has failed. The disks in the shelf might not receive enough cooling airflow, which might result in disk failure. |

Perform the following corrective actions:…Verify that the fan module is fully seated and secured. |

System Cannot Operate Due to Main Unit Fan Failure |

CRITICAL |

One or more main unit fans have failed, disrupting system operation. This might lead to a potential data loss. |

Replace the failed fans. |

Unassigned Disks |

INFO |

System has unassigned disks - capacity is being wasted and your system may have some misconfiguration or partial configuration change applied. |

Perform the following corrective actions:…Determine which disks are unassigned by using the "disk show -n" command.…Assign the disks to a system by using the "disk assign" command. |

Antivirus Server Busy |

WARNING |

The antivirus server is too busy to accept any new scan requests. |

If this message occurs frequently, ensure that there are enough antivirus servers to handle the virus scan load generated by the SVM. |

AWS Credentials for IAM Role Expired |

CRITICAL |

Cloud Volume ONTAP has become inaccessible. The Identity and Access Management (IAM) role-based credentials have expired. The credentials are acquired from the Amazon Web Services (AWS) metadata server using the IAM role, and are used to sign API requests to Amazon Simple Storage Service (Amazon S3). |

Perform the following:…Log in to the AWS EC2 Management Console.…Navigate to the Instances page.…Find the instance for the Cloud Volumes ONTAP deployment and check its health.…Verify that the AWS IAM role associated with the instance is valid and has been granted proper privileges to the instance. |

AWS Credentials for IAM Role Not Found |

CRITICAL |

The cloud credentials thread cannot acquire the Amazon Web Services (AWS) Identity and Access Management (IAM) role-based credentials from the AWS metadata server. The credentials are used to sign API requests to Amazon Simple Storage Service (Amazon S3). Cloud Volume ONTAP has become inaccessible.… |

Perform the following:…Log in to the AWS EC2 Management Console.…Navigate to the Instances page.…Find the instance for the Cloud Volumes ONTAP deployment and check its health.…Verify that the AWS IAM role associated with the instance is valid and has been granted proper privileges to the instance. |

AWS Credentials for IAM Role Not Valid |

CRITICAL |

The Identity and Access Management (IAM) role-based credentials are not valid. The credentials are acquired from the Amazon Web Services (AWS) metadata server using the IAM role, and are used to sign API requests to Amazon Simple Storage Service (Amazon S3). Cloud Volume ONTAP has become inaccessible. |

Perform the following:…Log in to the AWS EC2 Management Console.…Navigate to the Instances page.…Find the instance for the Cloud Volumes ONTAP deployment and check its health.…Verify that the AWS IAM role associated with the instance is valid and has been granted proper privileges to the instance. |

AWS IAM Role Not Found |

CRITICAL |

The Identity and Access Management (IAM) roles thread cannot find an Amazon Web Services (AWS) IAM role on the AWS metadata server. The IAM role is required to acquire role-based credentials used to sign API requests to Amazon Simple Storage Service (Amazon S3). Cloud Volume ONTAP has become inaccessible.… |

Perform the following:…Log in to the AWS EC2 Management Console.…Navigate to the Instances page.…Find the instance for the Cloud Volumes ONTAP deployment and check its health.…Verify that the AWS IAM role associated with the instance is valid. |

AWS IAM Role Not Valid |

CRITICAL |

The Amazon Web Services (AWS) Identity and Access Management (IAM) role on the AWS metadata server is not valid. The Cloud Volume ONTAP has become inaccessible.… |

Perform the following:…Log in to the AWS EC2 Management Console.…Navigate to the Instances page.…Find the instance for the Cloud Volumes ONTAP deployment and check its health.…Verify that the AWS IAM role associated with the instance is valid and has been granted proper privileges to the instance. |

AWS Metadata Server Connection Fail |

CRITICAL |

The Identity and Access Management (IAM) roles thread cannot establish a communication link with the Amazon Web Services (AWS) metadata server. Communication should be established to acquire the necessary AWS IAM role-based credentials used to sign API requests to Amazon Simple Storage Service (Amazon S3). Cloud Volume ONTAP has become inaccessible.… |

Perform the following:…Log in to the AWS EC2 Management Console.…Navigate to the Instances page.…Find the instance for the Cloud Volumes ONTAP deployment and check its health.… |

FabricPool Space Usage Limit Nearly Reached |

WARNING |

The total cluster-wide FabricPool space usage of object stores from capacity-licensed providers has nearly reached the licensed limit. |

Perform the following corrective actions:…Check the percentage of the licensed capacity used by each FabricPool storage tier by using the "storage aggregate object-store show-space" command.…Delete Snapshot copies from volumes with the tiering policy "snapshot" or "backup" by using the "volume snapshot delete" command to clear up space.…Install a new license on the cluster to increase the licensed capacity. |

FabricPool Space Usage Limit Reached |

CRITICAL |

The total cluster-wide FabricPool space usage of object stores from capacity-licensed providers has reached the license limit. |

Perform the following corrective actions:…Check the percentage of the licensed capacity used by each FabricPool storage tier by using the "storage aggregate object-store show-space" command.…Delete Snapshot copies from volumes with the tiering policy "snapshot" or "backup" by using the "volume snapshot delete" command to clear up space.…Install a new license on the cluster to increase the licensed capacity. |

Giveback of Aggregate Failed |

CRITICAL |

This event occurs during the migration of an aggregate as part of a storage failover (SFO) giveback, when the destination node cannot reach the object stores. |

Perform the following corrective actions:…Verify that your intercluster LIF is online and functional by using the "network interface show" command.…Check network connectivity to the object store server by using the"'ping" command over the destination node intercluster LIF. …Verify that the configuration of your object store has not changed and that login and connectivity information is still accurate by using the "aggregate object-store config show" command.…Alternatively, you can override the error by specifying false for the "require-partner-waiting" parameter of the giveback command.…Contact NetApp technical support for more information or assistance. |

HA Interconnect Down |

WARNING |

The high-availability (HA) interconnect is down. Risk of service outage when failover is not available. |

Corrective actions depend on the number and type of HA interconnect links supported by the platform, as well as the reason why the interconnect is down. …If the links are down:…Verify that both controllers in the HA pair are operational.…For externally connected links, make sure that the interconnect cables are connected properly and that the small form-factor pluggables (SFPs), if applicable, are seated properly on both controllers.…For internally connected links, disable and re-enable the links, one after the other, by using the "ic link off" and "ic link on" commands. …If links are disabled, enable the links by using the "ic link on" command. …If a peer is not connected, disable and re-enable the links, one after the other, by using the "ic link off" and "ic link on" commands.…Contact NetApp technical support if the issue persists. |

Max Sessions Per User Exceeded |

WARNING |

You have exceeded the maximum number of sessions allowed per user over a TCP connection. Any request to establish a session will be denied until some sessions are released. … |

Perform the following corrective actions: …Inspect all the applications that run on the client, and terminate any that are not operating properly.…Reboot the client.…Check if the issue is caused by a new or existing application:…If the application is new, set a higher threshold for the client by using the "cifs option modify -max-opens-same-file-per-tree" command. |

Max Times Open Per File Exceeded |

WARNING |

You have exceeded the maximum number of times that you can open the file over a TCP connection. Any request to open this file will be denied until you close some open instances of the file. This typically indicates abnormal application behavior.… |

Perform the following corrective actions:…Inspect the applications that run on the client using this TCP connection. |

NetBIOS Name Conflict |

CRITICAL |

The NetBIOS Name Service has received a negative response to a name registration request, from a remote machine. This is typically caused by a conflict in the NetBIOS name or an alias. As a result, clients might not be able to access data or connect to the right data-serving node in the cluster. |

Perform any one of the following corrective actions:…If there is a conflict in the NetBIOS name or an alias, perform one of the following:…Delete the duplicate NetBIOS alias by using the "vserver cifs delete -aliases alias -vserver vserver" command.…Rename a NetBIOS alias by deleting the duplicate name and adding an alias with a new name by using the "vserver cifs create -aliases alias -vserver vserver" command. …If there are no aliases configured and there is a conflict in the NetBIOS name, then rename the CIFS server by using the "vserver cifs delete -vserver vserver" and "vserver cifs create -cifs-server netbiosname" commands. |

NFSv4 Store Pool Exhausted |

CRITICAL |

An NFSv4 store pool has been exhausted. |

If the NFS server is unresponsive for more than 10 minutes after this event, contact NetApp technical support. |

No Registered Scan Engine |

CRITICAL |

The antivirus connector notified ONTAP that it does not have a registered scan engine. This might cause data unavailability if the "scan-mandatory" option is enabled. |

Perform the following corrective actions:…Ensure that the scan engine software installed on the antivirus server is compatible with ONTAP.…Ensure that scan engine software is running and configured to connect to the antivirus connector over local loopback. |

No Vscan Connection |

CRITICAL |

ONTAP has no Vscan connection to service virus scan requests. This might cause data unavailability if the "scan-mandatory" option is enabled. |

Ensure that the scanner pool is properly configured and the antivirus servers are active and connected to ONTAP. |

Node Root Volume Space Low |

CRITICAL |

The system has detected that the root volume is dangerously low on space. The node is not fully operational. Data LIFs might have failed over within the cluster, limiting NFS and CIFS access on the node. Administrative capability is limited to local recovery procedures that free up space on the root volume. |

Perform the following corrective actions:…Free up space on the root volume by deleting old Snapshot copies, deleting files you no longer need from the /mroot directory, or expanding the root volume capacity.…Reboot the controller.…Contact NetApp technical support for more information or assistance. |

Nonexistent Admin Share |

CRITICAL |

Vscan issue: a client has attempted to connect to a nonexistent ONTAP_ADMIN$ share. |

Ensure that Vscan is enabled for the mentioned SVM ID. Enabling Vscan on an SVM causes the ONTAP_ADMIN$ share to be created for the SVM automatically. |

NVMe Namespace Out of Space |

CRITICAL |

An NVMe namespace has been brought offline because of a write failure caused by lack of space. |

Add space to the volume, and then bring the NVMe namespace online by using the "vserver nvme namespace modify" command. |

NVMe-oF Grace Period Active |

WARNING |

This event occurs on a daily basis when the NVMe over Fabrics (NVMe-oF) protocol is in use and the grace period of the license is active. The NVMe-oF functionality requires a license after the license grace period expires. NVMe-oF functionality is disabled when the license grace period is over. |

Contact your sales representative to obtain an NVMe-oF license, and add it to the cluster, or remove all instances of NVMe-oF configuration from the cluster. |

NVMe-oF Grace Period Expired |

WARNING |

The NVMe over Fabrics (NVMe-oF) license grace period is over and the NVMe-oF functionality is disabled. |

Contact your sales representative to obtain an NVMe-oF license, and add it to the cluster. |

NVMe-oF Grace Period Start |

WARNING |

The NVMe over Fabrics (NVMe-oF) configuration was detected during the upgrade to ONTAP 9.5 software. NVMe-oF functionality requires a license after the license grace period expires. |

Contact your sales representative to obtain an NVMe-oF license, and add it to the cluster. |

Object Store Host Unresolvable |

CRITICAL |

The object store server host name cannot be resolved to an IP address. The object store client cannot communicate with the object store server until the host name resolves to an IP address. As a result, data might be inaccessible. |

Check the DNS configuration to verify that the host name is configured correctly with an IP address. |

Object Store Intercluster LIF Down |

CRITICAL |

The object-store client cannot find an operational LIF to communicate with the object store server. The node will not allow object store client traffic until the intercluster LIF is operational. As a result, data might be inaccessible. |

Perform the following corrective actions:…Check the intercluster LIF status by using the "network interface show -role intercluster" command.…Verify that the intercluster LIF is configured correctly and operational.…If an intercluster LIF is not configured, add it by using the "network interface create -role intercluster" command. |

Object Store Signature Mismatch |

CRITICAL |

The request signature sent to the object store server does not match the signature calculated by the client. As a result, data might be inaccessible. |

Verify that the secret access key is configured correctly. If it is configured correctly, contact NetApp technical support for assistance. |

READDIR Timeout |

CRITICAL |

A READDIR file operation has exceeded the time it is allowed to run in WAFL. This can be caused by very large or sparse directories. Corrective action is recommended. |

Perform the following corrective actions:…Find information specific to recent directories that have had READDIR file operations expire by using the following 'diag' privilege nodeshell CLI command: |

Relocation of Aggregate Failed |

CRITICAL |

This event occurs during the relocation of an aggregate, when the destination node cannot reach the object stores. |

Perform the following corrective actions:…Verify that your intercluster LIF is online and functional by using the "network interface show" command.…Check network connectivity to the object store server by using the "ping" command over the destination node intercluster LIF.…Verify that the configuration of your object store has not changed and that login and connectivity information is still accurate by using the "aggregate object-store config show" command.…Alternatively, you can override the error by using the "override-destination-checks" parameter of the relocation command.…Contact NetApp technical support for more information or assistance. |

Shadow Copy Failed |

CRITICAL |

A Volume Shadow Copy Service (VSS) operation has failed. VSS is a Microsoft Server backup and restore service. |

Check the following using the information provided in the event message:…Is shadow copy configuration enabled?…Are the appropriate licenses installed? …On which shares is the shadow copy operation performed?…Is the share name correct?…Does the share path exist?…What are the states of the shadow copy set and its shadow copies? |

Storage Switch Power Supplies Failed |

WARNING |

A power supply is missing in the cluster switch. Redundancy is reduced, and any further power failure risks an outage. |

Perform the following corrective actions:…Ensure that the mains power supplying the cluster switch is turned on.…Ensure that the power cord is connected to the power supply.…Contact NetApp technical support if the issue persists. |

Too Many CIFS Authentication |

WARNING |

Many authentication negotiations have occurred simultaneously. There are 256 incomplete new session requests from this client. |

Investigate why the client has created 256 or more new connection requests. You might have to contact the vendor of the client or of the application to determine why the error occurred. |

Unauthorized User Access to Admin Share |

WARNING |

A client has attempted to connect to the privileged ONTAP_ADMIN$ share even though their logged-in user is not an allowed user. |

Perform the following corrective actions:…Ensure that the mentioned username and IP address are configured in one of the active Vscan scanner pools.…Check the scanner pool configuration that is currently active by using the "vserver vscan scanner pool show-active" command. |

Virus Detected |

WARNING |

A Vscan server has reported an error to the storage system. This typically indicates that a virus has been found. However, other errors on the Vscan server can cause this event.…Client access to the file is denied. The Vscan server might, depending on its settings and configuration, clean the file, quarantine it, or delete it. |

Check the log of the Vscan server reported in the "syslog" event to see if it was able to successfully clean, quarantine, or delete the infected file. If it was not able to do so, a system administrator might have to manually delete the file. |

Volume Offline |

INFO |

This message indicates that a volume has been taken offline. |

Bring the volume back online. |

Volume Restricted |

INFO |

This event indicates that a flexible volume has been restricted. |

Bring the volume back online. |

Storage VM Stop Succeeded |

INFO |

This message occurs when a 'vserver stop' operation succeeds. |

Use the 'vserver start' command to restart data access on the storage VM. |

Node Panic |

WARNING |

This event is issued when a node panic occurs. |

Contact NetApp customer support. |

Anti-Ransomware Log Monitors

Monitor Name |

Severity |

Description |

Corrective Action |

Storage VM Anti-ransomware Monitoring Disabled |

WARNING |

The anti-ransomware monitoring for the storage VM is disabled. Enable anti-ransomware to protect the storage VM. |

None |

Storage VM Anti-ransomware Monitoring Enabled (Learning Mode) |

INFO |

The anti-ransomware monitoring for the storage VM is enabled in learning mode. |

None |

Volume Anti-ransomware Monitoring Enabled |

INFO |

The anti-ransomware monitoring for the volume is enabled. |

None |

Volume Anti-ransomware Monitoring Disabled |

WARNING |

The anti-ransomware monitoring for the volume is disabled. Enable anti-ransomware to protect the volume. |

None |

Volume Anti-ransomware Monitoring Enabled (Learning Mode) |

INFO |

The anti-ransomware monitoring for the volume is enabled in learning mode. |

None |

Volume Anti-ransomware Monitoring Paused (Learning Mode) |

WARNING |

The anti-ransomware monitoring for the volume is paused in learning mode. |

None |

Volume Anti-ransomware Monitoring Paused |

WARNING |

The anti-ransomware monitoring for the volume is paused. |

None |

Volume Anti-ransomware Monitoring Disabling |

WARNING |

The anti-ransomware monitoring for the volume is being disabled. |

None |

Ransomware Activity Detected |

CRITICAL |

To protect the data from the detected ransomware, a Snapshot copy has been taken that can be used to restore original data. |

Refer to the "FINAL-DOCUMENT-NAME" to take remedial measures for ransomware activity. |

FSx for NetApp ONTAP Monitors

Monitor Name |

Thresholds |

Monitor Description |

Corrective Action |

FSx Volume Capacity is Full |

Warning @ > 85 %…Critical @ > 95 % |

Storage capacity of a volume is necessary to store application and customer data. The more data stored in the ONTAP volume, the less storage is available for future data. If the used data capacity within a volume reaches the total storage capacity, the customer may be unable to store data due to lack of storage capacity. Monitoring the volume used storage capacity ensures data services continuity. |

Immediate actions are required to minimize service disruption if critical threshold is breached:…1. Consider deleting data that is no longer needed to free up space. |

FSx Volume High Latency |

Warning @ > 1000 µs…Critical @ > 2000 µs |

Volumes are objects that serve the IO traffic often driven by performance-sensitive applications, including DevOps applications, home directories, and databases. High volume latency means that the applications themselves may suffer and be unable to accomplish their tasks. Monitoring volume latency is critical to maintaining consistent application performance. |

Immediate actions are required to minimize service disruption if critical threshold is breached:…1. If the volume has a QoS policy assigned to it, evaluate its limit thresholds in case they are causing the volume workload to get throttled……Plan to take the following actions soon if warning threshold is breached:…1. If the volume has a QoS policy assigned to it, evaluate its limit thresholds in case they are causing the volume workload to get throttled.…2. If the node is also experiencing high utilization, move the volume to another node or reduce the total workload of the node. |

FSx Volume Inodes Limit |

Warning @ > 85 %…Critical @ > 95 % |

Volumes that store files use index nodes (inodes) to store file metadata. When a volume exhausts its inode allocation, no more files can be added to it. A warning alert indicates that planned action should be taken to increase the number of available inodes. A critical alert indicates that file limit exhaustion is imminent and emergency measures should be taken to free up inodes to ensure service continuity. |

Immediate actions are required to minimize service disruption if critical threshold is breached:…1. Consider increasing the inodes value for the volume. If the inodes value is already at the max, then consider splitting the volume into two or more volumes because the file system has grown beyond the maximum size……Plan to take the following actions soon if warning threshold is breached:…1. Consider increasing the inodes value for the volume. If the inodes value is already at the max, then consider splitting the volume into two or more volumes because the file system has grown beyond the maximum size |

FSx Volume Qtree Quota Overcommit |

Warning @ > 95 %…Critical @ > 100 % |

Volume Qtree Quota Overcommit specifies the percentage at which a volume is considered to be overcommitted by its qtree quotas. An alert means that the set threshold for qtree quotas has been reached for the volume. Monitoring the volume qtree quota overcommit ensures that the user receives uninterrupted data service. |

If critical threshold is breached, then immediate actions should be taken to minimize service disruption: |

FSx Snapshot Reserve Space is Full |

Warning @ > 90 %…Critical @ > 95 % |

Storage capacity of a volume is necessary to store application and customer data. A portion of that space, called snapshot reserved space, is used to store snapshots, which allow data to be protected locally. The more new and updated data is stored in the ONTAP volume, the more snapshot capacity is used and the less snapshot storage capacity is available for future new or updated data. If the snapshot data capacity within a volume reaches the total snapshot reserve space, the customer may be unable to store new snapshot data, reducing the level of protection for the data in the volume. Monitoring the volume used snapshot capacity ensures data services continuity. |

Immediate actions are required to minimize service disruption if critical threshold is breached:…1. Consider configuring snapshots to use data space in the volume when the snapshot reserve is full…2. Consider deleting some older snapshots that may not be needed anymore to free up space……Plan to take the following actions soon if warning threshold is breached:…1. Consider increasing the snapshot reserve space within the volume to accommodate the growth…2. Consider configuring snapshots to use data space in the volume when the snapshot reserve is full |

FSx Volume Cache Miss Ratio |

Warning @ > 95 %…Critical @ > 100 % |

Volume Cache Miss Ratio is the percentage of read requests from client applications that are served from disk instead of from the cache. An alert means that the volume's cache miss ratio has reached the set threshold. |

If critical threshold is breached, then immediate actions should be taken to minimize service disruption: |
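The FSx thresholds above use a two-level "Warning @ > W ... Critical @ > C" notation. The following Python sketch shows one way to read that notation, using the FSx Volume Capacity is Full thresholds (85 % and 95 %) as the example. It is illustrative only: the helper names `classify` and `percent_used` are hypothetical, and the strict greater-than comparison is an assumption taken from the ">" in the table, not from the product's implementation.

```python
from typing import Optional


def percent_used(used_bytes: int, total_bytes: int) -> float:
    """Used capacity as a percentage of total capacity."""
    return 100.0 * used_bytes / total_bytes


def classify(value: float, warning: float, critical: float) -> Optional[str]:
    """Return the alert level for a 'Warning @ > W ... Critical @ > C' monitor.

    Hypothetical helper: checks the critical level first, then the warning level,
    using a strict '>' comparison as suggested by the table notation.
    """
    if value > critical:
        return "CRITICAL"
    if value > warning:
        return "WARNING"
    return None  # below both thresholds: no alert


# FSx Volume Capacity is Full: Warning @ > 85 %, Critical @ > 95 %
GIB = 1024 ** 3
for used_gib in (80, 90, 97):
    pct = percent_used(used_gib * GIB, 100 * GIB)
    print(f"{pct:.0f}% used -> {classify(pct, warning=85, critical=95)}")
# Prints: 80% used -> None, 90% used -> WARNING, 97% used -> CRITICAL
```

Because a value exactly at a threshold does not exceed it under this reading, a volume at exactly 95 % would still be reported as WARNING.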

K8s Monitors

Monitor Name |

Description |

Corrective Actions |

Severity/Threshold |

Persistent Volume Latency High |

High persistent volume latency means that the applications themselves may suffer and be unable to accomplish their tasks. Monitoring persistent volume latency is critical to maintaining consistent application performance. Expected latencies by media type are: SSD up to 1-2 milliseconds, SAS up to 8-10 milliseconds, and SATA HDD up to 17-20 milliseconds. |

Immediate Actions |

Warning @ > 6,000 μs |

Cluster Memory Saturation High |

Cluster allocatable memory saturation is high. |

Add nodes. |

Warning @ > 80 % |

POD Attach Failed |

This alert occurs when attaching a volume to a pod fails. |

|

Warning |

High Retransmit Rate |

High TCP Retransmit Rate |

Check for Network congestion - Identify workloads that consume a lot of network bandwidth. |

Warning @ > 10 % |

Node File System Capacity High |

Node File System Capacity High |

Increase the size of the node disks to ensure that there is sufficient room for the application files. |

Warning @ > 80 % |

Workload Network Jitter High |

High TCP Jitter (high latency/response time variations) |

Check for Network congestion. Identify workloads that consume a lot of network bandwidth. |

Warning @ > 30 ms |

Persistent Volume Throughput |

MBPS thresholds on persistent volumes can be used to alert an administrator when persistent volumes exceed predefined performance expectations, potentially impacting other persistent volumes. Activating this monitor will generate alerts appropriate for the typical throughput profile of persistent volumes on SSDs. This monitor will cover all persistent volumes on your tenant. The warning and critical threshold values can be adjusted based on your monitoring goals by duplicating this monitor and setting thresholds appropriate for your storage class. A duplicated monitor can be further targeted to a subset of the persistent volumes on your tenant. |

Immediate Actions |

Warning @ > 10,000 MB/s |

Container at Risk of Going OOM Killed |

The container's memory limits are set too low. The container is at risk of eviction (Out of Memory Kill). |

Increase container memory limits. |

Warning @ > 95 % |

Workload Down |

Workload has no healthy pods. |

|

Critical @ < 1 |

Persistent Volume Claim Failed Binding |

This alert occurs when binding a PVC fails. |

|

Warning |

ResourceQuota Mem Limits About to Exceed |

Memory limits for the namespace are about to exceed its ResourceQuota. |

|

Warning @ > 80 % |

ResourceQuota Mem Requests About to Exceed |

Memory requests for the namespace are about to exceed its ResourceQuota. |

|

Warning @ > 80 % |

Node Creation Failed |

The node could not be scheduled because of a configuration error. |

Check the Kubernetes event log for the cause of the configuration failure. |

Critical |

Persistent Volume Reclamation Failed |

The volume has failed its automatic reclamation. |

|

Warning @ > 0 B |

Container CPU Throttling |

The container's CPU limits are set too low. Container processes are slowed. |

Increase container CPU limits. |

Warning @ > 95 % |

Service Load Balancer Failed to Delete |

|

|

Warning |

Persistent Volume IOPS |

IOPS thresholds on persistent volumes can be used to alert an administrator when persistent volumes exceed predefined performance expectations. Activating this monitor will generate alerts appropriate for the typical IOPS profile of persistent volumes. This monitor will cover all persistent volumes on your tenant. The warning and critical threshold values can be adjusted based on your monitoring goals by duplicating this monitor and setting thresholds appropriate for your workload. |

Immediate Actions |

Warning @ > 20,000 IO/s |

Service Load Balancer Failed to Update |

|

|

Warning |

POD Failed Mount |

This alert occurs when a volume mount fails on a pod. |

|

Warning |

Node PID Pressure |

Available process identifiers on the (Linux) node have fallen below an eviction threshold. |

Find and fix pods that generate many processes and starve the node of available process IDs. |

Critical @ > 0 |

Pod Image Pull Failure |

Kubernetes failed to pull the pod container image. |

Make sure the pod's image is spelled correctly in the pod configuration. |

Warning |

Job Running Too Long |

Job is running for too long. |

|

Warning @ > 1 hr |

Node Memory High |

Node memory usage is high |

Add nodes. |

Warning @ > 85 % |

ResourceQuota CPU Limits About to Exceed |

CPU limits for the namespace are about to exceed its ResourceQuota. |

|

Warning @ > 80 % |

Pod Crash Loop Backoff |

Pod has crashed and attempted to restart multiple times. |

|

Critical @ > 3 |

Node CPU High |

Node CPU usage is high. |

Add nodes. |

Warning @ > 80 % |

Workload Network Latency RTT High |

High TCP RTT (Round Trip Time) latency |

Check for Network congestion - Identify workloads that consume a lot of network bandwidth. |

Warning @ > 150 ms |

Job Failed |

Job did not complete successfully due to a node crash or reboot, resource exhaustion, job timeout, or pod scheduling failure. |

Check the Kubernetes event logs for failure causes. |

Warning @ > 1 |

Persistent Volume Full in a Few Days |

Persistent Volume will run out of space in a few days. |

Increase the volume size to ensure that there is sufficient room for the application files. |

Warning @ < 8 days |

Node Memory Pressure |

Node is running out of memory. Available memory has met eviction threshold. |

Add nodes. |

Critical @ > 0 |

Node Unready |

Node has been unready for 5 minutes. |

Verify that the node has enough CPU, memory, and disk resources. |

Critical @ < 1 |

Persistent Volume Capacity High |

Persistent Volume backend used capacity is high. |

Increase the volume size to ensure that there is sufficient room for the application files. |

Warning @ > 80 % |

Service Load Balancer Failed to Create |

Service Load Balancer Create Failed |

|

Critical |

Workload Replica Mismatch |

Some pods are currently not available for a Deployment or DaemonSet. |

|

Warning @ > 1 |

ResourceQuota CPU Requests About to Exceed |

CPU requests for the namespace are about to exceed its ResourceQuota. |

|

Warning @ > 80 % |

Node Disk Pressure |

Available disk space and inodes on either the node's root filesystem or image filesystem has satisfied an eviction threshold. |

Increase the size of the node disks to ensure that there is sufficient room for the application files. |

Critical @ > 0 |

Cluster CPU Saturation High |

Cluster allocatable CPU saturation is high. |

Add nodes. |

Warning @ > 80 % |
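The Persistent Volume Full in a Few Days monitor alerts when the projected time until the volume is full falls below 8 days. The table does not describe how that projection is calculated, so the Python sketch below assumes a simple linear extrapolation from two usage samples. The function `days_until_full` and the sample values are hypothetical and are not taken from the product.

```python
from datetime import datetime, timedelta
from typing import Optional


def days_until_full(used_then: float, used_now: float, capacity: float,
                    then: datetime, now: datetime) -> Optional[float]:
    """Project days until a volume is full, assuming linear growth.

    Assumption: usage keeps growing at the average rate observed between the
    two samples. Returns None when usage is flat or shrinking.
    """
    elapsed_days = (now - then).total_seconds() / 86400
    growth_per_day = (used_now - used_then) / elapsed_days
    if growth_per_day <= 0:
        return None  # no projected fill date
    return (capacity - used_now) / growth_per_day


# Hypothetical 100 GiB volume that grew from 50 GiB to 70 GiB over two days.
now = datetime(2024, 1, 3)
days = days_until_full(50.0, 70.0, 100.0, now - timedelta(days=2), now)
print(days)                           # 3.0
print(days is not None and days < 8)  # True: below the 8-day threshold
```

A linear model reacts slowly to sudden bursts of writes; a real forecast may weight recent samples differently.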

Change Log Monitors

Monitor Name |

Severity |

Monitor Description |

Internal Volume Discovered |

Informational |

This message occurs when an Internal Volume is discovered. |

Internal Volume Modified |

Informational |

This message occurs when an Internal Volume is modified. |

Storage Node Discovered |

Informational |

This message occurs when a Storage Node is discovered. |

Storage Node Removed |

Informational |

This message occurs when a Storage Node is removed. |

Storage Pool Discovered |

Informational |

This message occurs when a Storage Pool is discovered. |

Storage Virtual Machine Discovered |

Informational |

This message occurs when a Storage Virtual Machine is discovered. |

Storage Virtual Machine Modified |

Informational |

This message occurs when a Storage Virtual Machine is modified. |

Data Collection Monitors

Monitor Name |

Description |

Corrective Action |

Acquisition Unit Shutdown |

Data Infrastructure Insights Acquisition Units periodically restart as part of upgrades that introduce new features. This happens once a month or less in a typical environment. A Warning alert that an Acquisition Unit has shut down should be followed soon after by a Resolution noting that the newly restarted Acquisition Unit has completed registration with Data Infrastructure Insights. Typically this shutdown-to-registration cycle takes 5 to 15 minutes. |

If the alert occurs frequently or lasts longer than 15 minutes, check on the operation of the system hosting the Acquisition Unit, the network, and any proxy connecting the AU to the Internet. |

Collector Failed |

The poll of a data collector encountered an unexpected failure situation. |

Visit the data collector page in Data Infrastructure Insights to learn more about the situation. |

Collector Warning |

This alert typically arises from an erroneous configuration of the data collector or of the target system; revisit the configurations to prevent future alerts. It can also be caused by retrieval of less-than-complete data, where the data collector gathered all the data that it could. This can happen when situations change during data collection (e.g., a virtual machine present at the beginning of data collection is deleted during data collection and before its data is captured). |

Check the configuration of the data collector or target system. |
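The Acquisition Unit Shutdown guidance gives two rules of thumb: a shutdown-to-registration cycle normally completes within 15 minutes, and restarts happen about once a month or less. The Python sketch below encodes those rules as checks on alert timestamps. The helper names are hypothetical, and treating more than one shutdown in 30 days as "frequent" is an assumption derived from the once-a-month figure, not a product setting.

```python
from datetime import datetime, timedelta
from typing import Optional

# From the guidance above: a cycle usually takes 5 to 15 minutes.
MAX_CYCLE = timedelta(minutes=15)
# Assumption: "once a month or less" interpreted as a 30-day window.
TYPICAL_WINDOW = timedelta(days=30)


def cycle_too_long(shutdown_at: datetime, registered_at: Optional[datetime],
                   now: datetime) -> bool:
    """True if the AU took, or is still taking, longer than 15 minutes to re-register."""
    end = registered_at if registered_at is not None else now  # still waiting
    return end - shutdown_at > MAX_CYCLE


def shutdowns_too_frequent(shutdowns: list[datetime], now: datetime) -> bool:
    """True if more than one shutdown occurred within the last 30 days (hypothetical rule)."""
    recent = [t for t in shutdowns if now - t <= TYPICAL_WINDOW]
    return len(recent) > 1


now = datetime(2024, 5, 1, 12, 0)
print(cycle_too_long(now - timedelta(minutes=8), now, now))    # False
print(cycle_too_long(now - timedelta(minutes=20), None, now))  # True
print(shutdowns_too_frequent([now - timedelta(days=3), now - timedelta(days=10)], now))  # True
```

When either check returns True, the corrective action above applies: inspect the AU host, the network, and any proxy between the AU and the Internet.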

Security Monitors

Monitor Name |

Threshold |

Monitor Description |

Corrective Action |

AutoSupport HTTPS transport disabled |

Warning @ < 1 |

AutoSupport supports HTTPS, HTTP, and SMTP for transport protocols. Because of the sensitive nature of AutoSupport messages, NetApp strongly recommends using HTTPS as the default transport protocol for sending AutoSupport messages to NetApp support. |

To set HTTPS as the transport protocol for AutoSupport messages, run the following ONTAP command:…system node autosupport modify -transport https |

Cluster Insecure ciphers for SSH |

Warning @ < 1 |

Indicates that SSH is using insecure ciphers, for example ciphers ending in cbc (matching *cbc). |

To remove the CBC ciphers, run the following ONTAP command:…security ssh remove -vserver <admin vserver> -ciphers aes256-cbc,aes192-cbc,aes128-cbc,3des-cbc |

Cluster Login Banner Disabled |

Warning @ < 1 |

Indicates that the Login banner is disabled for users accessing the ONTAP system. Displaying a login banner is helpful for establishing expectations for access and use of the system. |

To configure the login banner for a cluster, run the following ONTAP command:…security login banner modify -vserver <admin svm> -message "Access restricted to authorized users" |

Cluster Peer Communication Not Encrypted |

Warning @ < 1 |

When replicating data for disaster recovery, caching, or backup, you must protect that data during transport over the wire from one ONTAP cluster to another. Encryption must be configured on both the source and destination clusters. |

To enable encryption on cluster peer relationships that were created prior to ONTAP 9.6, the source and destination clusters must be upgraded to 9.6. Then use the "cluster peer modify" command to change both the source and destination cluster peers to use Cluster Peering Encryption.…See the NetApp Security Hardening Guide for ONTAP 9 for details. |

Default Local Admin User Enabled |

Warning @ > 0 |

NetApp recommends locking (disabling) any unneeded default admin user (built-in) accounts with the lock command. These are primarily default accounts whose passwords were never updated or changed. |

To lock the built-in "admin" account, run the following ONTAP command:…security login lock -username admin |

FIPS Mode Disabled |

Warning @ < 1 |

When FIPS 140-2 compliance is enabled, TLSv1 and SSLv3 are disabled, and only TLSv1.1 and TLSv1.2 remain enabled. ONTAP prevents you from enabling TLSv1 and SSLv3 when FIPS 140-2 compliance is enabled. |

To enable FIPS 140-2 compliance on a cluster, run the following ONTAP command in advanced privilege mode:…security config modify -interface SSL -is-fips-enabled true |

Log Forwarding Not Encrypted |

Warning @ < 1 |

Offloading of syslog information is necessary for limiting the scope or footprint of a breach to a single system or solution. Therefore, NetApp recommends securely offloading syslog information to a secure storage or retention location. |

Once a log forwarding destination is created, its protocol cannot be changed. To change to an encrypted protocol, delete and recreate the log forwarding destination using the following ONTAP command:…cluster log-forwarding create -destination <destination ip> -protocol tcp-encrypted |

MD5 Hashed password |

Warning @ > 0 |

NetApp strongly recommends using the more secure SHA-512 hash function for ONTAP user account passwords. Accounts using the less secure MD5 hash function should migrate to the SHA-512 hash function. |

NetApp strongly recommends that user accounts migrate to the more secure SHA-512 solution by having users change their passwords.…To lock accounts with passwords that use the MD5 hash function, run the following ONTAP command:…security login lock -vserver * -username * -hash-function md5 |

No NTP servers are configured |

Warning @ < 1 |

Indicates that the cluster has no configured NTP servers. For redundancy and optimum service, NetApp recommends that you associate at least three NTP servers with the cluster. |

To associate an NTP server with the cluster, run the following ONTAP command:…cluster time-service ntp server create -server <ntp server host name or ip address> |

NTP server count is low |

Warning @ < 3 |

Indicates that the cluster has fewer than three configured NTP servers. For redundancy and optimum service, NetApp recommends that you associate at least three NTP servers with the cluster. |

To associate an NTP server with the cluster, run the following ONTAP command:…cluster time-service ntp server create -server <ntp server host name or ip address> |

Remote Shell Enabled |

Warning @ > 0 |

Remote Shell is not a secure method for establishing command-line access to the ONTAP solution. Remote Shell should be disabled for secure remote access. |

NetApp recommends Secure Shell (SSH) for secure remote access.…To disable Remote Shell on a cluster, run the following ONTAP command in advanced privilege mode:…security protocol modify -application rsh -enabled false |

Storage VM Audit Log Disabled |

Warning @ < 1 |

Indicates that audit logging is disabled for the SVM. |

To configure the Audit log for a vserver, run the following ONTAP command:…vserver audit enable -vserver <svm> |

Storage VM Insecure ciphers for SSH |

Warning @ < 1 |

Indicates that SSH is using insecure ciphers, for example ciphers ending in cbc (matching *cbc). |

To remove the CBC ciphers, run the following ONTAP command:…security ssh remove -vserver <vserver> -ciphers aes256-cbc,aes192-cbc,aes128-cbc,3des-cbc |

Storage VM Login banner disabled |

Warning @ < 1 |

Indicates that the Login banner is disabled for users accessing SVMs on the system. Displaying a login banner is helpful for establishing expectations for access and use of the system. |

To configure the login banner for an SVM, run the following ONTAP command:…security login banner modify -vserver <svm> -message "Access restricted to authorized users" |

Telnet Protocol Enabled |

Warning @ > 0 |

Telnet is not a secure method for establishing command-line access to the ONTAP solution. Telnet should be disabled for secure remote access. |

NetApp recommends Secure Shell (SSH) for secure remote access. |
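The two SSH cipher monitors flag ciphers matching *cbc, and their corrective action removes them with "security ssh remove". The Python sketch below filters a cipher list with that pattern and assembles the corresponding command string. The cipher list and the SVM name svm1 are hypothetical; in practice you would read the configured ciphers from the cluster and review the generated command before running it.

```python
from fnmatch import fnmatch


def insecure_ciphers(ciphers: list[str]) -> list[str]:
    """Return the ciphers that match the *cbc pattern named by the monitor."""
    return [c for c in ciphers if fnmatch(c, "*cbc")]


def removal_command(vserver: str, ciphers: list[str]) -> str:
    """Build the 'security ssh remove' command shown in the corrective action."""
    return f"security ssh remove -vserver {vserver} -ciphers {','.join(ciphers)}"


# Hypothetical cipher configuration for an SVM named svm1.
configured = ["aes256-ctr", "aes256-cbc", "aes128-gcm", "3des-cbc"]
bad = insecure_ciphers(configured)
print(bad)  # ['aes256-cbc', '3des-cbc']
print(removal_command("svm1", bad))
# security ssh remove -vserver svm1 -ciphers aes256-cbc,3des-cbc
```

Generating the list from the actual configuration avoids passing ciphers to the command that are not enabled on the SVM.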

Data Protection Monitors

Monitor Name |

Thresholds |

Monitor Description |

Corrective Action |

Insufficient Space for Lun Snapshot Copy |

(Filter contains_luns = Yes) Warning @ > 95 %…Critical @ > 100 % |

Storage capacity of a volume is necessary to store application and customer data. A portion of that space, called snapshot reserved space, is used to store snapshots, which allow data to be protected locally. The more new and updated data is stored in the ONTAP volume, the more snapshot capacity is used and the less snapshot storage capacity is available for future new or updated data. If the snapshot data capacity within a volume reaches the total snapshot reserve space, the customer may be unable to store new snapshot data, reducing the level of protection for the data in the LUNs in the volume. Monitoring the volume used snapshot capacity ensures data services continuity. |

Immediate Actions |

SnapMirror Relationship Lag |

Warning @ > 150%…Critical @ > 300% |

SnapMirror relationship lag is the difference between the snapshot timestamp and the time on the destination system. The lag_time_percent is the ratio of lag time to the SnapMirror Policy's schedule interval. If the lag time equals the schedule interval, the lag_time_percent will be 100%. If the SnapMirror policy does not have a schedule, lag_time_percent will not be calculated. |

Monitor SnapMirror status using the "snapmirror show" command. Check the SnapMirror transfer history using the "snapmirror show-history" command. |
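Both Data Protection monitors compare a percentage against strict "greater than" thresholds. The Python sketch below illustrates that arithmetic under stated assumptions: lag_time_percent follows the definition given in the SnapMirror Relationship Lag description (lag time divided by the policy's schedule interval), while the snapshot percentage is assumed to be used snapshot capacity divided by the snapshot reserve. All function names are hypothetical and do not correspond to any Data Infrastructure Insights API.

```python
from typing import Optional


def classify(percent: Optional[float], warning: float, critical: float) -> str:
    """Map a percentage to a severity using strict '>' comparisons, as in the thresholds."""
    if percent is None:
        return "NOT CALCULATED"
    if percent > critical:
        return "CRITICAL"
    if percent > warning:
        return "WARNING"
    return "OK"


def lag_time_percent(lag_seconds: float, schedule_interval_seconds: Optional[float]) -> Optional[float]:
    """Lag time as a percentage of the SnapMirror policy schedule interval."""
    if not schedule_interval_seconds:
        return None  # policy has no schedule, so the percentage is not calculated
    return 100.0 * lag_seconds / schedule_interval_seconds


def snapshot_reserve_used_percent(used_bytes: float, reserve_bytes: float) -> Optional[float]:
    """Assumed metric: used snapshot capacity as a percentage of the snapshot reserve."""
    if reserve_bytes <= 0:
        return None  # no reserve configured, percentage is undefined
    return 100.0 * used_bytes / reserve_bytes


# Hourly schedule (3600 s) lagging by 2 hours -> 200 %: above 150 %, not above 300 %
print(classify(lag_time_percent(7200, 3600), warning=150, critical=300))  # WARNING
# Snapshot reserve 97 % used: above 95 %, not above 100 %
print(classify(snapshot_reserve_used_percent(97, 100), warning=95, critical=100))  # WARNING
```

With an hourly schedule, a relationship lagging by exactly one hour reports 100%, which matches the description above and does not trigger the lag monitor.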

Cloud Volume (CVO) Monitors

Monitor Name |

CI Severity |

Monitor Description |

Corrective Action |

CVO Disk Out of Service |

INFO |

This event occurs when a disk is removed from service because it has been marked failed, is being sanitized, or has entered the Maintenance Center. |

None |

CVO Giveback of Storage Pool Failed |

CRITICAL |

This event occurs during the migration of an aggregate as part of a storage failover (SFO) giveback, when the destination node cannot reach the object stores. |

Perform the following corrective actions: |

CVO HA Interconnect Down |

WARNING |

The high-availability (HA) interconnect is down. There is a risk of service outage because failover is not available. |

Corrective actions depend on the number and type of HA interconnect links supported by the platform, as well as the reason why the interconnect is down. |

CVO Max Sessions Per User Exceeded |

WARNING |

You have exceeded the maximum number of sessions allowed per user over a TCP connection. Any request to establish a session will be denied until some sessions are released. |

Perform the following corrective actions: |

CVO NetBIOS Name Conflict |

CRITICAL |

The NetBIOS Name Service has received a negative response to a name registration request from a remote machine. This is typically caused by a conflict in the NetBIOS name or an alias. As a result, clients might not be able to access data or connect to the right data-serving node in the cluster. |

Perform any one of the following corrective actions: |

CVO NFSv4 Store Pool Exhausted |

CRITICAL |

An NFSv4 store pool has been exhausted. |

If the NFS server is unresponsive for more than 10 minutes after this event, contact NetApp technical support. |

CVO Node Panic |

WARNING |

This event is issued when a node panic occurs. |

Contact NetApp customer support. |

CVO Node Root Volume Space Low |

CRITICAL |

The system has detected that the root volume is dangerously low on space. The node is not fully operational. Data LIFs might have failed over within the cluster, so NFS and CIFS access is limited on the node. Administrative capability is limited to local recovery procedures for the node to clear up space on the root volume. |

Perform the following corrective actions: |

CVO Nonexistent Admin Share |

CRITICAL |

Vscan issue: a client has attempted to connect to a nonexistent ONTAP_ADMIN$ share. |

Ensure that Vscan is enabled for the mentioned SVM ID. Enabling Vscan on an SVM causes the ONTAP_ADMIN$ share to be created for the SVM automatically. |

CVO Object Store Host Unresolvable |

CRITICAL |

The object store server host name cannot be resolved to an IP address. The object store client cannot communicate with the object store server until the host name resolves to an IP address. As a result, data might be inaccessible. |

Check the DNS configuration to verify that the host name is configured correctly with an IP address. |

CVO Object Store Intercluster LIF Down |

CRITICAL |

The object store client cannot find an operational LIF to communicate with the object store server. The node will not allow object store client traffic until the intercluster LIF is operational. As a result, data might be inaccessible. |

Perform the following corrective actions: |

CVO Object Store Signature Mismatch |

CRITICAL |

The request signature sent to the object store server does not match the signature calculated by the client. As a result, data might be inaccessible. |

Verify that the secret access key is configured correctly. If it is configured correctly, contact NetApp technical support for assistance. |

CVO QoS Monitor Memory Maxed Out |

CRITICAL |

The QoS subsystem's dynamic memory has reached its limit for the current platform hardware. Some QoS features might operate in a limited capacity. |

Delete some active workloads or streams to free up memory. Use the "statistics show -object workload -counter ops" command to determine which workloads are active. Active workloads show non-zero ops. Then use the "workload delete <workload_name>" command multiple times to remove specific workloads. Alternatively, use the "stream delete -workload <workload name> *" command to delete the associated streams from the active workload. |

CVO READDIR Timeout |

CRITICAL |

A READDIR file operation has exceeded the time it is allowed to run in WAFL. This can be caused by very large or sparse directories. Corrective action is recommended. |

Perform the following corrective actions: |

CVO Relocation of Storage Pool Failed |

CRITICAL |

This event occurs during the relocation of an aggregate, when the destination node cannot reach the object stores. |

Perform the following corrective actions: |

CVO Shadow Copy Failed |

CRITICAL |

A Volume Shadow Copy Service (VSS) operation, part of the Microsoft Server backup and restore service, has failed. |

Check the following using the information provided in the event message: |

CVO Storage VM Stop Succeeded |

INFO |

This message occurs when a 'vserver stop' operation succeeds. |

Use the 'vserver start' command to resume data access on a storage VM. |

CVO Too Many CIFS Authentication |

WARNING |

Many authentication negotiations have occurred simultaneously. There are 256 incomplete new session requests from this client. |

Investigate why the client has created 256 or more new connection requests. You might have to contact the vendor of the client or of the application to determine why the error occurred. |

CVO Unassigned Disks |

INFO |

The system has unassigned disks. Capacity is being wasted, and the system might have a misconfiguration or a partially applied configuration change. |

Perform the following corrective actions: |

CVO Unauthorized User Access to Admin Share |

WARNING |

A client has attempted to connect to the privileged ONTAP_ADMIN$ share even though their logged-in user is not an allowed user. |

Perform the following corrective actions: |

CVO Virus Detected |

WARNING |

A Vscan server has reported an error to the storage system. This typically indicates that a virus has been found. However, other errors on the Vscan server can cause this event. |

Check the log of the Vscan server reported in the "syslog" event to see if it was able to successfully clean, quarantine, or delete the infected file. If it was not able to do so, a system administrator might have to manually delete the file. |

CVO Volume Offline |

INFO |

This message indicates that a volume has been taken offline. |

Bring the volume back online. |

CVO Volume Restricted |

INFO |

This event indicates that a flexible volume has been restricted. |

Bring the volume back online. |
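The CVO QoS Monitor Memory Maxed Out corrective action identifies active workloads (non-zero ops) before deleting some of them. A minimal Python sketch of that selection step follows, assuming the (workload, ops) pairs have already been extracted from the "statistics show -object workload -counter ops" output; the sketch does not parse that output, and the workload names and helper functions are hypothetical. Deciding which of the listed workloads to actually delete remains an administrator decision.

```python
# Hypothetical input: (workload name, ops) pairs already read from
# "statistics show -object workload -counter ops". No output parsing is done here.
def active_workloads(samples):
    """Return the names of workloads with non-zero ops, i.e. the active workloads."""
    return [name for name, ops in samples if ops > 0]


def delete_commands(names):
    """Build one 'workload delete' command per workload name."""
    return [f"workload delete {name}" for name in names]


samples = [("workload_a", 530), ("workload_b", 0), ("workload_c", 12)]
for cmd in delete_commands(active_workloads(samples)):
    print(cmd)
# workload delete workload_a
# workload delete workload_c
```

The sketch only prints candidate commands; review the list and run "workload delete" for the specific workloads you choose to remove.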

SnapMirror for Business Continuity (SMBC) Mediator Log Monitors

Monitor Name |

Severity |

Monitor Description |

Corrective Action |

ONTAP Mediator Added |

INFO |

This message occurs when ONTAP Mediator is added successfully on a cluster. |

None |

ONTAP Mediator Not Accessible |

CRITICAL |

This message occurs when either the ONTAP Mediator is repurposed or the Mediator package is no longer installed on the Mediator server. As a result, SnapMirror failover is not possible. |

Remove the configuration of the current ONTAP Mediator by using the "snapmirror mediator remove" command. Reconfigure access to the ONTAP Mediator by using the "snapmirror mediator add" command. |

ONTAP Mediator Removed |

INFO |

This message occurs when ONTAP Mediator is removed successfully from a cluster. |

None |

ONTAP Mediator Unreachable |

WARNING |

This message occurs when the ONTAP Mediator is unreachable on a cluster. As a result, SnapMirror failover is not possible. |

Check the network connectivity to the ONTAP Mediator by using the "network ping" and "network traceroute" commands. If the issue persists, remove the configuration of the current ONTAP Mediator by using the "snapmirror mediator remove" command. Reconfigure access to the ONTAP Mediator by using the "snapmirror mediator add" command. |

SMBC CA Certificate Expired |

CRITICAL |

This message occurs when the ONTAP Mediator certificate authority (CA) certificate has expired. As a result, further communication with the ONTAP Mediator is not possible. |

Remove the configuration of the current ONTAP Mediator by using the "snapmirror mediator remove" command. Install a new CA certificate on the ONTAP Mediator server. Reconfigure access to the ONTAP Mediator by using the "snapmirror mediator add" command. |

SMBC CA Certificate Expiring |

WARNING |

This message occurs when the ONTAP Mediator certificate authority (CA) certificate is due to expire within the next 30 days. |

Before this certificate expires, remove the configuration of the current ONTAP Mediator by using the "snapmirror mediator remove" command. Install a new CA certificate on the ONTAP Mediator server. Reconfigure access to the ONTAP Mediator by using the "snapmirror mediator add" command. |

SMBC Client Certificate Expired |

CRITICAL |

This message occurs when the ONTAP Mediator client certificate has expired. As a result, further communication with the ONTAP Mediator is not possible. |

Remove the configuration of the current ONTAP Mediator by using the "snapmirror mediator remove" command. Reconfigure access to the ONTAP Mediator by using the "snapmirror mediator add" command. |

SMBC Client Certificate Expiring |

WARNING |

This message occurs when the ONTAP Mediator client certificate is due to expire within the next 30 days. |

Before this certificate expires, remove the configuration of the current ONTAP Mediator by using the "snapmirror mediator remove" command. Reconfigure access to the ONTAP Mediator by using the "snapmirror mediator add" command. |

SMBC Relationship Out of Sync |

CRITICAL |

This message occurs when a SnapMirror for Business Continuity (SMBC) relationship changes status from "in-sync" to "out-of-sync". As a result, RPO=0 data protection is disrupted. |

Check the network connection between the source and destination volumes. Monitor the SMBC relationship status by using the "snapmirror show" command on the destination, and by using the "snapmirror list-destinations" command on the source. Auto-resync will attempt to bring the relationship back to "in-sync" status. If the resync fails, verify that all the nodes in the cluster are in quorum and are healthy. |

SMBC Server Certificate Expired |

CRITICAL |

This message occurs when the ONTAP Mediator server certificate has expired. As a result, further communication with the ONTAP Mediator is not possible. |

Remove the configuration of the current ONTAP Mediator by using the "snapmirror mediator remove" command. Install a new server certificate on the ONTAP Mediator server. Reconfigure access to the ONTAP Mediator by using the "snapmirror mediator add" command. |

SMBC Server Certificate Expiring |

WARNING |