Deployment Procedures


This document provides details for configuring a fully redundant, highly available FlexPod Express system. To reflect this redundancy, the components being configured in each step are referred to as either component A or component B. For example, controller A and controller B identify the two NetApp storage controllers that are provisioned in this document. Switch A and switch B identify a pair of Cisco Nexus switches. Fabric Interconnect A and Fabric Interconnect B are the two Integrated Nexus Fabric Interconnects.

In addition, this document describes steps for provisioning multiple Cisco UCS hosts, which are identified sequentially as server A, server B, and so on.

To indicate that you should include information pertinent to your environment in a step, <<text>> appears as part of the command structure. See the following example for the vlan create command:

Controller01>vlan create vif0 <<mgmt_vlan_id>>

This document enables you to fully configure the FlexPod Express environment. In this process, various steps require you to insert customer-specific naming conventions, IP addresses, and virtual local area network (VLAN) schemes. The table below describes the VLANs required for deployment, as outlined in this guide. This table can be completed based on the specific site variables and used to implement the document configuration steps.

If you use separate in-band and out-of-band management VLANs, you must create a layer 3 route between them. For this validation, a common management VLAN was used.

| VLAN name | VLAN purpose | ID used in validating this document |
|---|---|---|
| Management VLAN | VLAN for management interfaces | 18 |
| Native VLAN | VLAN to which untagged frames are assigned | 2 |
| NFS VLAN | VLAN for NFS traffic | 104 |
| VMware vMotion VLAN | VLAN designated for the movement of virtual machines (VMs) from one physical host to another | 103 |
| VM traffic VLAN | VLAN for VM application traffic | 102 |
| iSCSI-A-VLAN | VLAN for iSCSI traffic on fabric A | 124 |
| iSCSI-B-VLAN | VLAN for iSCSI traffic on fabric B | 125 |

The VLAN numbers are needed throughout the configuration of FlexPod Express. The VLANs are referred to as <<var_xxxx_vlan>>, where xxxx is the purpose of the VLAN (such as iSCSI-A).
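The placeholder convention above can be applied mechanically. The following is a minimal sketch, assuming a hypothetical `SITE_VARS` worksheet and a hypothetical `render` helper (neither is part of FlexPod tooling), that fills `<<name>>` placeholders and fails loudly when a value is missing. The IDs shown are the validation values from the VLAN table; replace them with your own.

```python
import re

# Hypothetical site worksheet: placeholder name -> value.
# The IDs below are the ones used in validating this document.
SITE_VARS = {
    "mgmt_vlan_id": "18",
    "native_vlan_id": "2",
    "nfs_vlan_id": "104",
}

# Matches <<name>>, tolerating stray spaces inside the brackets.
PLACEHOLDER = re.compile(r"<<\s*([^<>]+?)\s*>>")


def render(command, site_vars):
    """Replace every <<name>> placeholder; raise KeyError on unknown names."""
    def substitute(match):
        name = match.group(1)
        if name not in site_vars:
            raise KeyError(f"no value supplied for <<{name}>>")
        return site_vars[name]

    return PLACEHOLDER.sub(substitute, command)


print(render("vlan create vif0 <<mgmt_vlan_id>>", SITE_VARS))
```

Raising an error for an unknown placeholder, rather than leaving it in place, keeps a half-filled command from being pasted into a switch or controller console.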

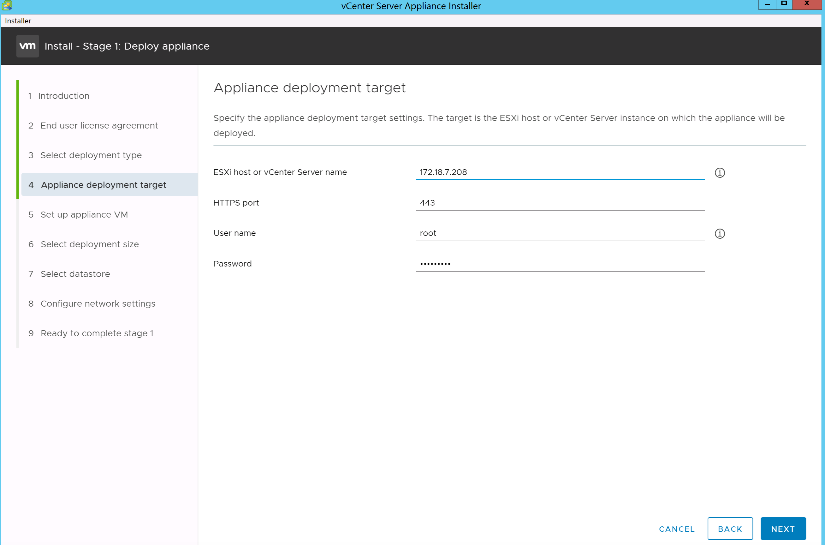

The following table lists the VMware VMs created.

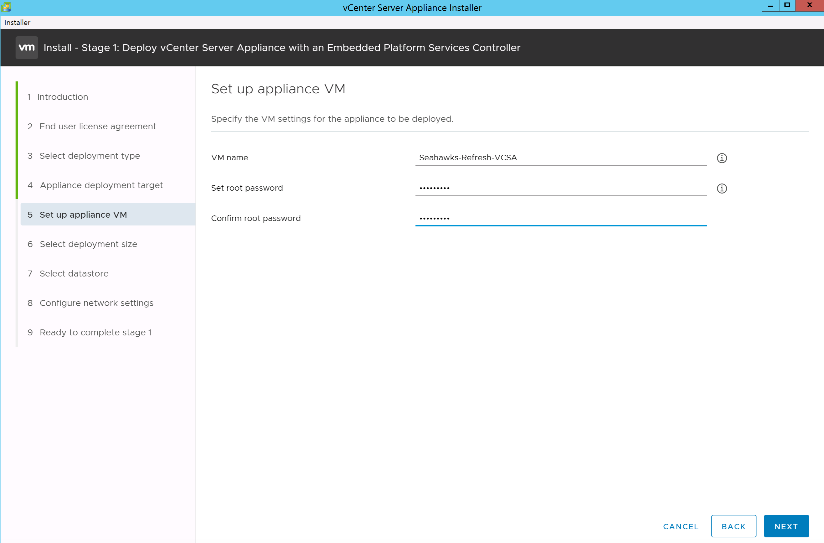

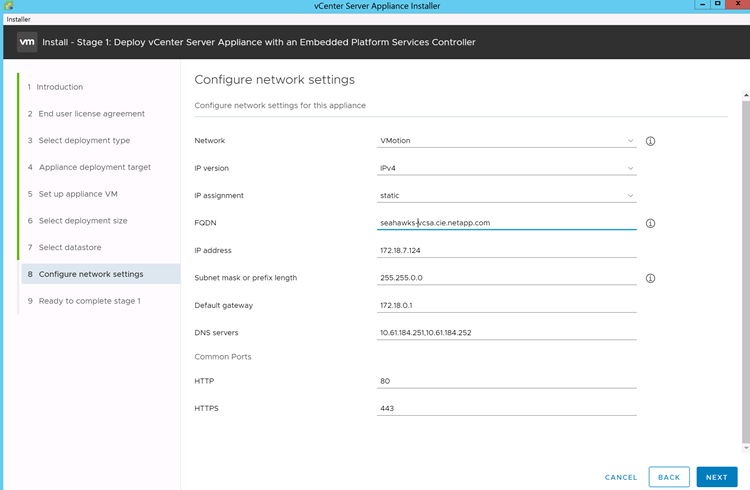

| VM Description | Host Name |
|---|---|
| VMware vCenter Server | Seahawks-vcsa.cie.netapp.com |

Cisco Nexus 31108PCV deployment procedure

This section details the Cisco Nexus 31108PCV switch configuration used in a FlexPod Express environment.

Initial setup of Cisco Nexus 31108PCV switch

This procedure describes how to configure the Cisco Nexus switches for use in a base FlexPod Express environment.

This procedure assumes that you are using a Cisco Nexus 31108PCV running NX-OS software release 7.0(3)I6(1).

1. Upon initial boot and connection to the console port of the switch, the Cisco NX-OS setup automatically starts. This initial configuration addresses basic settings, such as the switch name, the mgmt0 interface configuration, and Secure Shell (SSH) setup.

2. The FlexPod Express management network can be configured in multiple ways. The mgmt0 interfaces on the 31108PCV switches can be connected to an existing management network, or the mgmt0 interfaces of the 31108PCV switches can be connected in a back-to-back configuration. However, a back-to-back link cannot be used for external management access such as SSH traffic.

    In this deployment guide, the FlexPod Express Cisco Nexus 31108PCV switches are connected to an existing management network.

3. To configure the Cisco Nexus 31108PCV switches, power on the switch and follow the on-screen prompts, as illustrated here for the initial setup of both switches, substituting the appropriate values for the switch-specific information.

        This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.
        *Note: setup is mainly used for configuring the system initially, when no configuration is present. So setup always assumes system defaults and not the current system configuration values.
        Press Enter at anytime to skip a dialog. Use ctrl-c at anytime to skip the remaining dialogs.
        Would you like to enter the basic configuration dialog (yes/no): y
        Do you want to enforce secure password standard (yes/no) [y]: y
        Create another login account (yes/no) [n]: n
        Configure read-only SNMP community string (yes/no) [n]: n
        Configure read-write SNMP community string (yes/no) [n]: n
        Enter the switch name : 31108PCV-A
        Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: y
        Mgmt0 IPv4 address : <<var_switch_mgmt_ip>>
        Mgmt0 IPv4 netmask : <<var_switch_mgmt_netmask>>
        Configure the default gateway? (yes/no) [y]: y
        IPv4 address of the default gateway : <<var_switch_mgmt_gateway>>
        Configure advanced IP options? (yes/no) [n]: n
        Enable the telnet service? (yes/no) [n]: n
        Enable the ssh service? (yes/no) [y]: y
        Type of ssh key you would like to generate (dsa/rsa) [rsa]: rsa
        Number of rsa key bits <1024-2048> [1024]: <enter>
        Configure the ntp server? (yes/no) [n]: y
        NTP server IPv4 address : <<var_ntp_ip>>
        Configure default interface layer (L3/L2) [L2]: <enter>
        Configure default switchport interface state (shut/noshut) [noshut]: <enter>
        Configure CoPP system profile (strict/moderate/lenient/dense) [strict]: <enter>

4. A summary of your configuration is displayed and you are asked if you would like to edit the configuration. If your configuration is correct, enter n.

        Would you like to edit the configuration? (yes/no) [n]: no

5. You are then asked if you would like to use this configuration and save it. If so, enter y.

        Use this configuration and save it? (yes/no) [y]: Enter

6. Repeat steps 1 through 5 for Cisco Nexus switch B.

Enable advanced features

Certain advanced features must be enabled in Cisco NX-OS to provide additional configuration options.

1. To enable the appropriate features on Cisco Nexus switch A and switch B, enter configuration mode by using the command (config t) and run the following commands:

        feature interface-vlan
        feature lacp
        feature vpc

    The default port channel load-balancing hash uses the source and destination IP addresses to determine the load-balancing algorithm across the interfaces in the port channel. You can achieve better distribution across the members of the port channel by providing more inputs to the hash algorithm beyond the source and destination IP addresses. For the same reason, NetApp highly recommends adding the source and destination TCP ports to the hash algorithm.

2. From configuration mode (config t), run the following commands to set the global port channel load-balancing configuration on Cisco Nexus switch A and switch B:

        port-channel load-balance src-dst ip-l4port
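To illustrate why adding the TCP ports to the hash improves distribution, here is a toy model in Python. It is only a sketch: the XOR-and-modulo hash, the `pick_link` helper, and the IP addresses are assumptions made for illustration and do not reproduce the hash that NX-OS actually computes.

```python
import ipaddress


def pick_link(src_ip, dst_ip, src_port, dst_port, n_links, use_l4_ports):
    """Toy hash: XOR the selected header fields, then take modulo n_links."""
    key = int(ipaddress.ip_address(src_ip)) ^ int(ipaddress.ip_address(dst_ip))
    if use_l4_ports:
        key ^= src_port ^ dst_port
    return key % n_links


# Eight sessions between the same host and the same storage target.
# Only the source TCP port differs from one session to the next.
flows = [("192.168.124.11", "192.168.124.21", 49152 + i, 3260) for i in range(8)]

ip_only = {pick_link(*f, n_links=2, use_l4_ports=False) for f in flows}
ip_and_ports = {pick_link(*f, n_links=2, use_l4_ports=True) for f in flows}

print("member links used, IP hash only:     ", sorted(ip_only))
print("member links used, IP + L4 port hash:", sorted(ip_and_ports))
```

With IP addresses alone, every session between one host and one target produces the same key and therefore lands on the same member link. Once the ports are included, the varying source port spreads the sessions across both links.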

Perform global spanning-tree configuration

The Cisco Nexus platform uses a new protection feature called bridge assurance. Bridge assurance helps protect against a unidirectional link or other software failure with a device that continues to forward data traffic when it is no longer running the spanning-tree algorithm. Ports can be placed in one of several states, including network or edge, depending on the platform.

NetApp recommends setting bridge assurance so that all ports are considered to be network ports by default. This setting forces the network administrator to review the configuration of each port. It also reveals the most common configuration errors, such as unidentified edge ports or a neighbor that does not have the bridge assurance feature enabled. In addition, it is safer to have the spanning tree block many ports rather than too few, which allows the default port state to enhance the overall stability of the network.

Pay close attention to the spanning-tree state when adding servers, storage, and uplink switches, especially if they do not support bridge assurance. In such cases, you might need to change the port type to make the ports active.

The Bridge Protocol Data Unit (BPDU) guard is enabled on edge ports by default as another layer of protection. To prevent loops in the network, this feature shuts down the port if BPDUs from another switch are seen on this interface.

From configuration mode (config t), run the following commands to configure the default spanning-tree options, including the default port type and BPDU guard, on Cisco Nexus switch A and switch B:

    spanning-tree port type network default
    spanning-tree port type edge bpduguard default

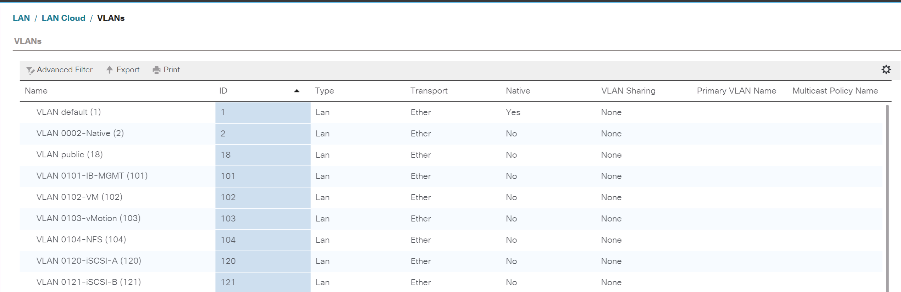

Define VLANs

Before individual ports with different VLANs are configured, the layer-2 VLANs must be defined on the switch. It is also a good practice to name the VLANs for easy troubleshooting in the future.

From configuration mode (config t), run the following commands to define and describe the layer 2 VLANs on Cisco Nexus switch A and switch B:

    vlan <<nfs_vlan_id>>
      name NFS-VLAN
    vlan <<iSCSI_A_vlan_id>>
      name iSCSI-A-VLAN
    vlan <<iSCSI_B_vlan_id>>
      name iSCSI-B-VLAN
    vlan <<vmotion_vlan_id>>
      name vMotion-VLAN
    vlan <<vmtraffic_vlan_id>>
      name VM-Traffic-VLAN
    vlan <<mgmt_vlan_id>>
      name MGMT-VLAN
    vlan <<native_vlan_id>>
      name NATIVE-VLAN
      exit
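The VLAN definitions above follow a regular pattern, so they can be generated from the site worksheet. The sketch below assumes a hypothetical `vlan_config` helper and uses the IDs from this document's validation. It also checks that IDs are unique and fall within the 802.1Q range of 1 to 4094; your platform may reserve part of that range, so treat the check as a first filter only.

```python
# VLAN names from the commands above mapped to the IDs used in validating
# this document; substitute your own site values.
VLANS = {
    "NFS-VLAN": 104,
    "iSCSI-A-VLAN": 124,
    "iSCSI-B-VLAN": 125,
    "vMotion-VLAN": 103,
    "VM-Traffic-VLAN": 102,
    "MGMT-VLAN": 18,
    "NATIVE-VLAN": 2,
}


def vlan_config(vlans):
    """Return the switch configuration lines that define and name each VLAN."""
    ids = list(vlans.values())
    if len(set(ids)) != len(ids):
        raise ValueError("two VLAN names share the same ID")
    lines = []
    for name, vlan_id in vlans.items():
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"{name}: VLAN ID {vlan_id} is outside 1-4094")
        lines.append(f"vlan {vlan_id}")
        lines.append(f"  name {name}")
    lines.append("exit")
    return lines


print("\n".join(vlan_config(VLANS)))
```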

Configure access and management port descriptions

As is the case with assigning names to the layer-2 VLANs, setting descriptions for all the interfaces can help with both provisioning and troubleshooting.

From configuration mode (config t) in each of the switches, enter the following port descriptions for the FlexPod Express large configuration:

Cisco Nexus switch A

    int eth1/1
      description AFF A220-A e0M
    int eth1/2
      description Cisco UCS FI-A mgmt0
    int eth1/3
      description Cisco UCS FI-A eth1/1
    int eth1/4
      description Cisco UCS FI-B eth1/1
    int eth1/13
      description vPC peer-link 31108PCV-B 1/13
    int eth1/14
      description vPC peer-link 31108PCV-B 1/14

Cisco Nexus switch B

    int eth1/1
      description AFF A220-B e0M
    int eth1/2
      description Cisco UCS FI-B mgmt0
    int eth1/3
      description Cisco UCS FI-A eth1/2
    int eth1/4
      description Cisco UCS FI-B eth1/2
    int eth1/13
      description vPC peer-link 31108PCV-A 1/13
    int eth1/14
      description vPC peer-link 31108PCV-A 1/14

Configure server and storage management interfaces

The management interfaces for both the server and the storage typically use only a single VLAN. Therefore, configure the management interface ports as access ports. Define the management VLAN for each switch and change the spanning-tree port type to edge.

From configuration mode (config t), run the following commands to configure the port settings for the management interfaces of both the servers and the storage:

Cisco Nexus switch A

    int eth1/1-2
      switchport mode access
      switchport access vlan <<mgmt_vlan>>
      spanning-tree port type edge
      speed 1000
      exit

Cisco Nexus switch B

    int eth1/1-2
      switchport mode access
      switchport access vlan <<mgmt_vlan>>
      spanning-tree port type edge
      speed 1000
      exit

Add NTP distribution interface

Cisco Nexus switch A

From the global configuration mode, execute the following commands.

    interface Vlan<ib-mgmt-vlan-id>
      ip address <switch-a-ntp-ip>/<ib-mgmt-vlan-netmask-length>
      no shutdown
      exit
    ntp peer <switch-b-ntp-ip> use-vrf default

Cisco Nexus switch B

From the global configuration mode, execute the following commands.

    interface Vlan<ib-mgmt-vlan-id>
      ip address <switch-b-ntp-ip>/<ib-mgmt-vlan-netmask-length>
      no shutdown
      exit
    ntp peer <switch-a-ntp-ip> use-vrf default

Perform virtual port channel global configuration

A virtual port channel (vPC) enables links that are physically connected to two different Cisco Nexus switches to appear as a single port channel to a third device. The third device can be a switch, server, or any other networking device. A vPC can provide layer-2 multipathing, which allows you to create redundancy by increasing bandwidth, enabling multiple parallel paths between nodes, and load-balancing traffic where alternative paths exist.

A vPC provides the following benefits:

- Enabling a single device to use a port channel across two upstream devices
- Eliminating spanning-tree protocol blocked ports
- Providing a loop-free topology
- Using all available uplink bandwidth
- Providing fast convergence if either the link or a device fails
- Providing link-level resiliency
- Helping provide high availability

The vPC feature requires some initial setup between the two Cisco Nexus switches to function properly. If you use the back-to-back mgmt0 configuration, use the addresses defined on the interfaces and verify that they can communicate by using the ping <<switch_A/B_mgmt0_ip_addr>> vrf management command.

From configuration mode (config t), run the following commands to configure the vPC global configuration for both switches:

Cisco Nexus switch A

    vpc domain 1
      role priority 10
      peer-keepalive destination <<switch_B_mgmt0_ip_addr>> source <<switch_A_mgmt0_ip_addr>> vrf management
      peer-gateway
      auto-recovery
      ip arp synchronize
    int eth1/13-14
      channel-group 10 mode active
    int Po10
      description vPC peer-link
      switchport
      switchport mode trunk
      switchport trunk native vlan <<native_vlan_id>>
      switchport trunk allowed vlan <<nfs_vlan_id>>,<<vmotion_vlan_id>>,<<vmtraffic_vlan_id>>,<<mgmt_vlan>>,<<iSCSI_A_vlan_id>>,<<iSCSI_B_vlan_id>>
      spanning-tree port type network
      vpc peer-link
      no shut
      exit
    int Po13
      description vPC ucs-FI-A
      switchport mode trunk
      switchport trunk native vlan <<native_vlan_id>>
      switchport trunk allowed vlan <<vmotion_vlan_id>>,<<vmtraffic_vlan_id>>,<<mgmt_vlan>>
      spanning-tree port type network
      mtu 9216
      vpc 13
      no shut
      exit
    int eth1/3
      channel-group 13 mode active
    int Po14
      description vPC ucs-FI-B
      switchport mode trunk
      switchport trunk native vlan <<native_vlan_id>>
      switchport trunk allowed vlan <<vmotion_vlan_id>>,<<vmtraffic_vlan_id>>,<<mgmt_vlan>>
      spanning-tree port type network
      mtu 9216
      vpc 14
      no shut
      exit
    int eth1/4
      channel-group 14 mode active
    copy run start

Cisco Nexus switch B

    vpc domain 1
      peer-switch
      role priority 20
      peer-keepalive destination <<switch_A_mgmt0_ip_addr>> source <<switch_B_mgmt0_ip_addr>> vrf management
      peer-gateway
      auto-recovery
      ip arp synchronize
    int eth1/13-14
      channel-group 10 mode active
    int Po10
      description vPC peer-link
      switchport
      switchport mode trunk
      switchport trunk native vlan <<native_vlan_id>>
      switchport trunk allowed vlan <<nfs_vlan_id>>,<<vmotion_vlan_id>>,<<vmtraffic_vlan_id>>,<<mgmt_vlan>>,<<iSCSI_A_vlan_id>>,<<iSCSI_B_vlan_id>>
      spanning-tree port type network
      vpc peer-link
      no shut
      exit
    int Po13
      description vPC ucs-FI-A
      switchport mode trunk
      switchport trunk native vlan <<native_vlan_id>>
      switchport trunk allowed vlan <<vmotion_vlan_id>>,<<vmtraffic_vlan_id>>,<<mgmt_vlan>>
      spanning-tree port type network
      mtu 9216
      vpc 13
      no shut
      exit
    int eth1/3
      channel-group 13 mode active
    int Po14
      description vPC ucs-FI-B
      switchport mode trunk
      switchport trunk native vlan <<native_vlan_id>>
      switchport trunk allowed vlan <<vmotion_vlan_id>>,<<vmtraffic_vlan_id>>,<<mgmt_vlan>>
      spanning-tree port type network
      mtu 9216
      vpc 14
      no shut
      exit
    int eth1/4
      channel-group 14 mode active
    copy run start

In this solution validation, a maximum transmission unit (MTU) of 9000 was used. However, based on application requirements, you can configure an appropriate value of MTU. It is important to set the same MTU value across the FlexPod solution. Incorrect MTU configurations between components result in packets being dropped.
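The MTU guidance above can be expressed as a simple consistency check. The sketch below is an illustration only: the `check_mtu` helper and the component names are hypothetical, and the rule that a switch port must carry at least the endpoint MTU is an assumption based on the port channels in this guide using 9216 while the validation used 9000 on the endpoints.

```python
def check_mtu(endpoint_mtus, switch_mtus):
    """Return a list of problems; an empty list means the MTUs are consistent."""
    problems = []
    distinct = set(endpoint_mtus.values())
    if len(distinct) > 1:
        problems.append(f"endpoints disagree on MTU: {sorted(distinct)}")
    largest = max(endpoint_mtus.values())
    for name, mtu in switch_mtus.items():
        if mtu < largest:
            problems.append(f"{name}: MTU {mtu} is below endpoint MTU {largest}")
    return problems


# Hypothetical inventory; the names are illustrative labels only.
endpoints = {"storage Infra_NFS domain": 9000, "host NFS interface": 9000}
switches = {"Po13": 9216, "Po14": 9216}

for problem in check_mtu(endpoints, switches) or ["MTU settings are consistent"]:
    print(problem)
```

A mismatch such as a host interface left at 1500 would be reported as a disagreement between endpoints, which is the condition the paragraph above warns results in dropped packets.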

Uplink into existing network infrastructure

Depending on the available network infrastructure, several methods and features can be used to uplink the FlexPod environment. If an existing Cisco Nexus environment is present, NetApp recommends using vPCs to uplink the Cisco Nexus 31108PCV switches included in the FlexPod environment into the infrastructure. The uplinks can be 10GbE uplinks for a 10GbE infrastructure solution or 1GbE for a 1GbE infrastructure solution if required. The previously described procedures can be used to create an uplink vPC to the existing environment. Make sure to run copy run start to save the configuration on each switch after the configuration is completed.

NetApp storage deployment procedure (part 1)

This section describes the NetApp AFF storage deployment procedure.

NetApp Storage Controller AFF2xx Series Installation

NetApp Hardware Universe

The NetApp Hardware Universe (HWU) application provides supported hardware and software components for any specific ONTAP version. It provides configuration information for all the NetApp storage appliances currently supported by ONTAP software. It also provides a table of component compatibilities.

Confirm that the hardware and software components that you would like to use are supported with the version of ONTAP that you plan to install:

- Access the HWU application to view the system configuration guides. Select the Compare Storage Systems tab to view the compatibility between different versions of the ONTAP software and the NetApp storage appliances with your desired specifications.
- Alternatively, to compare components by storage appliance, click Compare Storage Systems.

| Controller AFF2XX Series prerequisites |
|---|
| To plan the physical location of the storage systems, see the following sections: |

Storage controllers

Follow the physical installation procedures for the controllers in the AFF A220 Documentation.

NetApp ONTAP 9.5

Configuration worksheet

Before running the setup script, complete the configuration worksheet from the product manual. The configuration worksheet is available in the ONTAP 9.5 Software Setup Guide (available in the ONTAP 9 Documentation Center). The table below illustrates ONTAP 9.5 installation and configuration information.

This system is set up in a two-node switchless cluster configuration.

| Cluster Detail | Cluster Detail Value |
|---|---|
| Cluster node A IP address | <<var_nodeA_mgmt_ip>> |
| Cluster node A netmask | <<var_nodeA_mgmt_mask>> |
| Cluster node A gateway | <<var_nodeA_mgmt_gateway>> |
| Cluster node A name | <<var_nodeA>> |
| Cluster node B IP address | <<var_nodeB_mgmt_ip>> |
| Cluster node B netmask | <<var_nodeB_mgmt_mask>> |
| Cluster node B gateway | <<var_nodeB_mgmt_gateway>> |
| Cluster node B name | <<var_nodeB>> |
| ONTAP 9.5 URL | <<var_url_boot_software>> |
| Name for cluster | <<var_clustername>> |
| Cluster management IP address | <<var_clustermgmt_ip>> |
| Cluster management gateway | <<var_clustermgmt_gateway>> |
| Cluster management netmask | <<var_clustermgmt_mask>> |
| Domain name | <<var_domain_name>> |
| DNS server IP (you can enter more than one) | <<var_dns_server_ip>> |
| NTP server A IP | <<switch-a-ntp-ip>> |
| NTP server B IP | <<switch-b-ntp-ip>> |
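Before running the setup script, it can help to sanity-check the worksheet values. The following sketch uses Python's standard `ipaddress` module to confirm that each gateway lies in the same subnet as its interface address. The `same_subnet` helper and the addresses are hypothetical examples, not values from this validation.

```python
import ipaddress


def same_subnet(ip, netmask, gateway):
    """True if the gateway lies inside the subnet defined by ip and netmask."""
    network = ipaddress.ip_network(f"{ip}/{netmask}", strict=False)
    return ipaddress.ip_address(gateway) in network


# Hypothetical worksheet entries: (description, IP address, netmask, gateway).
worksheet = [
    ("Cluster node A", "192.168.18.31", "255.255.255.0", "192.168.18.1"),
    ("Cluster node B", "192.168.18.32", "255.255.255.0", "192.168.18.1"),
    ("Cluster management", "192.168.18.30", "255.255.255.0", "192.168.18.1"),
]

for label, ip, netmask, gateway in worksheet:
    status = "ok" if same_subnet(ip, netmask, gateway) else "gateway is outside the subnet"
    print(f"{label}: {status}")
```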

Configure node A

To configure node A, complete the following steps:

1. Connect to the storage system console port. You should see a Loader-A prompt. However, if the storage system is in a reboot loop, press Ctrl-C to exit the autoboot loop when you see this message:

        Starting AUTOBOOT press Ctrl-C to abort...

2. Allow the system to boot.

        autoboot

3. Press Ctrl-C to enter the Boot menu.

    If ONTAP 9.5 is not the version of software being booted, continue with the following steps to install new software. If ONTAP 9.5 is the version being booted, select option 8 and y to reboot the node. Then, continue with step 14.

4. To install new software, select option 7.

5. Enter y to perform an upgrade.

6. Select e0M for the network port you want to use for the download.

7. Enter y to reboot now.

8. Enter the IP address, netmask, and default gateway for e0M in their respective places.

        <<var_nodeA_mgmt_ip>> <<var_nodeA_mgmt_mask>> <<var_nodeA_mgmt_gateway>>

9. Enter the URL where the software can be found.

    This web server must be pingable.

        <<var_url_boot_software>>

10. Press Enter for the user name, indicating no user name.

11. Enter y to set the newly installed software as the default to be used for subsequent reboots.

12. Enter y to reboot the node.

    When installing new software, the system might perform firmware upgrades to the BIOS and adapter cards, causing reboots and possible stops at the Loader-A prompt. If these actions occur, the system might deviate from this procedure.

13. Press Ctrl-C to enter the Boot menu.

14. Select option 4 for Clean Configuration and Initialize All Disks.

15. Enter y to zero disks, reset config, and install a new file system.

16. Enter y to erase all the data on the disks.

    The initialization and creation of the root aggregate can take 90 minutes or more to complete, depending on the number and type of disks attached. When initialization is complete, the storage system reboots. Note that SSDs take considerably less time to initialize. You can continue with the node B configuration while the disks for node A are zeroing.

17. While node A is initializing, begin configuring node B.

Configure node B

To configure node B, complete the following steps:

1. Connect to the storage system console port. You should see a Loader-A prompt. However, if the storage system is in a reboot loop, press Ctrl-C to exit the autoboot loop when you see this message:

        Starting AUTOBOOT press Ctrl-C to abort...

2. Allow the system to boot.

        autoboot

3. Press Ctrl-C when prompted to enter the Boot menu.

    If ONTAP 9.5 is not the version of software being booted, continue with the following steps to install new software. If ONTAP 9.5 is the version being booted, select option 8 and y to reboot the node. Then, continue with step 14.

4. To install new software, select option 7.

5. Enter y to perform an upgrade.

6. Select e0M for the network port you want to use for the download.

7. Enter y to reboot now.

8. Enter the IP address, netmask, and default gateway for e0M in their respective places.

        <<var_nodeB_mgmt_ip>> <<var_nodeB_mgmt_mask>> <<var_nodeB_mgmt_gateway>>

9. Enter the URL where the software can be found.

    This web server must be pingable.

        <<var_url_boot_software>>

10. Press Enter for the user name, indicating no user name.

11. Enter y to set the newly installed software as the default to be used for subsequent reboots.

12. Enter y to reboot the node.

    When installing new software, the system might perform firmware upgrades to the BIOS and adapter cards, causing reboots and possible stops at the Loader-A prompt. If these actions occur, the system might deviate from this procedure.

13. Press Ctrl-C to enter the Boot menu.

14. Select option 4 for Clean Configuration and Initialize All Disks.

15. Enter y to zero disks, reset config, and install a new file system.

16. Enter y to erase all the data on the disks.

    The initialization and creation of the root aggregate can take 90 minutes or more to complete, depending on the number and type of disks attached. When initialization is complete, the storage system reboots. Note that SSDs take considerably less time to initialize.

Continuation of node A configuration and cluster configuration

From a console port program attached to the storage controller A (node A) console port, run the node setup script. This script appears when ONTAP 9.5 boots on the node for the first time.

The node and cluster setup procedure has changed slightly in ONTAP 9.5. The cluster setup wizard is now used to configure the first node in a cluster, and System Manager is used to configure the cluster.

1. Follow the prompts to set up node A.

        Welcome to the cluster setup wizard.
        You can enter the following commands at any time:
          "help" or "?" - if you want to have a question clarified,
          "back" - if you want to change previously answered questions, and
          "exit" or "quit" - if you want to quit the cluster setup wizard.
             Any changes you made before quitting will be saved.
        You can return to cluster setup at any time by typing "cluster setup".
        To accept a default or omit a question, do not enter a value.
        This system will send event messages and periodic reports to NetApp Technical Support. To disable this feature, enter
        autosupport modify -support disable
        within 24 hours.
        Enabling AutoSupport can significantly speed problem determination and resolution should a problem occur on your system.
        For further information on AutoSupport, see:
        http://support.netapp.com/autosupport/
        Type yes to confirm and continue {yes}: yes
        Enter the node management interface port [e0M]:
        Enter the node management interface IP address: <<var_nodeA_mgmt_ip>>
        Enter the node management interface netmask: <<var_nodeA_mgmt_mask>>
        Enter the node management interface default gateway: <<var_nodeA_mgmt_gateway>>
        A node management interface on port e0M with IP address <<var_nodeA_mgmt_ip>> has been created.
        Use your web browser to complete cluster setup by accessing
        https://<<var_nodeA_mgmt_ip>>
        Otherwise, press Enter to complete cluster setup using the command line interface:

2. Navigate to the IP address of the node's management interface.

    Cluster setup can also be performed by using the CLI. This document describes cluster setup using NetApp System Manager guided setup.

3. Click Guided Setup to configure the cluster.

4. Enter <<var_clustername>> for the cluster name and <<var_nodeA>> and <<var_nodeB>> for each of the nodes that you are configuring. Enter the password that you would like to use for the storage system. Select Switchless Cluster for the cluster type. Enter the cluster base license.

5. You can also enter feature licenses for Cluster, NFS, and iSCSI.

6. You see a status message stating the cluster is being created. This status message cycles through several statuses. This process takes several minutes.

7. Configure the network.

    a. Deselect the IP Address Range option.

    b. Enter <<var_clustermgmt_ip>> in the Cluster Management IP Address field, <<var_clustermgmt_mask>> in the Netmask field, and <<var_clustermgmt_gateway>> in the Gateway field. Use the … selector in the Port field to select e0M of node A.

    c. The node management IP for node A is already populated. Enter <<var_nodeB_mgmt_ip>> for node B.

    d. Enter <<var_domain_name>> in the DNS Domain Name field. Enter <<var_dns_server_ip>> in the DNS Server IP Address field.

        You can enter multiple DNS server IP addresses.

    e. Enter <<switch-a-ntp-ip>> in the Primary NTP Server field.

        You can also enter an alternate NTP server as <<switch-b-ntp-ip>>.

8. Configure the support information.

    a. If your environment requires a proxy to access AutoSupport, enter the URL in Proxy URL.

    b. Enter the SMTP mail host and email address for event notifications.

        You must, at a minimum, set up the event notification method before you can proceed. You can select any of the methods.

9. When the cluster configuration is reported as complete, click Manage Your Cluster to configure the storage.

Continuation of storage cluster configuration

After the configuration of the storage nodes and base cluster, you can continue with the configuration of the storage cluster.

Zero all spare disks

To zero all spare disks in the cluster, run the following command:

disk zerospares

Set on-board UTA2 ports personality

1. Verify the current mode and the current type of the ports by running the ucadmin show command.

        AFFA220-Clus::> ucadmin show
                                 Current  Current    Pending  Pending    Admin
        Node            Adapter  Mode     Type       Mode     Type       Status
        ------------    -------  -------  ---------  -------  ---------  -----------
        AFFA220-Clus-01 0c       cna      target     -        -          offline
        AFFA220-Clus-01 0d       cna      target     -        -          offline
        AFFA220-Clus-01 0e       cna      target     -        -          offline
        AFFA220-Clus-01 0f       cna      target     -        -          offline
        AFFA220-Clus-02 0c       cna      target     -        -          offline
        AFFA220-Clus-02 0d       cna      target     -        -          offline
        AFFA220-Clus-02 0e       cna      target     -        -          offline
        AFFA220-Clus-02 0f       cna      target     -        -          offline
        8 entries were displayed.

2. Verify that the current mode of the ports that are in use is cna and that the current type is set to target. If not, change the port personality by running the following command:

        ucadmin modify -node <home node of the port> -adapter <port name> -mode cna -type target

    The ports must be offline to run the previous command. To take a port offline, run the following command:

        network fcp adapter modify -node <home node of the port> -adapter <port name> -state down

    If you changed the port personality, you must reboot each node for the change to take effect.

Enable Cisco Discovery Protocol

To enable the Cisco Discovery Protocol (CDP) on the NetApp storage controllers, run the following command:

node run -node * options cdpd.enable on

Enable Link-layer Discovery Protocol on all Ethernet ports

Enable the exchange of Link-layer Discovery Protocol (LLDP) neighbor information between the storage and network switches by running the following command. This command enables LLDP on all ports of all nodes in the cluster.

node run * options lldp.enable on

Rename management logical interfaces

To rename the management logical interfaces (LIFs), complete the following steps:

1. Show the current management LIF names.

        network interface show -vserver <<clustername>>

2. Rename the cluster management LIF.

        network interface rename -vserver <<clustername>> -lif cluster_setup_cluster_mgmt_lif_1 -newname cluster_mgmt

3. Rename the node A management LIF.

        network interface rename -vserver <<clustername>> -lif cluster_setup_node_mgmt_lif_AFF A220_A_1 -newname AFF A220-01_mgmt1

Set auto-revert on cluster management

Set the auto-revert parameter on the cluster management interface.

    network interface modify -vserver <<clustername>> -lif cluster_mgmt -auto-revert true

Set up service processor network interface

To assign a static IPv4 address to the service processor on each node, run the following commands:

    system service-processor network modify -node <<var_nodeA>> -address-family IPv4 -enable true -dhcp none -ip-address <<var_nodeA_sp_ip>> -netmask <<var_nodeA_sp_mask>> -gateway <<var_nodeA_sp_gateway>>
    system service-processor network modify -node <<var_nodeB>> -address-family IPv4 -enable true -dhcp none -ip-address <<var_nodeB_sp_ip>> -netmask <<var_nodeB_sp_mask>> -gateway <<var_nodeB_sp_gateway>>

The service processor IP addresses should be in the same subnet as the node management IP addresses.

Enable storage failover in ONTAP

To confirm that storage failover is enabled, run the following commands in a failover pair:

1. Verify the status of storage failover.

        storage failover show

    Both <<var_nodeA>> and <<var_nodeB>> must be able to perform a takeover. Go to step 3 if the nodes can perform a takeover.

2. Enable failover on one of the two nodes.

        storage failover modify -node <<var_nodeA>> -enabled true

3. Verify the HA status of the two-node cluster.

    This step is not applicable for clusters with more than two nodes.

        cluster ha show

4. Go to step 6 if high availability is configured. If high availability is configured, you see the following message upon issuing the command:

        High Availability Configured: true

5. Enable HA mode only for the two-node cluster.

    Do not run this command for clusters with more than two nodes because it causes problems with failover.

        cluster ha modify -configured true
        Do you want to continue? {y|n}: y

6. Verify that hardware assist is correctly configured and, if needed, modify the partner IP address.

        storage failover hwassist show

    The message Keep Alive Status : Error: did not receive hwassist keep alive alerts from partner indicates that hardware assist is not configured. Run the following commands to configure hardware assist.

        storage failover modify -hwassist-partner-ip <<var_nodeB_mgmt_ip>> -node <<var_nodeA>>
        storage failover modify -hwassist-partner-ip <<var_nodeA_mgmt_ip>> -node <<var_nodeB>>

Create jumbo frame MTU broadcast domain in ONTAP

To create a data broadcast domain with an MTU of 9000, run the following commands:

    broadcast-domain create -broadcast-domain Infra_NFS -mtu 9000
    broadcast-domain create -broadcast-domain Infra_iSCSI-A -mtu 9000
    broadcast-domain create -broadcast-domain Infra_iSCSI-B -mtu 9000

Remove data ports from default broadcast domain

The 10GbE data ports are used for iSCSI/NFS traffic, and these ports should be removed from the default domain. Ports e0e and e0f are not used and should also be removed from the default domain.

To remove the ports from the broadcast domain, run the following command:

broadcast-domain remove-ports -broadcast-domain Default -ports <<var_nodeA>>:e0c,<<var_nodeA>>:e0d,<<var_nodeA>>:e0e,<<var_nodeA>>:e0f,<<var_nodeB>>:e0c,<<var_nodeB>>:e0d,<<var_nodeB>>:e0e,<<var_nodeB>>:e0f
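Long node:port lists are easy to mistype, for example by repeating one node's ports where the other node's ports belong. A small helper can build the list from the node names and port names instead. This is a sketch only; the helper name is hypothetical, and the node placeholders are the ones used throughout this guide.

```python
def port_list(nodes, ports):
    """Build a comma-separated node:port list covering every port on every node."""
    return ",".join(f"{node}:{port}" for node in nodes for port in ports)


nodes = ["<<var_nodeA>>", "<<var_nodeB>>"]
ports = ["e0c", "e0d", "e0e", "e0f"]

# Assemble the remove-ports command from the generated list.
command = (
    "broadcast-domain remove-ports -broadcast-domain Default -ports "
    + port_list(nodes, ports)
)
print(command)
```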

Disable flow control on UTA2 ports

It is a NetApp best practice to disable flow control on all UTA2 ports that are connected to external devices. To disable flow control, run the following commands:

net port modify -node <<var_nodeA>> -port e0c -flowcontrol-admin none

Warning: Changing the network port settings will cause a several second interruption in carrier. Do you want to continue? {y|n}: y

net port modify -node <<var_nodeA>> -port e0d -flowcontrol-admin none

Warning: Changing the network port settings will cause a several second interruption in carrier. Do you want to continue? {y|n}: y

net port modify -node <<var_nodeA>> -port e0e -flowcontrol-admin none

Warning: Changing the network port settings will cause a several second interruption in carrier. Do you want to continue? {y|n}: y

net port modify -node <<var_nodeA>> -port e0f -flowcontrol-admin none

Warning: Changing the network port settings will cause a several second interruption in carrier. Do you want to continue? {y|n}: y

net port modify -node <<var_nodeB>> -port e0c -flowcontrol-admin none

Warning: Changing the network port settings will cause a several second interruption in carrier. Do you want to continue? {y|n}: y

net port modify -node <<var_nodeB>> -port e0d -flowcontrol-admin none

Warning: Changing the network port settings will cause a several second interruption in carrier. Do you want to continue? {y|n}: y

net port modify -node <<var_nodeB>> -port e0e -flowcontrol-admin none

Warning: Changing the network port settings will cause a several second interruption in carrier. Do you want to continue? {y|n}: y

net port modify -node <<var_nodeB>> -port e0f -flowcontrol-admin none

Warning: Changing the network port settings will cause a several second interruption in carrier. Do you want to continue? {y|n}: y

|

The Cisco UCS Mini direct connection to ONTAP does not support LACP. |

Configure jumbo frames in NetApp ONTAP

To configure an ONTAP network port to use jumbo frames (which usually have an MTU of 9,000 bytes), run the following commands from the cluster shell:

AFF A220::> network port modify -node node_A -port e0e -mtu 9000

Warning: This command will cause a several second interruption of service on this network port.

Do you want to continue? {y|n}: y

AFF A220::> network port modify -node node_B -port e0e -mtu 9000

Warning: This command will cause a several second interruption of service on this network port.

Do you want to continue? {y|n}: y

AFF A220::> network port modify -node node_A -port e0f -mtu 9000

Warning: This command will cause a several second interruption of service on this network port.

Do you want to continue? {y|n}: y

AFF A220::> network port modify -node node_B -port e0f -mtu 9000

Warning: This command will cause a several second interruption of service on this network port.

Do you want to continue? {y|n}: y

Create VLANs in ONTAP

To create VLANs in ONTAP, complete the following steps:

-

Create NFS VLAN ports and add them to the data broadcast domain.

network port vlan create -node <<var_nodeA>> -vlan-name e0e-<<var_nfs_vlan_id>>

network port vlan create -node <<var_nodeA>> -vlan-name e0f-<<var_nfs_vlan_id>>

network port vlan create -node <<var_nodeB>> -vlan-name e0e-<<var_nfs_vlan_id>>

network port vlan create -node <<var_nodeB>> -vlan-name e0f-<<var_nfs_vlan_id>>

broadcast-domain add-ports -broadcast-domain Infra_NFS -ports <<var_nodeA>>:e0e-<<var_nfs_vlan_id>>,<<var_nodeB>>:e0e-<<var_nfs_vlan_id>>,<<var_nodeA>>:e0f-<<var_nfs_vlan_id>>,<<var_nodeB>>:e0f-<<var_nfs_vlan_id>>

-

Create iSCSI VLAN ports and add them to the data broadcast domain.

network port vlan create -node <<var_nodeA>> -vlan-name e0e-<<var_iscsi_vlan_A_id>>

network port vlan create -node <<var_nodeA>> -vlan-name e0f-<<var_iscsi_vlan_B_id>>

network port vlan create -node <<var_nodeB>> -vlan-name e0e-<<var_iscsi_vlan_A_id>>

network port vlan create -node <<var_nodeB>> -vlan-name e0f-<<var_iscsi_vlan_B_id>>

broadcast-domain add-ports -broadcast-domain Infra_iSCSI-A -ports <<var_nodeA>>:e0e-<<var_iscsi_vlan_A_id>>,<<var_nodeB>>:e0e-<<var_iscsi_vlan_A_id>>

broadcast-domain add-ports -broadcast-domain Infra_iSCSI-B -ports <<var_nodeA>>:e0f-<<var_iscsi_vlan_B_id>>,<<var_nodeB>>:e0f-<<var_iscsi_vlan_B_id>>

-

Create MGMT-VLAN ports.

network port vlan create -node <<var_nodeA>> -vlan-name e0M-<<mgmt_vlan_id>>

network port vlan create -node <<var_nodeB>> -vlan-name e0M-<<mgmt_vlan_id>>
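The VLAN port names in the steps above follow the pattern base port, hyphen, VLAN ID. The sketch below derives those names and the matching add-ports command from a VLAN ID. The helper names and node names are hypothetical; the VLAN ID 104 is the NFS VLAN used in validating this document.

```python
def vlan_port(base_port: str, vlan_id: int) -> str:
    """Return the VLAN port name, for example e0e-104."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"VLAN ID out of range: {vlan_id}")
    return f"{base_port}-{vlan_id}"


def add_ports_command(domain, nodes, base_ports, vlan_id):
    """Build the broadcast-domain add-ports command for one VLAN."""
    members = ",".join(
        f"{node}:{vlan_port(port, vlan_id)}"
        for port in base_ports
        for node in nodes
    )
    return f"broadcast-domain add-ports -broadcast-domain {domain} -ports {members}"


print(add_ports_command("Infra_NFS", ["nodeA", "nodeB"], ["e0e", "e0f"], 104))
```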

Create aggregates in ONTAP

An aggregate containing the root volume is created during the ONTAP setup process. To create additional aggregates, determine the aggregate name, the node on which to create it, and the number of disks it contains.

To create aggregates, run the following commands:

aggr create -aggregate aggr1_nodeA -node <<var_nodeA>> -diskcount <<var_num_disks>>

aggr create -aggregate aggr1_nodeB -node <<var_nodeB>> -diskcount <<var_num_disks>>

Retain at least one disk (select the largest disk) in the configuration as a spare. A best practice is to have at least one spare for each disk type and size.

Start with five disks; you can add disks to an aggregate when additional storage is required.

The aggregate cannot be created until disk zeroing completes. Run the aggr show command to display the aggregate creation status. Do not proceed until aggr1_nodeA is online.

Configure time zone in ONTAP

To configure time synchronization and to set the time zone on the cluster, run the following command:

timezone <<var_timezone>>

|

For example, in the eastern United States, the time zone is America/New_York. After you begin typing the time zone name, press the Tab key to see available options.

|

Configure SNMP in ONTAP

To configure the SNMP, complete the following steps:

-

Configure SNMP basic information, such as the location and contact. When polled, this information is visible as the sysLocation and sysContact variables in SNMP.

snmp contact <<var_snmp_contact>>

snmp location "<<var_snmp_location>>"

snmp init 1

options snmp.enable on

-

Configure SNMP traps to send to remote hosts.

snmp traphost add <<var_snmp_server_fqdn>>

Configure SNMPv1 in ONTAP

To configure SNMPv1, set the shared-secret plain-text password, which is called a community.

snmp community add ro <<var_snmp_community>>

|

Use the snmp community delete all command with caution. If community strings are used for other monitoring products, this command removes them.

|

Configure SNMPv3 in ONTAP

SNMPv3 requires that you define and configure a user for authentication. To configure SNMPv3, complete the following steps:

-

Run the security snmpusers command to view the engine ID.

-

Create a user called snmpv3user.

security login create -username snmpv3user -authmethod usm -application snmp

-

Enter the authoritative entity's engine ID and select md5 as the authentication protocol.

-

Enter an eight-character minimum-length password for the authentication protocol when prompted.

-

Select des as the privacy protocol.

-

Enter an eight-character minimum-length password for the privacy protocol when prompted.

Configure AutoSupport HTTPS in ONTAP

The NetApp AutoSupport tool sends support summary information to NetApp through HTTPS. To configure AutoSupport, run the following command:

system node autosupport modify -node * -state enable -mail-hosts <<var_mailhost>> -transport https -support enable -noteto <<var_storage_admin_email>>

Create a storage virtual machine

To create an infrastructure storage virtual machine (SVM), complete the following steps:

-

Run the vserver create command.

vserver create -vserver Infra-SVM -rootvolume rootvol -aggregate aggr1_nodeA -rootvolume-security-style unix

-

Add the data aggregate to the infra-SVM aggregate list for the NetApp VSC.

vserver modify -vserver Infra-SVM -aggr-list aggr1_nodeA,aggr1_nodeB

-

Remove the unused storage protocols from the SVM, leaving NFS and iSCSI.

vserver remove-protocols -vserver Infra-SVM -protocols cifs,ndmp,fcp

-

Enable and run the NFS protocol in the infra-SVM SVM.

nfs create -vserver Infra-SVM -udp disabled

-

Turn on the SVM vstorage parameter for the NetApp NFS VAAI plug-in. Then, verify that NFS has been configured.

vserver nfs modify -vserver Infra-SVM -vstorage enabled

vserver nfs show

Commands are prefaced by vserver in the command line because SVMs were previously called Vservers.

Configure NFSv3 in ONTAP

The table below lists the information needed to complete this configuration.

| Detail | Detail Value |
|---|---|
| ESXi host A NFS IP address | <<var_esxi_hostA_nfs_ip>> |
| ESXi host B NFS IP address | <<var_esxi_hostB_nfs_ip>> |

To configure NFS on the SVM, run the following commands:

-

Create a rule for each ESXi host in the default export policy.

-

For each ESXi host being created, assign a rule. Each host has its own rule index. Your first ESXi host has rule index 1, your second ESXi host has rule index 2, and so on.

vserver export-policy rule create -vserver Infra-SVM -policyname default -ruleindex 1 -protocol nfs -clientmatch <<var_esxi_hostA_nfs_ip>> -rorule sys -rwrule sys -superuser sys -allow-suid false

vserver export-policy rule create -vserver Infra-SVM -policyname default -ruleindex 2 -protocol nfs -clientmatch <<var_esxi_hostB_nfs_ip>> -rorule sys -rwrule sys -superuser sys -allow-suid false

vserver export-policy rule show

-

Assign the export policy to the infrastructure SVM root volume.

volume modify -vserver Infra-SVM -volume rootvol -policy default

The NetApp VSC automatically handles export policies if you choose to install it after vSphere has been set up. If you do not install it, you must create export policy rules when additional Cisco UCS B-Series servers are added.
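When export policy rules are maintained by hand, the one-rule-per-host indexing described above can be generated rather than typed. The following sketch numbers the rules from 1 in host order; the function name and sample IP addresses are hypothetical, while the command options are the ones shown in this section.

```python
def export_rules(svm, host_ips, policy="default"):
    """Return one export-policy rule command per ESXi host, indexed from 1."""
    return [
        f"vserver export-policy rule create -vserver {svm} -policyname {policy} "
        f"-ruleindex {index} -protocol nfs -clientmatch {ip} "
        "-rorule sys -rwrule sys -superuser sys -allow-suid false"
        for index, ip in enumerate(host_ips, start=1)
    ]


# Hypothetical NFS addresses for two ESXi hosts.
for rule in export_rules("Infra-SVM", ["192.168.104.31", "192.168.104.32"]):
    print(rule)
```

Adding a server later then only requires appending its NFS IP address to the list.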

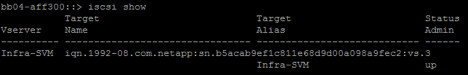

Create iSCSI service in ONTAP

To create the iSCSI service, complete the following step:

-

Create the iSCSI service on the SVM. This command also starts the iSCSI service and sets the iSCSI Qualified Name (IQN) for the SVM. Verify that iSCSI has been configured.

iscsi create -vserver Infra-SVM

iscsi show

Create load-sharing mirror of SVM root volume in ONTAP

To create a load-sharing mirror of the SVM root volume in ONTAP, complete the following steps:

-

Create a volume to be the load-sharing mirror of the infrastructure SVM root volume on each node.

volume create -vserver Infra-SVM -volume rootvol_m01 -aggregate aggr1_nodeA -size 1GB -type DP

volume create -vserver Infra-SVM -volume rootvol_m02 -aggregate aggr1_nodeB -size 1GB -type DP

-

Create a job schedule to update the root volume mirror relationships every 15 minutes.

job schedule interval create -name 15min -minutes 15

-

Create the mirroring relationships.

snapmirror create -source-path Infra-SVM:rootvol -destination-path Infra-SVM:rootvol_m01 -type LS -schedule 15min

snapmirror create -source-path Infra-SVM:rootvol -destination-path Infra-SVM:rootvol_m02 -type LS -schedule 15min

-

Initialize the mirroring relationship and verify that it has been created.

snapmirror initialize-ls-set -source-path Infra-SVM:rootvol

snapmirror show

Configure HTTPS access in ONTAP

To configure secure access to the storage controller, complete the following steps:

-

Increase the privilege level to access the certificate commands.

set -privilege diag

Do you want to continue? {y|n}: y

-

Generally, a self-signed certificate is already in place. Verify the certificate by running the following command:

security certificate show

-

For each SVM shown, the certificate common name should match the DNS fully qualified domain name (FQDN) of the SVM. The four default certificates should be deleted and replaced by either self-signed certificates or certificates from a certificate authority.

Deleting expired certificates before creating certificates is a best practice. Run the security certificate delete command to delete expired certificates. In the following command, use TAB completion to select and delete each default certificate.

security certificate delete [TAB] ...

Example: security certificate delete -vserver Infra-SVM -common-name Infra-SVM -ca Infra-SVM -type server -serial 552429A6

-

To generate and install self-signed certificates, run the following commands as one-time commands. Generate a server certificate for the infra-SVM and the cluster SVM. Again, use TAB completion to aid in completing these commands.

security certificate create [TAB] ...

Example: security certificate create -common-name infra-svm.netapp.com -type server -size 2048 -country US -state "North Carolina" -locality "RTP" -organization "NetApp" -unit "FlexPod" -email-addr "abc@netapp.com" -expire-days 365 -protocol SSL -hash-function SHA256 -vserver Infra-SVM

-

To obtain the values for the parameters required in the following step, run the security certificate show command.

-

Enable each certificate that was just created using the -server-enabled true and -client-enabled false parameters. Again, use TAB completion.

security ssl modify [TAB] ...

Example: security ssl modify -vserver Infra-SVM -server-enabled true -client-enabled false -ca infra-svm.netapp.com -serial 55243646 -common-name infra-svm.netapp.com

-

Configure and enable SSL and HTTPS access and disable HTTP access.

system services web modify -external true -sslv3-enabled true

Warning: Modifying the cluster configuration will cause pending web service requests to be interrupted as the web servers are restarted. Do you want to continue {y|n}: y

system services firewall policy delete -policy mgmt -service http -vserver <<var_clustername>>

It is normal for some of these commands to return an error message stating that the entry does not exist.

-

Revert to the admin privilege level and create the setup to allow SVM to be available by the web.

set -privilege admin

vserver services web modify -name spi|ontapi|compat -vserver * -enabled true

Create a NetApp FlexVol volume in ONTAP

To create a NetApp FlexVol® volume, enter the volume name, size, and the aggregate on which it exists. Create two VMware datastore volumes and a server boot volume.

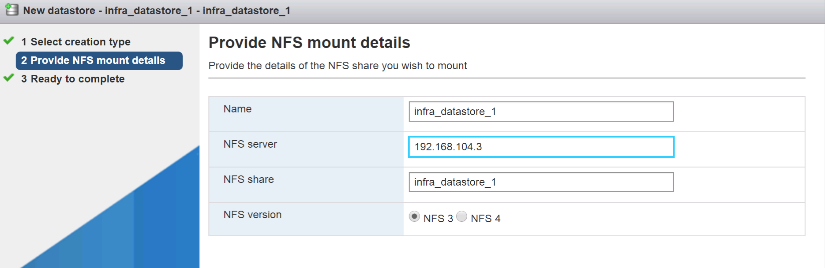

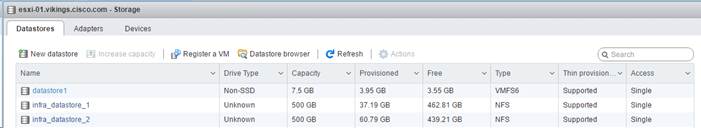

volume create -vserver Infra-SVM -volume infra_datastore_1 -aggregate aggr1_nodeA -size 500GB -state online -policy default -junction-path /infra_datastore_1 -space-guarantee none -percent-snapshot-space 0

volume create -vserver Infra-SVM -volume infra_datastore_2 -aggregate aggr1_nodeB -size 500GB -state online -policy default -junction-path /infra_datastore_2 -space-guarantee none -percent-snapshot-space 0

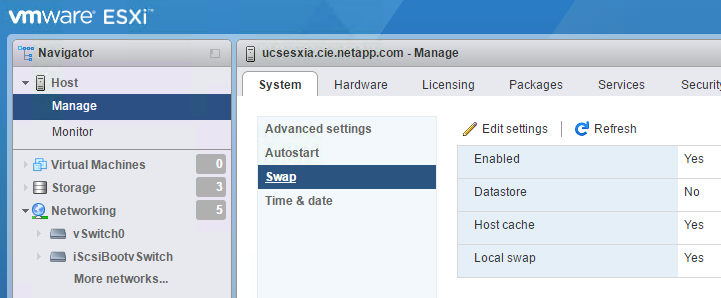

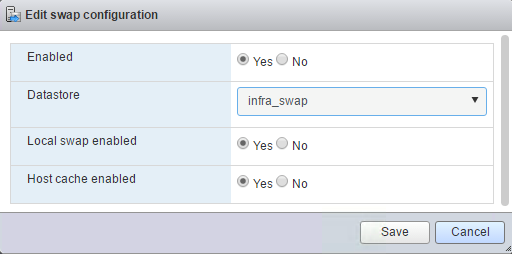

volume create -vserver Infra-SVM -volume infra_swap -aggregate aggr1_nodeA -size 100GB -state online -policy default -junction-path /infra_swap -space-guarantee none -percent-snapshot-space 0 -snapshot-policy none

volume create -vserver Infra-SVM -volume esxi_boot -aggregate aggr1_nodeA -size 100GB -state online -policy default -space-guarantee none -percent-snapshot-space 0

Enable deduplication in ONTAP

To enable deduplication on appropriate volumes once a day, run the following commands:

volume efficiency modify -vserver Infra-SVM -volume esxi_boot -schedule sun-sat@0

volume efficiency modify -vserver Infra-SVM -volume infra_datastore_1 -schedule sun-sat@0

volume efficiency modify -vserver Infra-SVM -volume infra_datastore_2 -schedule sun-sat@0

Create LUNs in ONTAP

To create two boot logical unit numbers (LUNs), run the following commands:

lun create -vserver Infra-SVM -volume esxi_boot -lun VM-Host-Infra-A -size 15GB -ostype vmware -space-reserve disabled

lun create -vserver Infra-SVM -volume esxi_boot -lun VM-Host-Infra-B -size 15GB -ostype vmware -space-reserve disabled

|

When adding an extra Cisco UCS C-Series server, an extra boot LUN must be created. |
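As a rough planning aid, the number of boot LUNs that fit in the esxi_boot volume can be estimated by integer division. This sketch deliberately ignores thin provisioning, storage efficiency, and metadata overhead, so treat the result as a conservative guide rather than a hard limit; the function name is hypothetical.

```python
def max_boot_luns(volume_gb: int, lun_gb: int) -> int:
    """Estimate how many fully allocated LUNs of lun_gb fit in volume_gb."""
    if lun_gb <= 0:
        raise ValueError("LUN size must be positive")
    return volume_gb // lun_gb


# The 100GB esxi_boot volume and 15GB boot LUNs used in this guide.
print(max_boot_luns(100, 15))  # prints 6
```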

Create iSCSI LIFs in ONTAP

The table below lists the information needed to complete this configuration.

| Detail | Detail Value |
|---|---|
| Storage node A iSCSI LIF01A | <<var_nodeA_iscsi_lif01a_ip>> |
| Storage node A iSCSI LIF01A network mask | <<var_nodeA_iscsi_lif01a_mask>> |
| Storage node A iSCSI LIF01B | <<var_nodeA_iscsi_lif01b_ip>> |
| Storage node A iSCSI LIF01B network mask | <<var_nodeA_iscsi_lif01b_mask>> |
| Storage node B iSCSI LIF01A | <<var_nodeB_iscsi_lif01a_ip>> |
| Storage node B iSCSI LIF01A network mask | <<var_nodeB_iscsi_lif01a_mask>> |
| Storage node B iSCSI LIF01B | <<var_nodeB_iscsi_lif01b_ip>> |
| Storage node B iSCSI LIF01B network mask | <<var_nodeB_iscsi_lif01b_mask>> |

-

Create four iSCSI LIFs, two on each node.

network interface create -vserver Infra-SVM -lif iscsi_lif01a -role data -data-protocol iscsi -home-node <<var_nodeA>> -home-port e0e-<<var_iscsi_vlan_A_id>> -address <<var_nodeA_iscsi_lif01a_ip>> -netmask <<var_nodeA_iscsi_lif01a_mask>> -status-admin up -failover-policy disabled -firewall-policy data -auto-revert false

network interface create -vserver Infra-SVM -lif iscsi_lif01b -role data -data-protocol iscsi -home-node <<var_nodeA>> -home-port e0f-<<var_iscsi_vlan_B_id>> -address <<var_nodeA_iscsi_lif01b_ip>> -netmask <<var_nodeA_iscsi_lif01b_mask>> -status-admin up -failover-policy disabled -firewall-policy data -auto-revert false

network interface create -vserver Infra-SVM -lif iscsi_lif02a -role data -data-protocol iscsi -home-node <<var_nodeB>> -home-port e0e-<<var_iscsi_vlan_A_id>> -address <<var_nodeB_iscsi_lif01a_ip>> -netmask <<var_nodeB_iscsi_lif01a_mask>> -status-admin up -failover-policy disabled -firewall-policy data -auto-revert false

network interface create -vserver Infra-SVM -lif iscsi_lif02b -role data -data-protocol iscsi -home-node <<var_nodeB>> -home-port e0f-<<var_iscsi_vlan_B_id>> -address <<var_nodeB_iscsi_lif01b_ip>> -netmask <<var_nodeB_iscsi_lif01b_mask>> -status-admin up -failover-policy disabled -firewall-policy data -auto-revert false

network interface show
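The four iSCSI LIFs follow a regular layout: each node gets one LIF on the fabric A VLAN port and one on the fabric B VLAN port. The sketch below reproduces that naming and placement so the plan can be reviewed before the commands are run. The function name is hypothetical; the LIF names, ports, and placeholders match the commands above.

```python
def iscsi_lif_plan(nodes, fabrics):
    """Return (lif_name, home_node, home_port) tuples, two LIFs per node."""
    plan = []
    for node_index, node in enumerate(nodes, start=1):
        for suffix, home_port in fabrics:
            # Node 1 yields iscsi_lif01a/b, node 2 yields iscsi_lif02a/b.
            plan.append((f"iscsi_lif{node_index:02d}{suffix}", node, home_port))
    return plan


nodes = ["<<var_nodeA>>", "<<var_nodeB>>"]
fabrics = [
    ("a", "e0e-<<var_iscsi_vlan_A_id>>"),
    ("b", "e0f-<<var_iscsi_vlan_B_id>>"),
]
for lif, node, port in iscsi_lif_plan(nodes, fabrics):
    print(lif, node, port)
```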

Create NFS LIFs in ONTAP

The following table lists the information needed to complete this configuration.

| Detail | Detail value |
|---|---|
| Storage node A NFS LIF 01 a IP | <<var_nodeA_nfs_lif_01_a_ip>> |
| Storage node A NFS LIF 01 a network mask | <<var_nodeA_nfs_lif_01_a_mask>> |
| Storage node A NFS LIF 01 b IP | <<var_nodeA_nfs_lif_01_b_ip>> |
| Storage node A NFS LIF 01 b network mask | <<var_nodeA_nfs_lif_01_b_mask>> |
| Storage node B NFS LIF 02 a IP | <<var_nodeB_nfs_lif_02_a_ip>> |
| Storage node B NFS LIF 02 a network mask | <<var_nodeB_nfs_lif_02_a_mask>> |
| Storage node B NFS LIF 02 b IP | <<var_nodeB_nfs_lif_02_b_ip>> |
| Storage node B NFS LIF 02 b network mask | <<var_nodeB_nfs_lif_02_b_mask>> |

-

Create an NFS LIF.

network interface create -vserver Infra-SVM -lif nfs_lif01_a -role data -data-protocol nfs -home-node <<var_nodeA>> -home-port e0e-<<var_nfs_vlan_id>> -address <<var_nodeA_nfs_lif_01_a_ip>> -netmask <<var_nodeA_nfs_lif_01_a_mask>> -status-admin up -failover-policy broadcast-domain-wide -firewall-policy data -auto-revert true

network interface create -vserver Infra-SVM -lif nfs_lif01_b -role data -data-protocol nfs -home-node <<var_nodeA>> -home-port e0f-<<var_nfs_vlan_id>> -address <<var_nodeA_nfs_lif_01_b_ip>> -netmask <<var_nodeA_nfs_lif_01_b_mask>> -status-admin up -failover-policy broadcast-domain-wide -firewall-policy data -auto-revert true

network interface create -vserver Infra-SVM -lif nfs_lif02_a -role data -data-protocol nfs -home-node <<var_nodeB>> -home-port e0e-<<var_nfs_vlan_id>> -address <<var_nodeB_nfs_lif_02_a_ip>> -netmask <<var_nodeB_nfs_lif_02_a_mask>> -status-admin up -failover-policy broadcast-domain-wide -firewall-policy data -auto-revert true

network interface create -vserver Infra-SVM -lif nfs_lif02_b -role data -data-protocol nfs -home-node <<var_nodeB>> -home-port e0f-<<var_nfs_vlan_id>> -address <<var_nodeB_nfs_lif_02_b_ip>> -netmask <<var_nodeB_nfs_lif_02_b_mask>> -status-admin up -failover-policy broadcast-domain-wide -firewall-policy data -auto-revert true

network interface show

Add infrastructure SVM administrator

The following table lists the information needed to complete this configuration.

| Detail | Detail value |
|---|---|
| Vsmgmt IP | <<var_svm_mgmt_ip>> |
| Vsmgmt network mask | <<var_svm_mgmt_mask>> |
| Vsmgmt default gateway | <<var_svm_mgmt_gateway>> |

To add the infrastructure SVM administrator and SVM administration LIF to the management network, complete the following steps:

-

Run the following command:

network interface create -vserver Infra-SVM -lif vsmgmt -role data -data-protocol none -home-node <<var_nodeB>> -home-port e0M -address <<var_svm_mgmt_ip>> -netmask <<var_svm_mgmt_mask>> -status-admin up -failover-policy broadcast-domain-wide -firewall-policy mgmt -auto-revert true

The SVM management IP here should be in the same subnet as the storage cluster management IP.

-

Create a default route to allow the SVM management interface to reach the outside world.

network route create -vserver Infra-SVM -destination 0.0.0.0/0 -gateway <<var_svm_mgmt_gateway>>

network route show

-

Set a password for the SVM vsadmin user and unlock the user.

security login password -username vsadmin -vserver Infra-SVM

Enter a new password: <<var_password>>

Enter it again: <<var_password>>

security login unlock -username vsadmin -vserver

Cisco UCS server configuration

FlexPod Cisco UCS base

Perform Initial Setup of Cisco UCS 6324 Fabric Interconnect for FlexPod Environments.

This section provides detailed procedures to configure Cisco UCS for use in a FlexPod ROBO environment by using Cisco UCS Manager.

Cisco UCS fabric interconnect 6324 A

Cisco UCS uses access layer networking and servers. This high-performance, next-generation server system provides a data center with a high degree of workload agility and scalability.

Cisco UCS Manager 4.0(1b) supports the 6324 Fabric Interconnect, which integrates the Fabric Interconnect into the Cisco UCS chassis and provides an integrated solution for smaller deployment environments. Cisco UCS Mini simplifies system management and reduces cost for small-scale deployments.

The hardware and software components support Cisco's unified fabric, which runs multiple types of data center traffic over a single converged network adapter.

Initial system setup

The first time that you access a fabric interconnect in a Cisco UCS domain, a setup wizard prompts you for the following information required to configure the system:

-

Installation method (GUI or CLI)

-

Setup mode (restore from full system backup or initial setup)

-

System configuration type (standalone or cluster configuration)

-

System name

-

Admin password

-

Management port IPv4 address and subnet mask, or IPv6 address and prefix

-

Default gateway IPv4 or IPv6 address

-

DNS Server IPv4 or IPv6 address

-

Default domain name

The following table lists the information needed to complete the Cisco UCS initial configuration on Fabric Interconnect A.

| Detail | Detail/value |
|---|---|
| System Name | <<var_ucs_clustername>> |
| Admin Password | <<var_password>> |
| Management IP Address: Fabric Interconnect A | <<var_ucsa_mgmt_ip>> |
| Management netmask: Fabric Interconnect A | <<var_ucsa_mgmt_mask>> |
| Default gateway: Fabric Interconnect A | <<var_ucsa_mgmt_gateway>> |
| Cluster IP address | <<var_ucs_cluster_ip>> |
| DNS server IP address | <<var_nameserver_ip>> |
| Domain name | <<var_domain_name>> |

To configure the Cisco UCS for use in a FlexPod environment, complete the following steps:

-

Connect to the console port on the first Cisco UCS 6324 Fabric Interconnect A.

Enter the configuration method. (console/gui) ? console

Enter the setup mode; setup newly or restore from backup. (setup/restore) ? setup

You have chosen to setup a new Fabric interconnect. Continue? (y/n): y

Enforce strong password? (y/n) [y]: Enter

Enter the password for "admin":<<var_password>>

Confirm the password for "admin":<<var_password>>

Is this Fabric interconnect part of a cluster(select 'no' for standalone)? (yes/no) [n]: yes

Enter the switch fabric (A/B) []: A

Enter the system name: <<var_ucs_clustername>>

Physical Switch Mgmt0 IP address : <<var_ucsa_mgmt_ip>>

Physical Switch Mgmt0 IPv4 netmask : <<var_ucsa_mgmt_mask>>

IPv4 address of the default gateway : <<var_ucsa_mgmt_gateway>>

Cluster IPv4 address : <<var_ucs_cluster_ip>>

Configure the DNS Server IP address? (yes/no) [n]: y

DNS IP address : <<var_nameserver_ip>>

Configure the default domain name? (yes/no) [n]: y

Default domain name: <<var_domain_name>>

Join centralized management environment (UCS Central)? (yes/no) [n]: no

NOTE: Cluster IP will be configured only after both Fabric Interconnects are initialized. UCSM will be functional only after peer FI is configured in clustering mode.

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Applying configuration. Please wait.

Configuration file - Ok

-

Review the settings displayed on the console. If they are correct, answer yes to apply and save the configuration.

-

Wait for the login prompt to verify that the configuration has been saved.

The following table lists the information needed to complete the Cisco UCS initial configuration on Fabric Interconnect B.

| Detail | Detail/value |
|---|---|
| System Name | <<var_ucs_clustername>> |
| Admin Password | <<var_password>> |
| Management IP Address-FI B | <<var_ucsb_mgmt_ip>> |
| Management Netmask-FI B | <<var_ucsb_mgmt_mask>> |
| Default Gateway-FI B | <<var_ucsb_mgmt_gateway>> |
| Cluster IP Address | <<var_ucs_cluster_ip>> |
| DNS Server IP address | <<var_nameserver_ip>> |
| Domain Name | <<var_domain_name>> |

-

Connect to the console port on the second Cisco UCS 6324 Fabric Interconnect B.

Enter the configuration method. (console/gui) ? console

Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Continue (y/n) ? y

Enter the admin password of the peer Fabric interconnect:<<var_password>>

Connecting to peer Fabric interconnect... done

Retrieving config from peer Fabric interconnect... done

Peer Fabric interconnect Mgmt0 IPv4 Address: <<var_ucsa_mgmt_ip>>

Peer Fabric interconnect Mgmt0 IPv4 Netmask: <<var_ucsa_mgmt_mask>>

Cluster IPv4 address: <<var_ucs_cluster_ip>>

Peer FI is IPv4 Cluster enabled. Please Provide Local Fabric Interconnect Mgmt0 IPv4 Address

Physical Switch Mgmt0 IP address : <<var_ucsb_mgmt_ip>>

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Applying configuration. Please wait.

Configuration file - Ok

-

Wait for the login prompt to confirm that the configuration has been saved.
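The <<var_...>> placeholders used in the console dialogs and commands in this guide can be filled in from a single table of site values, which helps keep both fabric interconnects consistent. The following is a minimal sketch of such a substitution; the function name and sample values are hypothetical, and an unknown placeholder raises an error instead of being left unfilled.

```python
import re

# Matches placeholders such as <<var_ucs_clustername>>.
PLACEHOLDER = re.compile(r"<<\s*(\w+)\s*>>")


def fill(template: str, values: dict) -> str:
    """Replace every <<name>> in template with values[name]."""
    def replace(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for <<{name}>>")
        return str(values[name])
    return PLACEHOLDER.sub(replace, template)


# Hypothetical site values.
site = {"var_ucs_clustername": "ucs-mini", "var_ucsa_mgmt_ip": "192.168.18.41"}
print(fill("Enter the system name: <<var_ucs_clustername>>", site))
```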

Log into Cisco UCS Manager

To log into the Cisco Unified Computing System (UCS) environment, complete the following steps:

-

Open a web browser and navigate to the Cisco UCS Fabric Interconnect cluster address.

You may need to wait at least 5 minutes after configuring the second fabric interconnect for Cisco UCS Manager to come up.

-

Click the Launch UCS Manager link to launch Cisco UCS Manager.

-

Accept the necessary security certificates.

-

When prompted, enter admin as the user name and enter the administrator password.

-

Click Login to log in to Cisco UCS Manager.

Cisco UCS Manager software version 4.0(1b)

This document assumes the use of Cisco UCS Manager Software version 4.0(1b). To upgrade the Cisco UCS Manager software and the Cisco UCS 6324 Fabric Interconnect software, refer to the Cisco UCS Manager Install and Upgrade Guides.

Configure Cisco UCS Call Home

Cisco highly recommends that you configure Call Home in Cisco UCS Manager. Configuring Call Home accelerates the resolution of support cases. To configure Call Home, complete the following steps:

-

In Cisco UCS Manager, click Admin on the left.

-

Select All > Communication Management > Call Home.

-

Change the State to On.

-

Fill in all the fields according to your management preferences, click Save Changes, and then click OK to complete configuring Call Home.

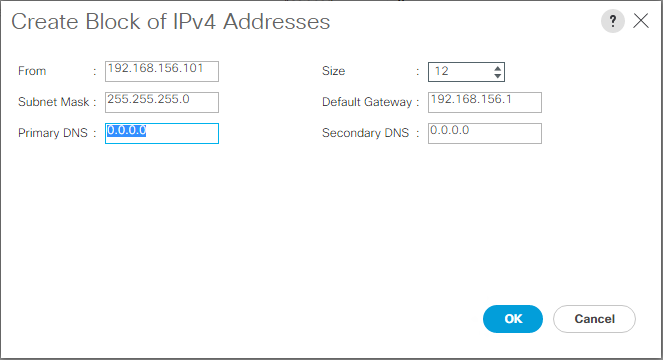

Add block of IP addresses for keyboard, video, mouse access

To create a block of IP addresses for in-band server keyboard, video, mouse (KVM) access in the Cisco UCS environment, complete the following steps:

-

In Cisco UCS Manager, click LAN on the left.

-

Expand Pools > root > IP Pools.

-

Right-click IP Pool ext-mgmt and select Create Block of IPv4 Addresses.

-

Enter the starting IP address of the block, number of IP addresses required, and the subnet mask and gateway information.

-

Click OK to create the block.

-

Click OK in the confirmation message.

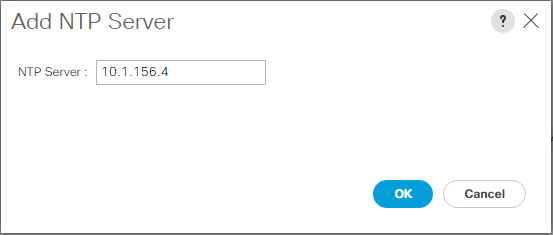

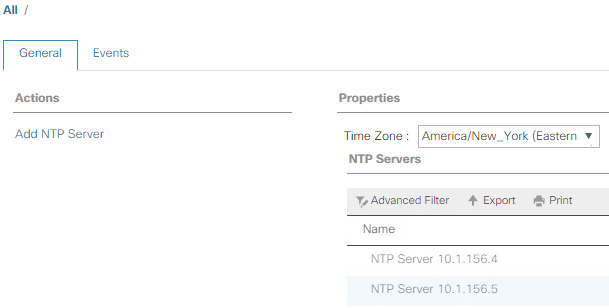

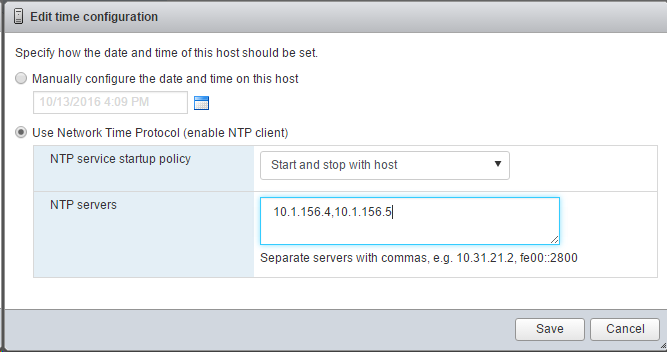

Synchronize Cisco UCS to NTP

To synchronize the Cisco UCS environment to the NTP servers in the Nexus switches, complete the following steps:

-

In Cisco UCS Manager, click Admin on the left.

-

Expand All > Time Zone Management.

-

Select Time Zone.

-

In the Properties pane, select the appropriate time zone in the Time Zone menu.

-

Click Save Changes and click OK.

-

Click Add NTP Server.

-

Enter <switch-a-ntp-ip> or <Nexus-A-mgmt-IP> and click OK. Click OK.

-

Click Add NTP Server.

-

Enter <switch-b-ntp-ip> or <Nexus-B-mgmt-IP> and click OK. Click OK on the confirmation.

Edit chassis discovery policy

Setting the discovery policy simplifies the addition of Cisco UCS B-Series chassis and of additional fabric extenders for further Cisco UCS C-Series connectivity. To modify the chassis discovery policy, complete the following steps:

-

In Cisco UCS Manager, click Equipment on the left and select Equipment in the second list.

-

In the right pane, select the Policies tab.

-

Under Global Policies, set the Chassis/FEX Discovery Policy to match the minimum number of uplink ports that are cabled between the chassis or fabric extenders (FEXes) and the fabric interconnects.

-

Set the Link Grouping Preference to Port Channel. If the environment being set up carries a large amount of multicast traffic, set the Multicast Hardware Hash setting to Enabled.

-

Click Save Changes.

-

Click OK.

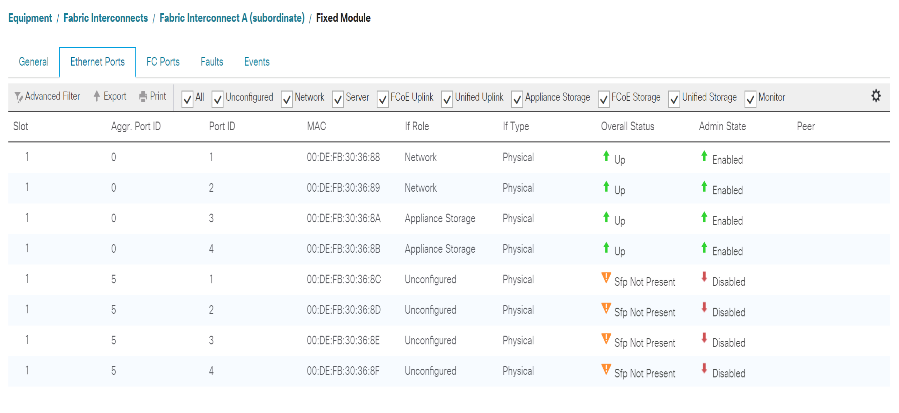

Enable server, uplink, and storage ports

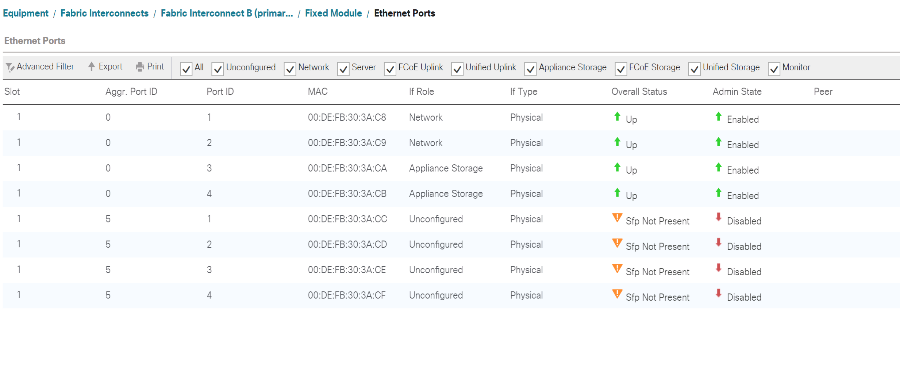

To enable server and uplink ports, complete the following steps:

-

In Cisco UCS Manager, in the navigation pane, select the Equipment tab.

-

Expand Equipment > Fabric Interconnects > Fabric Interconnect A > Fixed Module.

-

Expand Ethernet Ports.

-

Select ports 1 and 2 that are connected to the Cisco Nexus 31108 switches, right-click, and select Configure as Uplink Port.

-

Click Yes to confirm the uplink ports and click OK.

-

Select ports 3 and 4 that are connected to the NetApp Storage Controllers, right-click, and select Configure as Appliance Port.

-

Click Yes to confirm the appliance ports.

-

On the Configure as Appliance Port window, click OK.

-

Click OK to confirm.

-

In the left pane, select Fixed Module under Fabric Interconnect A.

-

From the Ethernet Ports tab, confirm that ports have been configured correctly in the If Role column. If any ports for C-Series servers were configured on the Scalability port, click it to verify port connectivity there.

-

Expand Equipment > Fabric Interconnects > Fabric Interconnect B > Fixed Module.

-

Expand Ethernet Ports.

-

Select Ethernet ports 1 and 2 that are connected to the Cisco Nexus 31108 switches, right-click, and select Configure as Uplink Port.

-

Click Yes to confirm the uplink ports and click OK.

-

Select ports 3 and 4 that are connected to the NetApp Storage Controllers, right-click, and select Configure as Appliance Port.

-

Click Yes to confirm the appliance ports.

-

On the Configure as Appliance Port window, click OK.

-

Click OK to confirm.

-

In the left pane, select Fixed Module under Fabric Interconnect B.

-

From the Ethernet Ports tab, confirm that the ports have been configured correctly in the If Role column. If any C-Series servers were configured on the Scalability port, click it to verify port connectivity there.
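The port layout is identical on both fabric interconnects, so it can be written down once and used as a checklist when confirming the If Role column. The following is a minimal sketch; the role labels `uplink` and `appliance` are simplified names chosen for illustration and are not the exact strings shown in Cisco UCS Manager.

```python
# Port roles used in this procedure; the same layout applies to both
# fabric interconnects. The role labels are simplified, illustrative names.
PORT_ROLES = {1: "uplink", 2: "uplink", 3: "appliance", 4: "appliance"}
FABRICS = ("A", "B")


def port_plan():
    """Return (fabric, port, role) tuples for every configured port."""
    return [(fabric, port, role)
            for fabric in FABRICS
            for port, role in sorted(PORT_ROLES.items())]


def mismatches(observed):
    """Compare observed {(fabric, port): role} entries against the plan.

    Returns (fabric, port, expected_role, observed_role) for each port
    whose observed role is missing or different.
    """
    return [(fabric, port, role, observed.get((fabric, port)))
            for fabric, port, role in port_plan()
            if observed.get((fabric, port)) != role]


for fabric, port, role in port_plan():
    print(f"Fabric Interconnect {fabric} port {port}: {role}")
```

Passing the roles read from the GUI to `mismatches` returns an empty list when every port matches the plan.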

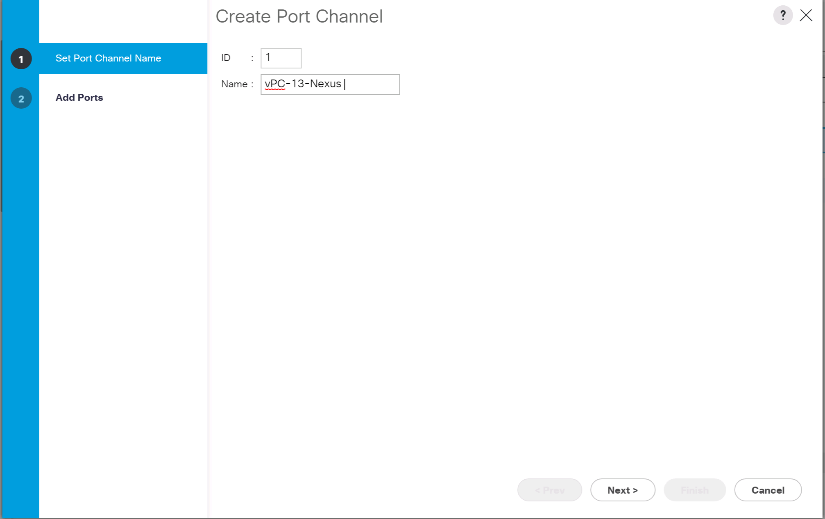

Create uplink port channels to Cisco Nexus 31108 switches

To configure the necessary port channels in the Cisco UCS environment, complete the following steps:

-

In Cisco UCS Manager, select the LAN tab in the navigation pane.

In this procedure, two port channels are created: one from Fabric A to both Cisco Nexus 31108 switches and one from Fabric B to both Cisco Nexus 31108 switches. If you are using standard switches, modify this procedure accordingly. If you are using 1 Gigabit Ethernet (1GbE) switches and GLC-T SFPs on the Fabric Interconnects, the interface speeds of Ethernet ports 1/1 and 1/2 in the Fabric Interconnects must be set to 1Gbps.

-

Under LAN > LAN Cloud, expand the Fabric A tree.

-

Right-click Port Channels.

-

Select Create Port Channel.

-

Enter 13 as the unique ID of the port channel.

-

Enter vPC-13-Nexus as the name of the port channel.

-

Click Next.

-

Select the following ports to be added to the port channel:

-

Slot ID 1 and port 1

-

Slot ID 1 and port 2

-

Click >> to add the ports to the port channel.

-

Click Finish to create the port channel. Click OK.

-

Under Port Channels, select the newly created port channel.

The port channel should have an Overall Status of Up.

-

In the navigation pane, under LAN > LAN Cloud, expand the Fabric B tree.

-

Right-click Port Channels.

-

Select Create Port Channel.

-

Enter 14 as the unique ID of the port channel.

-

Enter vPC-14-Nexus as the name of the port channel. Click Next.

-

Select the following ports to be added to the port channel:

-

Slot ID 1 and port 1

-

Slot ID 1 and port 2

-

Click >> to add the ports to the port channel.

-

Click Finish to create the port channel. Click OK.

-

Under Port Channels, select the newly created port channel.

-

The port channel should have an Overall Status of Up.
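The two port channels differ only in fabric and ID, and the name is derived from the ID. As a rough sketch of that pattern (the `PortChannel` class and `uplink_port_channel` helper are hypothetical names introduced for illustration, not part of any Cisco tool):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortChannel:
    fabric: str
    channel_id: int
    name: str
    members: tuple  # (slot, port) pairs


def uplink_port_channel(fabric, channel_id):
    """Build the port channel definition used in this procedure."""
    return PortChannel(
        fabric=fabric,
        channel_id=channel_id,
        name=f"vPC-{channel_id}-Nexus",
        members=((1, 1), (1, 2)),  # slot ID 1, ports 1 and 2
    )


plan = [uplink_port_channel("A", 13), uplink_port_channel("B", 14)]
for pc in plan:
    ports = ", ".join(f"{slot}/{port}" for slot, port in pc.members)
    print(f"Fabric {pc.fabric}: ID {pc.channel_id}, {pc.name}, ports {ports}")
```

Keeping the ID in the name makes it easier to match each Cisco UCS port channel to its counterpart on the Cisco Nexus switches.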

Create an organization (optional)

Organizations are used to organize resources and restrict access to various groups within the IT organization, thereby enabling multitenancy of the compute resources.

|

Although this document does not assume the use of organizations, this procedure provides instructions for creating one. |

To configure an organization in the Cisco UCS environment, complete the following steps:

-

In Cisco UCS Manager, from the New menu in the toolbar at the top of the window, select Create Organization.

-

Enter a name for the organization.

-

Optional: Enter a description for the organization. Click OK.

-

Click OK in the confirmation message.

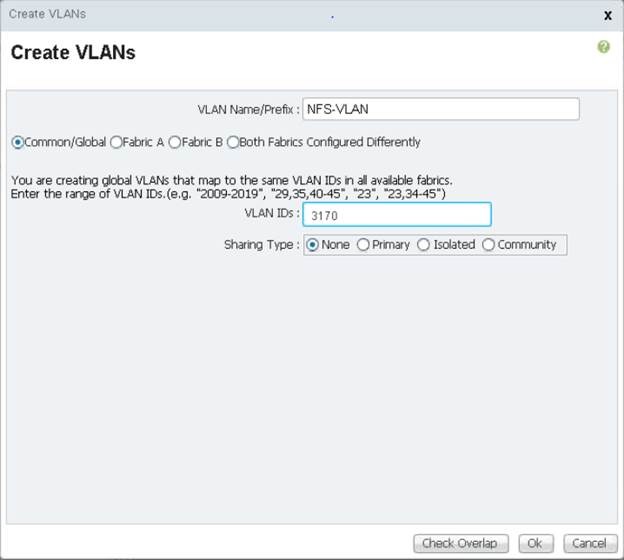

Configure storage appliance ports and storage VLANs

To configure the storage appliance ports and storage VLANs, complete the following steps:

-

In Cisco UCS Manager, select the LAN tab.

-

Expand the Appliances cloud.

-

Right-click VLANs under Appliances Cloud.

-

Select Create VLANs.

-

Enter NFS-VLAN as the name for the Infrastructure NFS VLAN.

-

Leave Common/Global selected.

-

Enter <<var_nfs_vlan_id>> for the VLAN ID.

-

Leave Sharing Type set to None.

-

Click OK, and then click OK again to create the VLAN.

-

Right-click VLANs under Appliances Cloud.

-

Select Create VLANs.

-

Enter iSCSI-A-VLAN as the name for the Infrastructure iSCSI Fabric A VLAN.

-

Leave Common/Global selected.

-

Enter <<var_iscsi-a_vlan_id>> for the VLAN ID.

-

Click OK, and then click OK again to create the VLAN.

-

Right-click VLANs under Appliances Cloud.

-

Select Create VLANs.

-

Enter iSCSI-B-VLAN as the name for the Infrastructure iSCSI Fabric B VLAN.

-

Leave Common/Global selected.

-

Enter <<var_iscsi-b_vlan_id>> for the VLAN ID.

-

Click OK, and then click OK again to create the VLAN.

-

Right-click VLANs under Appliances Cloud.

-

Select Create VLANs.

-

Enter Native-VLAN as the name for the Native VLAN.

-

Leave Common/Global selected.

-

Enter <<var_native_vlan_id>> for the VLAN ID.

-

Click OK, and then click OK again to create the VLAN.

-

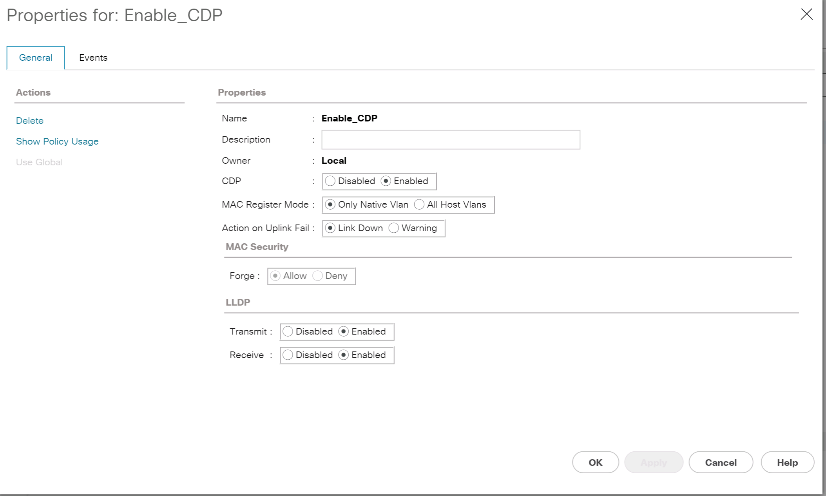

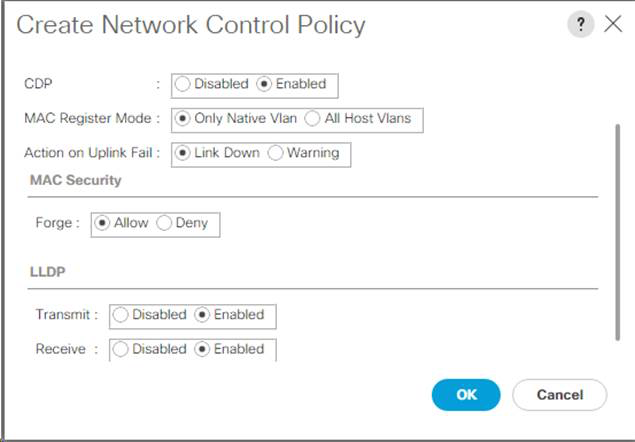

In the navigation pane, under LAN > Policies, expand Appliances and right-click Network Control Policies.

-

Select Create Network Control Policy.

-

Name the policy Enable_CDP_LLPD and select Enabled next to CDP.

-

Enable the Transmit and Receive features for LLDP.

-

Click OK and then click OK again to create the policy.

-

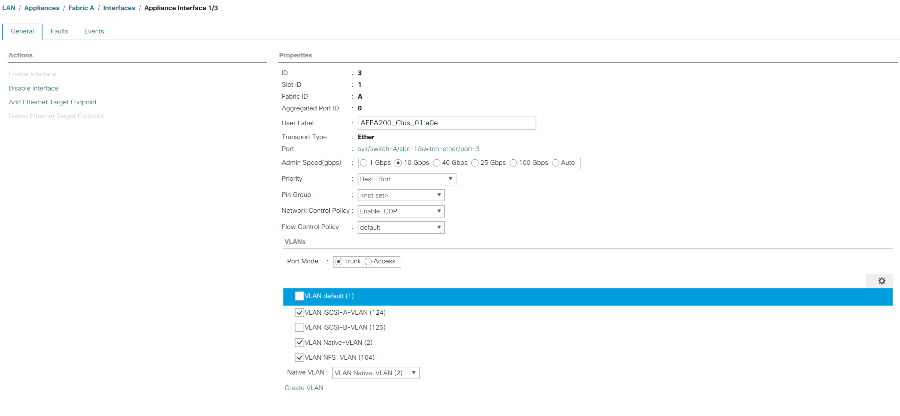

In the navigation pane, under LAN > Appliances Cloud, expand the Fabric A tree.

-

Expand Interfaces.

-

Select Appliance Interface 1/3.

-

In the User Label field, enter information that identifies the storage controller port, such as <storage_controller_01_name>:e0e. Click Save Changes and OK.

-

Select the Enable_CDP_LLPD Network Control Policy and click Save Changes and OK.

-

Under VLANs, select the iSCSI-A-VLAN, NFS-VLAN, and Native-VLAN. Set the Native-VLAN as the Native VLAN. Clear the default VLAN selection.

-

Click Save Changes and OK.

-

Select Appliance Interface 1/4 under Fabric A.

-

In the User Label field, enter information that identifies the storage controller port, such as <storage_controller_02_name>:e0e. Click Save Changes and OK.

-

Select the Enable_CDP_LLPD Network Control Policy and click Save Changes and OK.

-

Under VLANs, select the iSCSI-A-VLAN, NFS-VLAN, and Native-VLAN.

-

Set the Native-VLAN as the Native VLAN.

-

Clear the default VLAN selection.

-

Click Save Changes and OK.

-

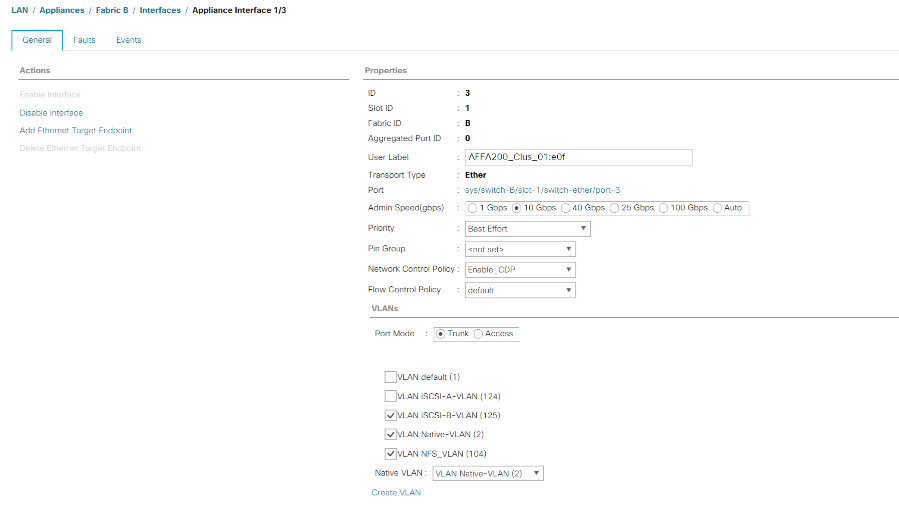

In the navigation pane, under LAN > Appliances Cloud, expand the Fabric B tree.

-

Expand Interfaces.

-

Select Appliance Interface 1/3.

-

In the User Label field, enter information that identifies the storage controller port, such as <storage_controller_01_name>:e0f. Click Save Changes and OK.

-

Select the Enable_CDP_LLPD Network Control Policy and click Save Changes and OK.

-

Under VLANs, select the iSCSI-B-VLAN, NFS-VLAN, and Native-VLAN. Set the Native-VLAN as the Native VLAN. Clear the default VLAN selection.

-

Click Save Changes and OK.

-

Select Appliance Interface 1/4 under Fabric B.

-

In the User Label field, enter information that identifies the storage controller port, such as <storage_controller_02_name>:e0f. Click Save Changes and OK.

-

Select the Enable_CDP_LLPD Network Control Policy and click Save Changes and OK.

-

Under VLANs, select the iSCSI-B-VLAN, NFS-VLAN, and Native-VLAN. Set the Native-VLAN as the Native VLAN. Clear the default VLAN selection.

-

Click Save Changes and OK.
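The VLAN selections above follow one rule: each appliance interface carries the iSCSI VLAN of its own fabric plus the NFS and native VLANs, with Native-VLAN set as native. The sketch below restates that rule as data so that the four interfaces can be checked against it. The VLAN names come from this procedure; the function and variable names are hypothetical.

```python
# VLAN names come from the steps above; the fabric-to-iSCSI mapping
# mirrors the selections made on each appliance interface.
COMMON_VLANS = ["NFS-VLAN", "Native-VLAN"]
ISCSI_VLAN = {"A": "iSCSI-A-VLAN", "B": "iSCSI-B-VLAN"}
APPLIANCE_PORTS = ["1/3", "1/4"]


def appliance_vlan_plan():
    """Return {(fabric, port): settings} for all four appliance interfaces."""
    plan = {}
    for fabric, iscsi in ISCSI_VLAN.items():
        for port in APPLIANCE_PORTS:
            plan[(fabric, port)] = {
                "vlans": [iscsi] + COMMON_VLANS,
                "native": "Native-VLAN",
            }
    return plan


for (fabric, port), cfg in sorted(appliance_vlan_plan().items()):
    print(f"Fabric {fabric} {port}: {', '.join(cfg['vlans'])} "
          f"(native: {cfg['native']})")
```

Note that the default VLAN does not appear in any entry, which matches the instruction to clear it on every interface.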

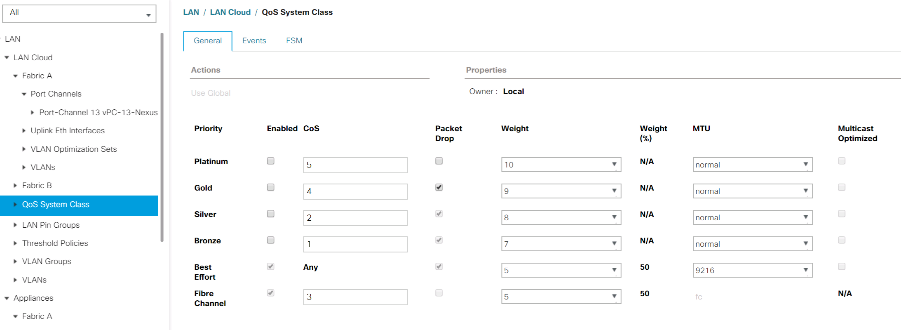

Set jumbo frames in Cisco UCS fabric

To configure jumbo frames and enable quality of service in the Cisco UCS fabric, complete the following steps:

-

In Cisco UCS Manager, in the navigation pane, click the LAN tab.

-

Select LAN > LAN Cloud > QoS System Class.

-

In the right pane, click the General tab.

-

On the Best Effort row, enter 9216 in the box under the MTU column.

-

Click Save Changes.

-

Click OK.

Acknowledge Cisco UCS chassis

To acknowledge all Cisco UCS chassis, complete the following steps:

-

In Cisco UCS Manager, select the Equipment tab, and then expand the Equipment tab on the right.

-

Expand Equipment > Chassis.

-

In the Actions pane for Chassis 1, select Acknowledge Chassis.

-

Click OK, and then click OK again to complete acknowledging the chassis.

-

Click Close to close the Properties window.

Load Cisco UCS 4.0(1b) firmware images

To upgrade the Cisco UCS Manager software and the Cisco UCS Fabric Interconnect software to version 4.0(1b), refer to the Cisco UCS Manager Install and Upgrade Guides.

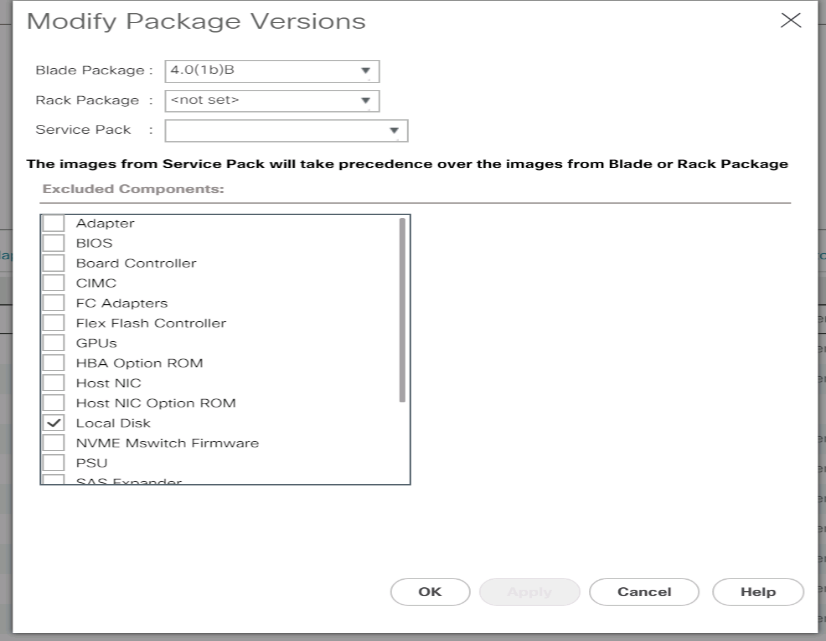

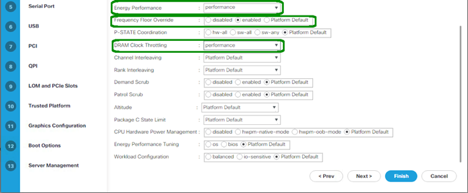

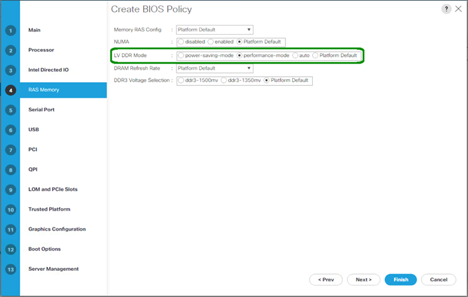

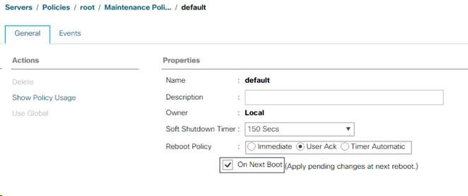

Create host firmware package

Firmware management policies allow the administrator to select the corresponding packages for a given server configuration. These policies often include packages for adapter, BIOS, board controller, FC adapters, host bus adapter (HBA) option ROM, and storage controller properties.

To create a firmware management policy for a given server configuration in the Cisco UCS environment, complete the following steps:

-

In Cisco UCS Manager, click Servers on the left.

-

Select Policies > root.

-

Expand Host Firmware Packages.

-

Select default.

-

In the Actions pane, select Modify Package Versions.

-

Select the version 4.0(1b) for both the Blade Packages.

-

Click OK, and then click OK again to modify the host firmware package.

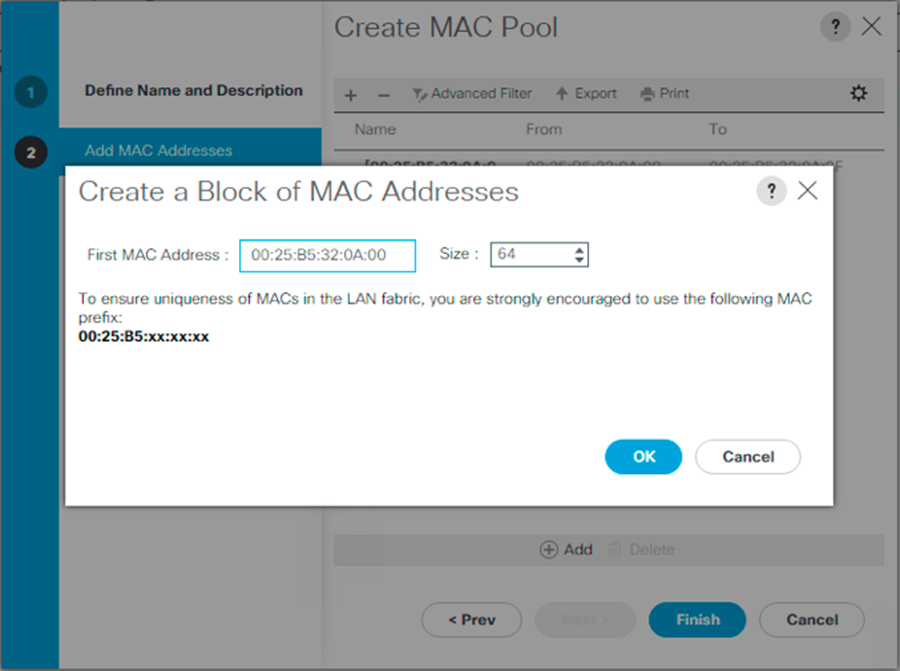

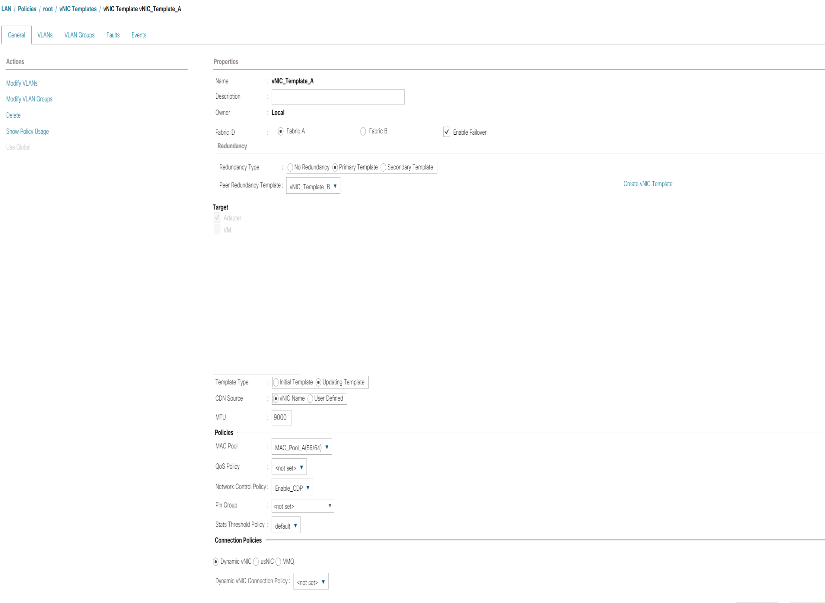

Create MAC address pools

To configure the necessary MAC address pools for the Cisco UCS environment, complete the following steps:

-

In Cisco UCS Manager, click LAN on the left.

-

Select Pools > root.

In this procedure, two MAC address pools are created, one for each switching fabric.

-

Right-click MAC Pools under the root organization.

-

Select Create MAC Pool to create the MAC address pool.

-

Enter MAC-Pool-A as the name of the MAC pool.

-

Optional: Enter a description for the MAC pool.

-

Select Sequential as the option for Assignment Order. Click Next.

-

Click Add.

-

Specify a starting MAC address.

For the FlexPod solution, the recommendation is to place 0A in the next-to-last octet of the starting MAC address to identify all of the MAC addresses as fabric A addresses. This example also embeds the Cisco UCS domain number, which gives 00:25:B5:32:0A:00 as the first MAC address.

-

Specify a size for the MAC address pool that is sufficient to support the available blade or server resources. Click OK.

-

Click Finish.

-

In the confirmation message, click OK.

-

Right-click MAC Pools under the root organization.

-

Select Create MAC Pool to create the MAC address pool.

-

Enter MAC-Pool-B as the name of the MAC pool.

-

Optional: Enter a description for the MAC pool.

-

Select Sequential as the option for Assignment Order. Click Next.

-

Click Add.

-

Specify a starting MAC address.

For the FlexPod solution, the recommendation is to place 0B in the next-to-last octet of the starting MAC address to identify all of the MAC addresses in this pool as fabric B addresses. As with fabric A, this example also embeds the Cisco UCS domain number, which gives 00:25:B5:32:0B:00 as the first MAC address.

-

Specify a size for the MAC address pool that is sufficient to support the available blade or server resources. Click OK.

-

Click Finish.

-

In the confirmation message, click OK.
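To see which addresses a pool of a given size will hand out, the sequential assignment can be reproduced with a few lines of arithmetic. This is a minimal sketch rather than a Cisco tool: the starting addresses are the ones used in this document, and the pool size of 4 is a hypothetical value chosen only to keep the output short.

```python
def mac_pool(start, size):
    """Return `size` sequential MAC addresses beginning at `start`."""
    value = int(start.replace(":", ""), 16)
    if value + size - 1 > 0xFFFFFFFFFFFF:
        raise ValueError("pool runs past the end of the MAC address space")
    macs = []
    for offset in range(size):
        raw = f"{value + offset:012X}"
        macs.append(":".join(raw[i:i + 2] for i in range(0, 12, 2)))
    return macs


# Starting addresses from this document; the size is illustrative only.
pool_a = mac_pool("00:25:B5:32:0A:00", 4)
pool_b = mac_pool("00:25:B5:32:0B:00", 4)
print(pool_a)
print(pool_b)
```

Because only the last octet varies within a fabric's block, a pool that starts at 0A:00 and holds more than 256 addresses would continue into the 0B range, so the fabric marker in the next-to-last octet is reliable only for pools of 256 addresses or fewer.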

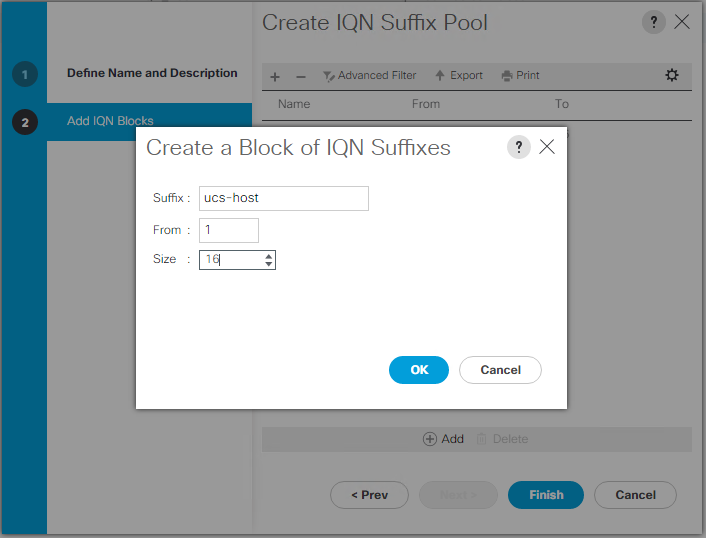

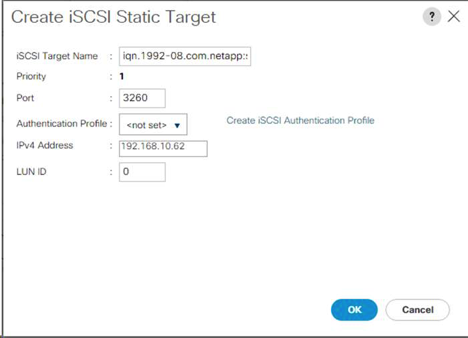

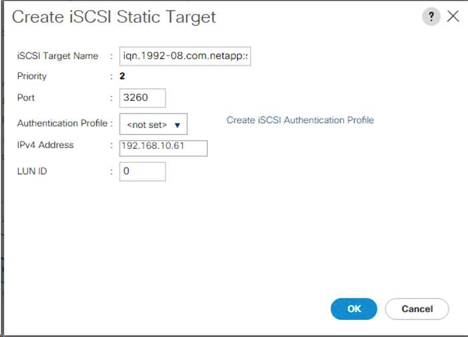

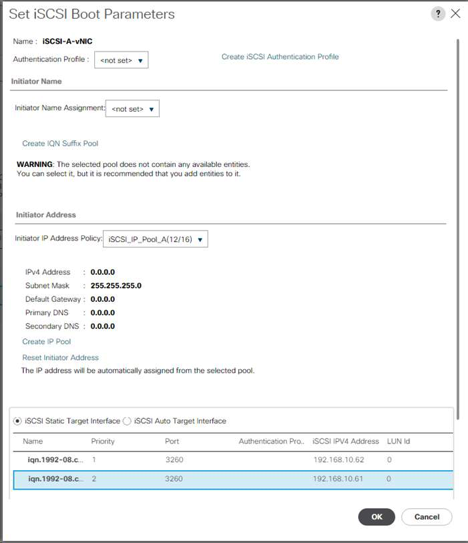

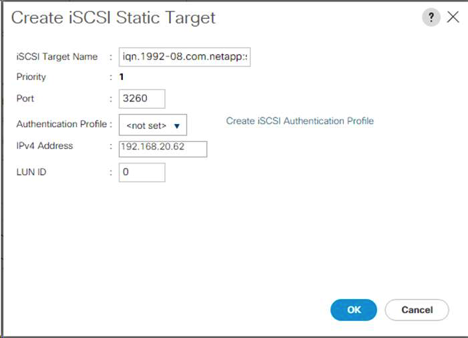

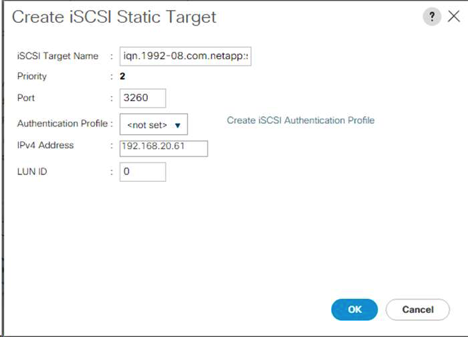

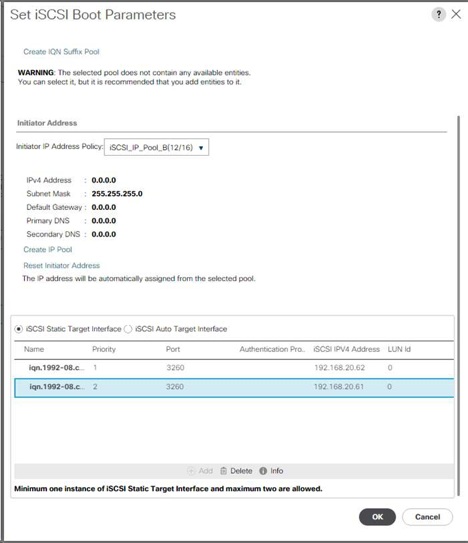

Create iSCSI IQN pool

To configure the necessary IQN pools for the Cisco UCS environment, complete the following steps:

-

In Cisco UCS Manager, click SAN on the left.

-

Select Pools > root.

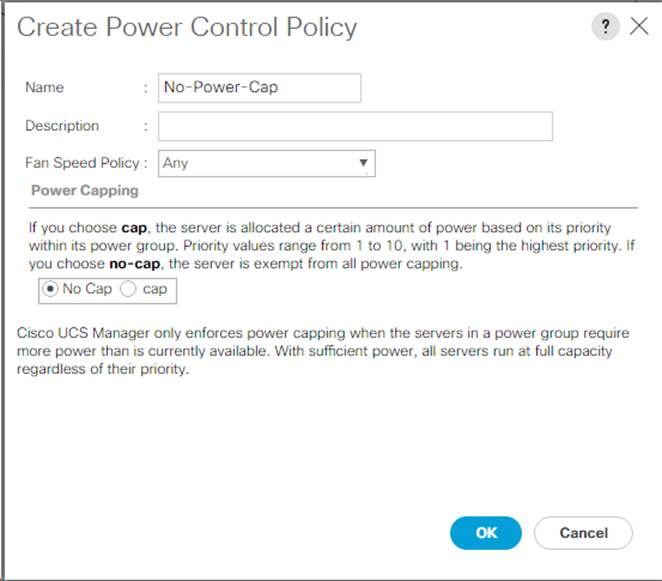

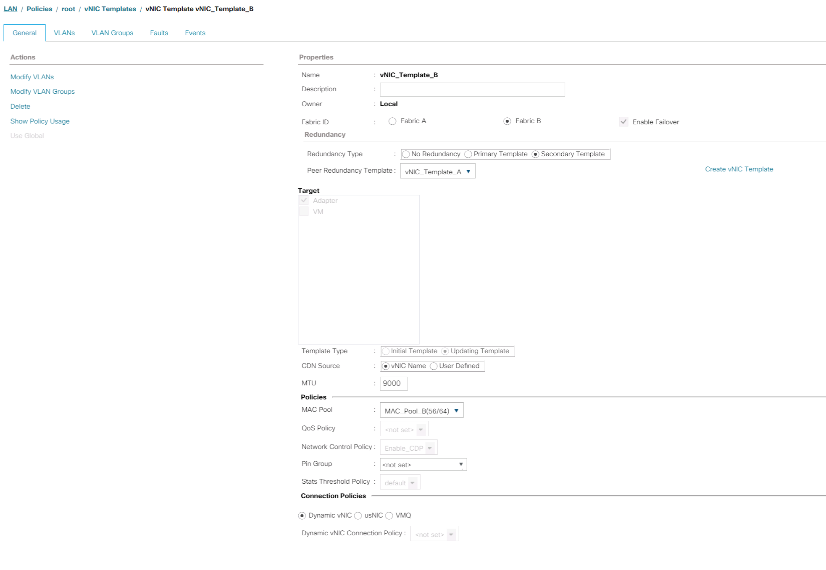

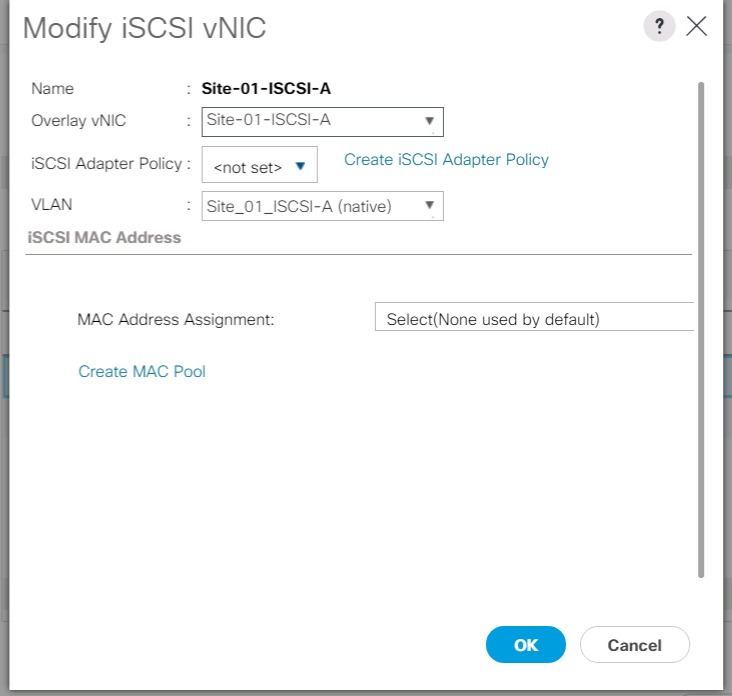

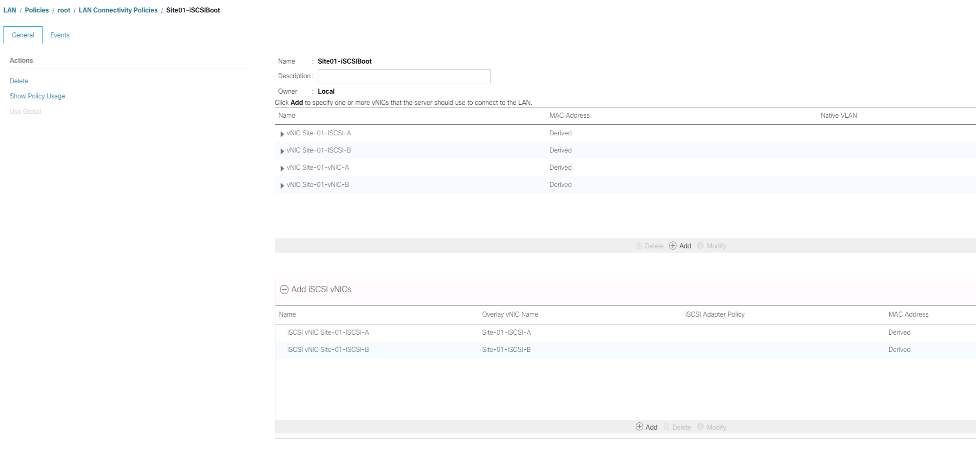

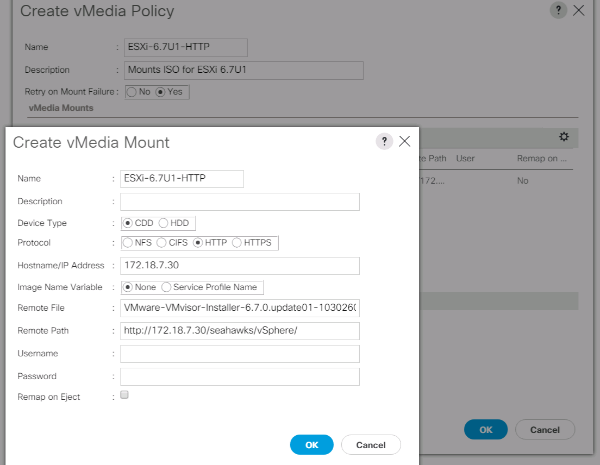

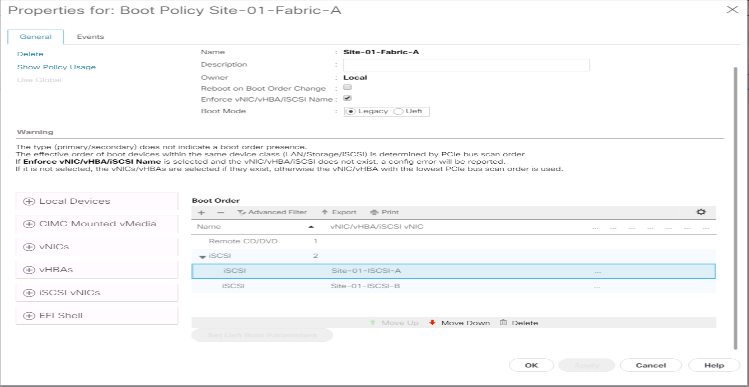

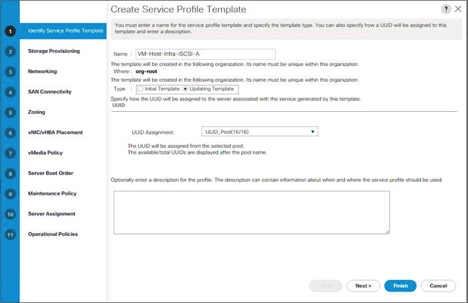

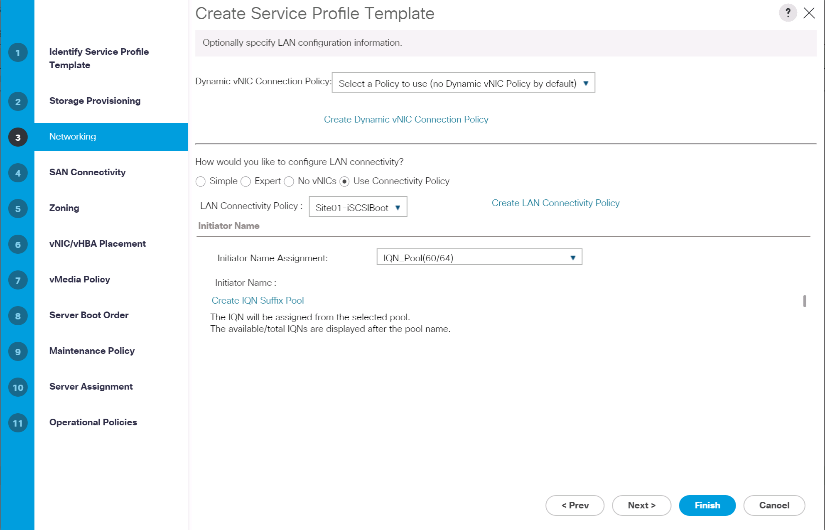

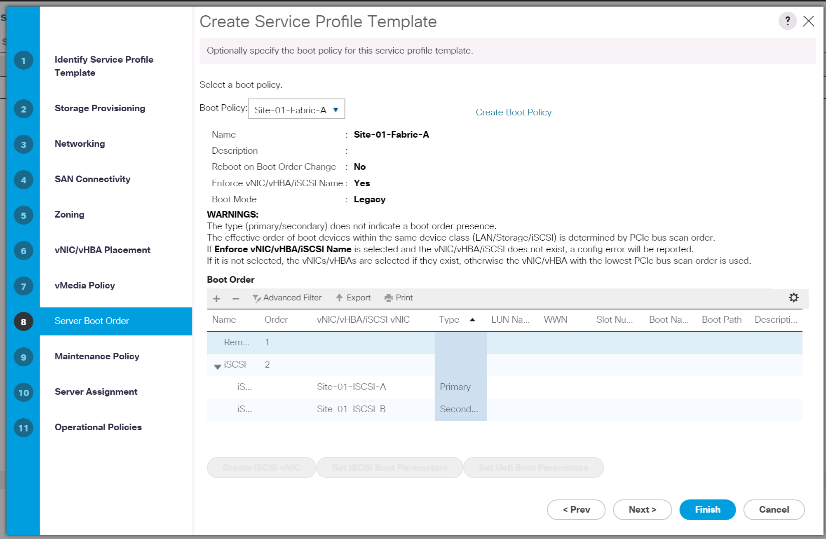

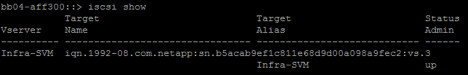

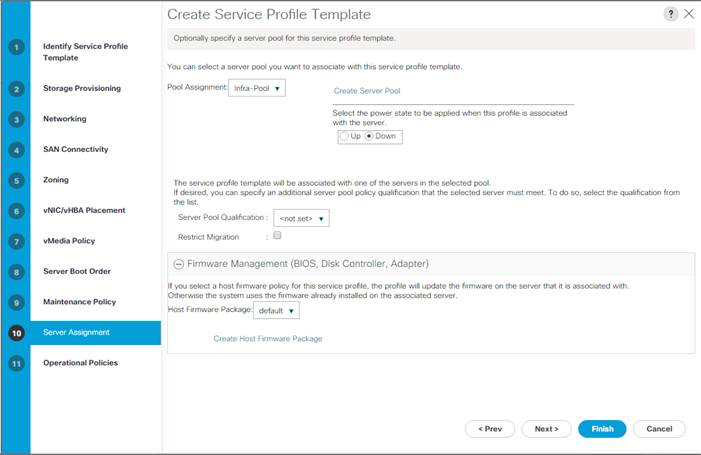

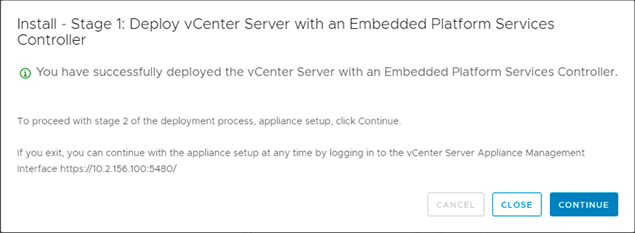

-