Example implementation

Due to the large number of options available for system and storage setups, the example implementation should be used as a template for your individual system setup and configuration requirements.

The example scripts are provided as is and are not supported by NetApp. You can request the current version of the scripts via email to ng-sapcc@netapp.com.

Validated configurations and limitations

The following principles were applied to the example implementation and might need to be adapted to meet customer needs:

- Managed SAP systems used NFS to access NetApp storage volumes and were set up based on the adaptive design principle.
- You can use all ONTAP releases supported by the NetApp Ansible modules (ZAPI and REST API).
- Credentials for a single NetApp cluster and SVM were hard-coded as variables in the provider script.
- Storage cloning was performed on the same storage system that was used by the source SAP system.
- Storage volumes for the target SAP system had the same names as the source volumes with an appended suffix.
- No cloning at secondary storage (SnapVault/SnapMirror) was implemented.
- FlexClone split was not implemented.
- Instance numbers were identical for the source and target SAP systems.

Lab setup

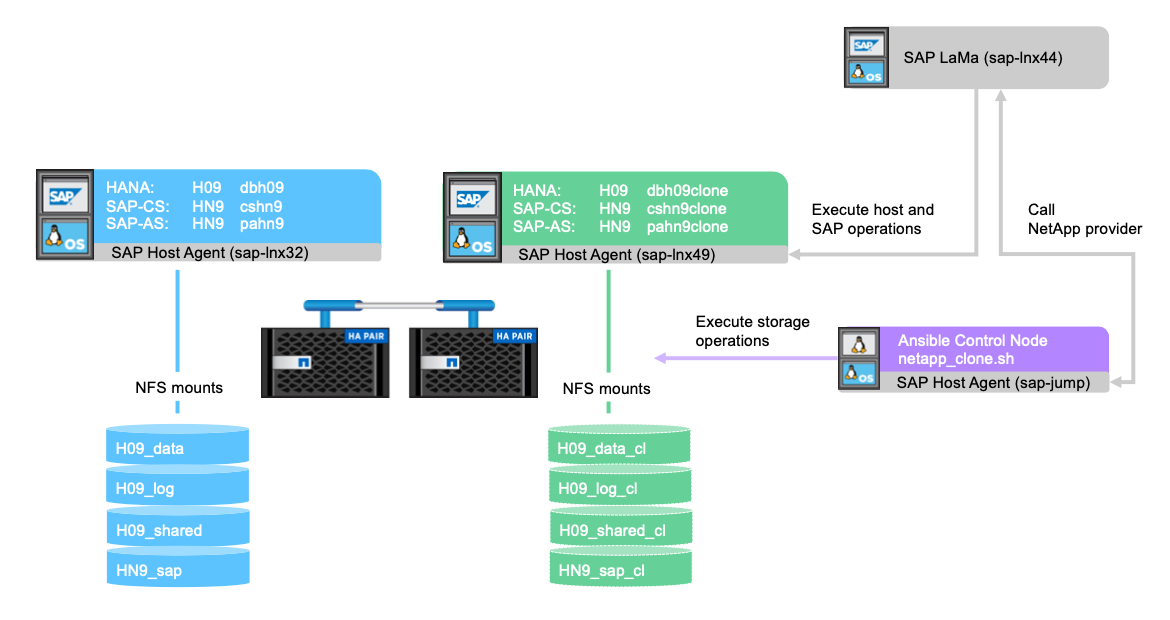

The following figure shows the lab setup we used. The source SAP system HN9 used for the system clone operation consisted of the database H09, the SAP CS, and the SAP AS services, all running on the same host (sap-lnx32) and installed with adaptive design enabled. An Ansible control node was prepared according to the Ansible Playbooks for NetApp ONTAP documentation.

The SAP host agent was also installed on the Ansible control node. The NetApp provider script and the Ansible playbooks were configured on the Ansible control node as described in the “Appendix: Provider Script Configuration.”

The host sap-lnx49 was used as the target for the SAP LaMa cloning operations, and the isolation-ready feature was configured there.

Different SAP systems (HNA as source and HN2 as target) were used for the system copy and system refresh operations, because Post Copy Automation (PCA) was enabled on those systems.

The following software releases were used in the lab setup:

- SAP LaMa Enterprise Edition 3.00 SP23_2
- SAP HANA 2.00.052.00.1599235305
- SAP 7.77 Patch 27 (S/4HANA 1909)
- SAP Host Agent 7.22 Patch 56
- SAPACEXT 7.22 Patch 69
- Linux SLES 15 SP2
- Ansible 2.13.7
- NetApp ONTAP 9.8P8

SAP LaMa configuration

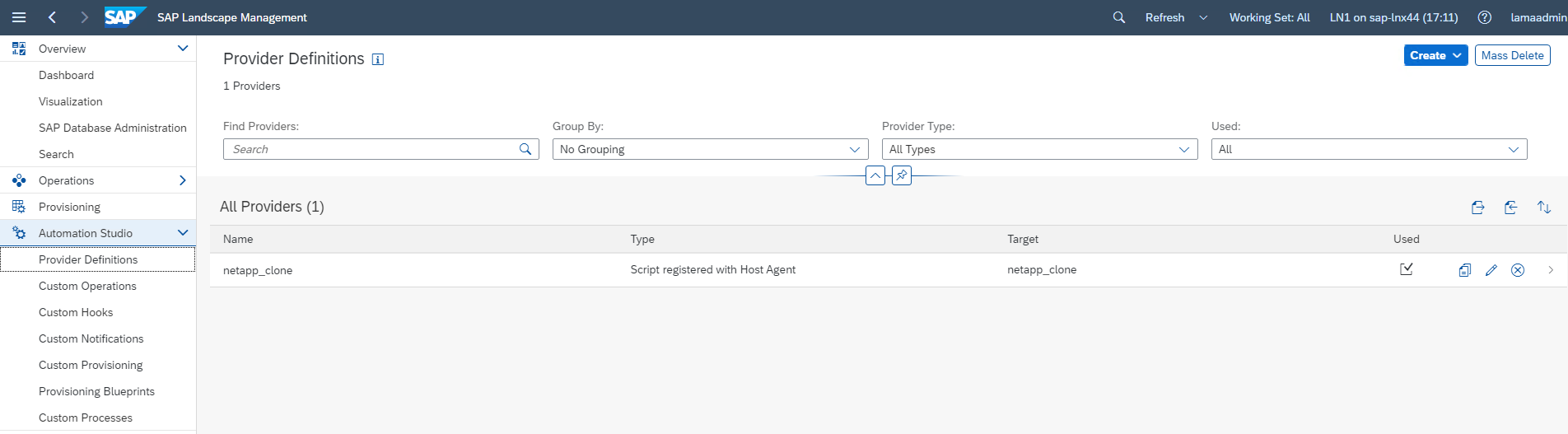

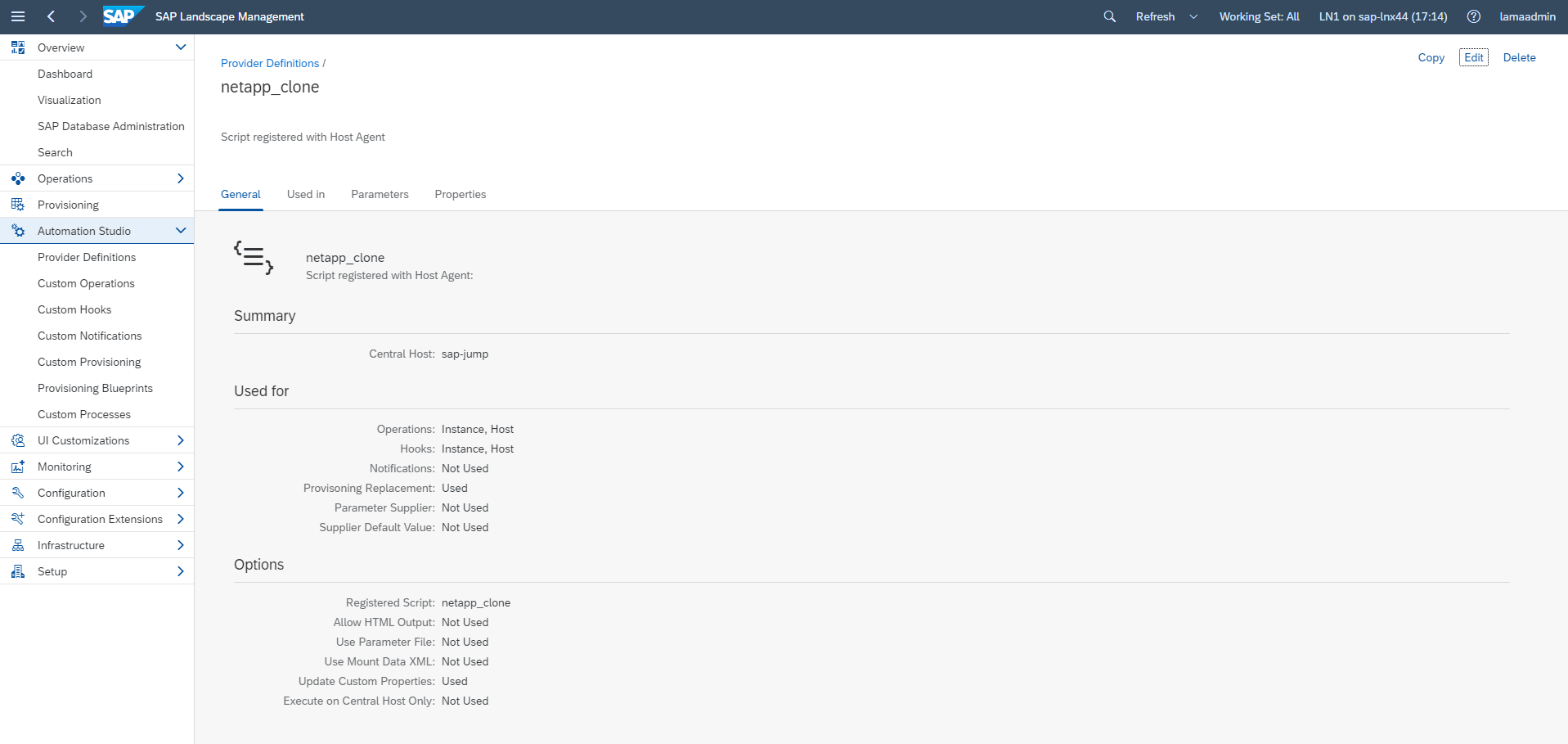

SAP LaMa provider definition

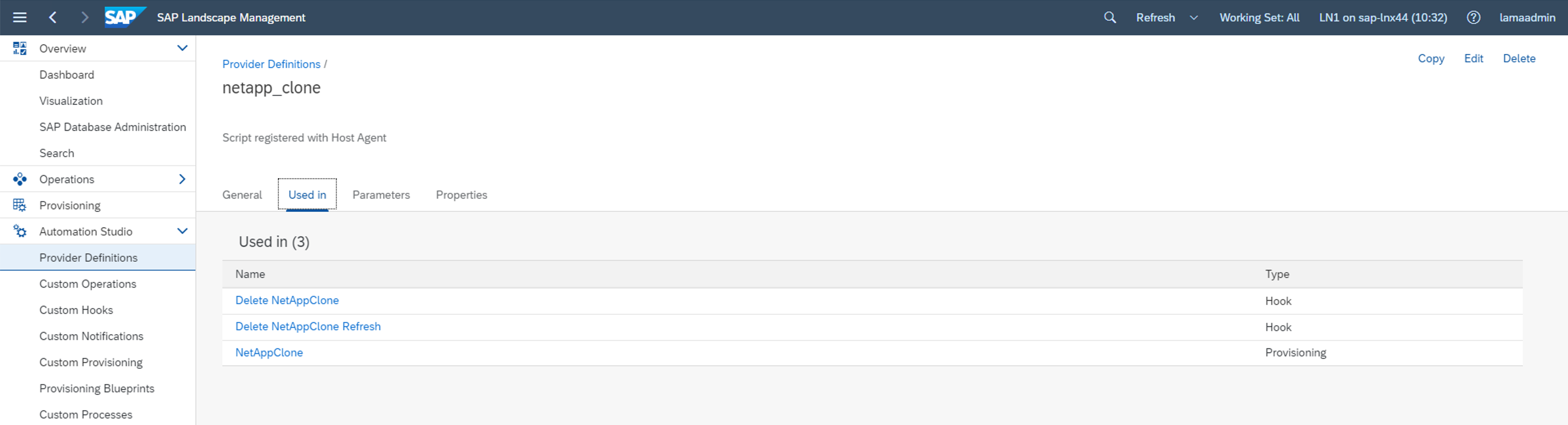

The provider definition is created in the SAP LaMa Automation Studio, as shown in the following screenshot. The example implementation uses a single provider definition for the different custom provisioning steps and operation hooks explained earlier.

The provider netapp_clone is defined as the script netapp_clone.sh, which is registered with the SAP host agent. The SAP host agent runs on the central host sap-jump, which also acts as the Ansible control node.
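
The following Python sketch illustrates how a provider of this kind might hand a request from the SAP host agent to Ansible on the control node. The operation names (`clone`, `delete`), the playbook paths, and the variable names are hypothetical; the actual netapp_clone.sh is a shell script whose interface is not shown in this document.

```python
import json
import shlex
from typing import Dict, List

# Hypothetical mapping of provider operations to playbook files on the
# Ansible control node; the real script's operation and playbook names are
# not given in this document.
PLAYBOOKS: Dict[str, str] = {
    "clone": "/opt/netapp/playbooks/netapp_clone.yml",
    "delete": "/opt/netapp/playbooks/netapp_delete_clone.yml",
}


def build_playbook_command(operation: str, extra_vars: Dict[str, str]) -> List[str]:
    """Return the ansible-playbook argument list for one provider call."""
    if operation not in PLAYBOOKS:
        raise ValueError(f"unsupported operation: {operation!r}")
    # Passing the variables as one JSON document avoids quoting problems
    # with key=value pairs that contain spaces.
    return [
        "ansible-playbook",
        PLAYBOOKS[operation],
        "--extra-vars",
        json.dumps(extra_vars, sort_keys=True),
    ]


if __name__ == "__main__":
    cmd = build_playbook_command("clone", {"sid": "HN9", "clone_postfix": "_CL1"})
    # A real provider would execute this with subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Building an argument list instead of a shell string keeps the values that SAP LaMa passes in from being interpreted by a shell.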

The Used in tab shows the custom operations that use the provider. The configuration of the custom provisioning NetAppClone and of the custom hooks Delete NetAppClone and Delete NetAppClone Refresh is shown in the following sections.

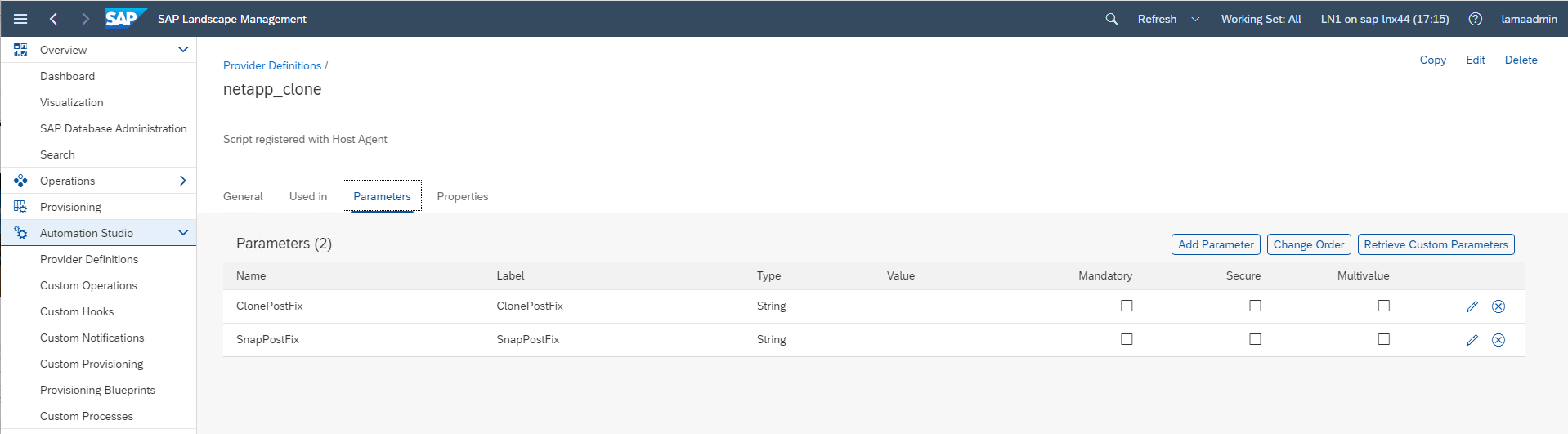

The parameters ClonePostFix and SnapPostFix are requested during the execution of the provisioning workflow and are used to name the FlexClone volumes and the Snapshot copies, respectively.
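
As a minimal sketch of how the two parameters might be turned into names, the following Python function assumes that each FlexClone volume keeps the source volume name and appends ClonePostFix, in line with the naming principle listed under the validated configurations, and that the Snapshot copy is named `snap_` plus SnapPostFix. The `snap_` prefix and the sample volume names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CloneNames:
    source_volume: str
    snapshot: str      # Snapshot copy created on the source volume
    clone_volume: str  # FlexClone volume created from that Snapshot copy


def derive_clone_names(source_volumes: List[str], clone_postfix: str,
                       snap_postfix: str) -> List[CloneNames]:
    """Derive Snapshot and FlexClone names for each source volume.

    Hypothetical convention: clone = source name + ClonePostFix,
    Snapshot copy = "snap_" + SnapPostFix.
    """
    if not clone_postfix:
        # An empty postfix would make the clone name collide with the source.
        raise ValueError("ClonePostFix must not be empty")
    return [
        CloneNames(
            source_volume=vol,
            snapshot=f"snap_{snap_postfix}",
            clone_volume=f"{vol}{clone_postfix}",
        )
        for vol in source_volumes
    ]


if __name__ == "__main__":
    for names in derive_clone_names(["HN9_data_mnt00001", "HN9_shared"], "_CL1", "run1"):
        print(names)
```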

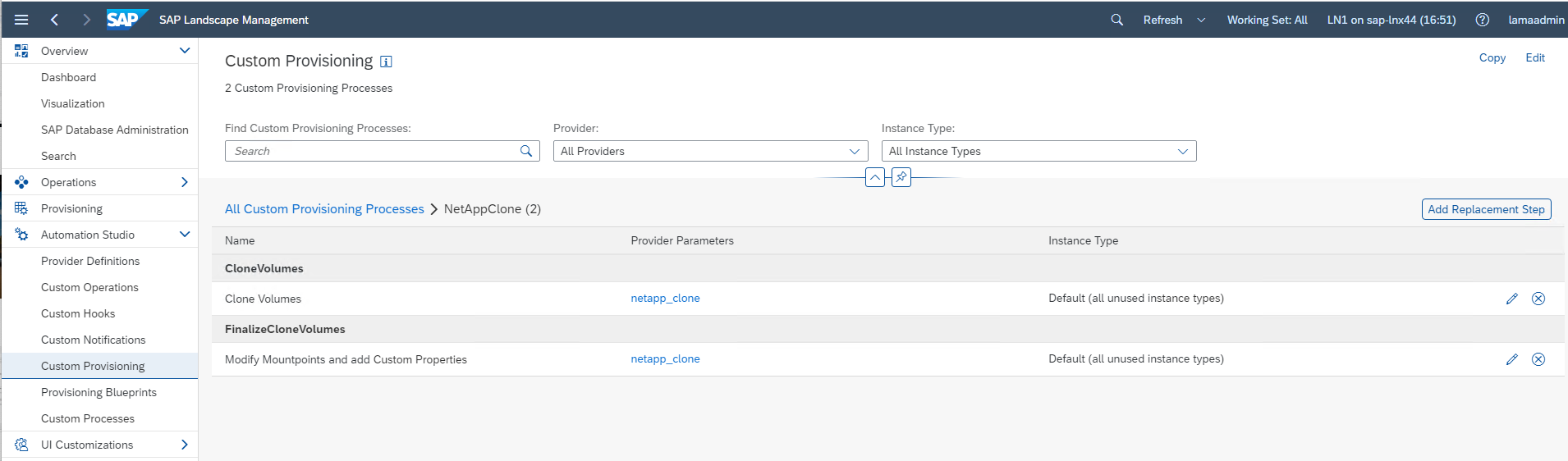

SAP LaMa custom provisioning

In the SAP LaMa custom provisioning configuration, the custom provider described earlier replaces the provisioning workflow steps Clone Volumes and PostCloneVolumes.
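
To illustrate what a replacement for the Clone Volumes step might pass to Ansible, the following Python sketch builds one Snapshot task and one FlexClone task per source volume, using the `netapp.ontap.na_ontap_snapshot` and `netapp.ontap.na_ontap_volume_clone` modules from the NetApp ONTAP Ansible collection. The SVM name, the volume names, and the junction path convention are assumptions; connection options are omitted, and the PostCloneVolumes step is not covered.

```python
import json
from typing import Any, Dict, List


def clone_tasks(svm: str, source_volumes: List[str], clone_postfix: str,
                snapshot_name: str) -> List[Dict[str, Any]]:
    """Build Ansible tasks that create a Snapshot copy and a FlexClone per volume.

    The clone is created on the same SVM as its parent, matching the
    "same storage system" principle. Connection options (hostname,
    username, password) are intentionally omitted from this sketch.
    """
    tasks: List[Dict[str, Any]] = []
    for vol in source_volumes:
        clone = f"{vol}{clone_postfix}"
        tasks.append({
            "name": f"Create Snapshot copy {snapshot_name} on {vol}",
            "netapp.ontap.na_ontap_snapshot": {
                "state": "present",
                "vserver": svm,
                "volume": vol,
                "snapshot": snapshot_name,
            },
        })
        tasks.append({
            "name": f"Create FlexClone volume {clone} from {vol}",
            "netapp.ontap.na_ontap_volume_clone": {
                "state": "present",
                "vserver": svm,
                "name": clone,
                "parent_volume": vol,
                "parent_snapshot": snapshot_name,
                # Hypothetical convention: mount each clone under its own name.
                "junction_path": f"/{clone}",
            },
        })
    return tasks


if __name__ == "__main__":
    play = [{"hosts": "localhost", "gather_facts": False,
             "tasks": clone_tasks("svm-sap01", ["HN9_data_mnt00001"], "_CL1", "snap_run1")}]
    # Print the generated play for inspection.
    print(json.dumps(play, indent=2))
```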

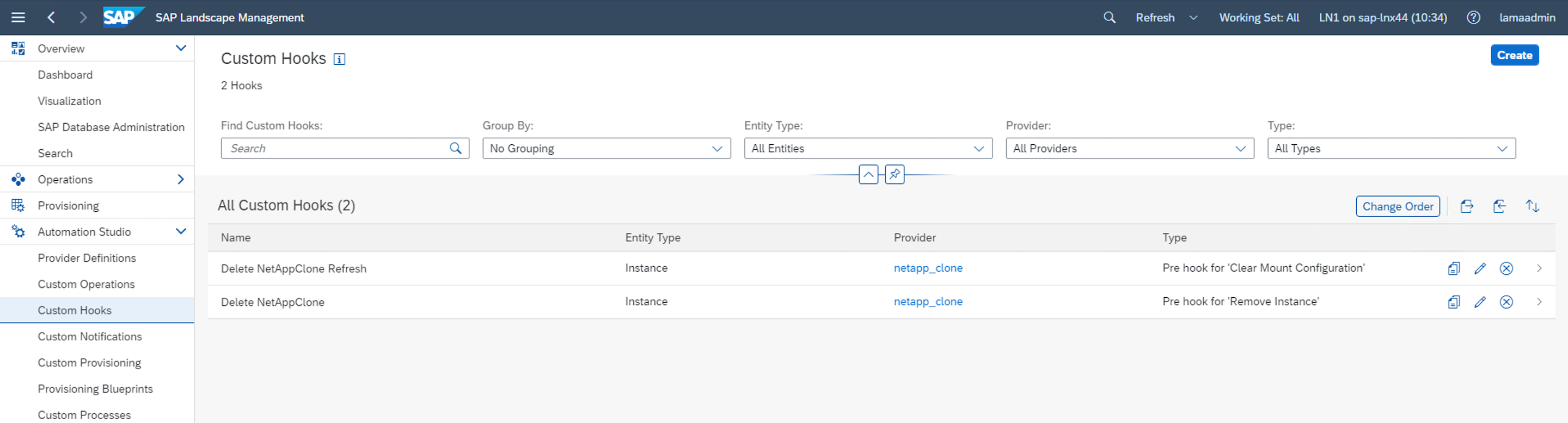

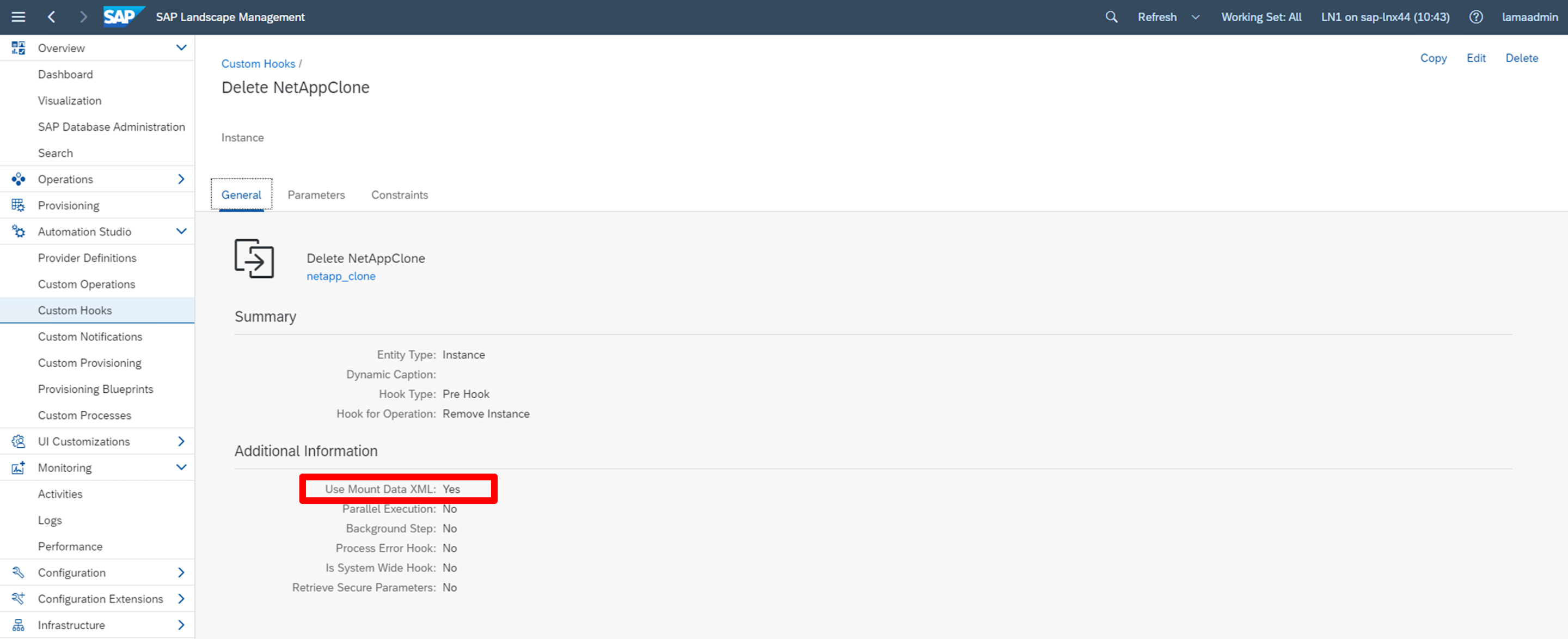

SAP LaMa custom hook

If a system is deleted with the system destroy workflow, the hook Delete NetAppClone calls the provider definition netapp_clone. The Delete NetAppClone Refresh hook is used during the system refresh workflow, because the instance is preserved during its execution.
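
The delete hooks need the reverse operation. The following Python sketch, under assumed names, builds tasks that delete each FlexClone volume with `netapp.ontap.na_ontap_volume` and then remove the Snapshot copy it was based on. The clone is removed first because ONTAP does not allow deleting a Snapshot copy that still backs a FlexClone volume. The postfix check is a hypothetical safeguard, not part of the original scripts.

```python
from typing import Any, Dict, List


def delete_clone_tasks(svm: str, clone_volumes: List[str], clone_postfix: str,
                       snapshot_name: str) -> List[Dict[str, Any]]:
    """Build Ansible tasks that remove FlexClone volumes and their Snapshot copies.

    Hypothetical safeguard: only volumes whose names end with the clone
    postfix are touched, so a wrong volume list cannot delete a source volume.
    """
    if not clone_postfix:
        raise ValueError("clone postfix must not be empty")
    tasks: List[Dict[str, Any]] = []
    for clone in clone_volumes:
        if not clone.endswith(clone_postfix):
            raise ValueError(f"refusing to delete non-clone volume {clone!r}")
        parent = clone[: -len(clone_postfix)]
        # Delete the clone first; its parent Snapshot copy is busy until then.
        tasks.append({
            "name": f"Delete FlexClone volume {clone}",
            "netapp.ontap.na_ontap_volume": {
                "state": "absent", "vserver": svm, "name": clone},
        })
        tasks.append({
            "name": f"Delete Snapshot copy {snapshot_name} on {parent}",
            "netapp.ontap.na_ontap_snapshot": {
                "state": "absent", "vserver": svm,
                "volume": parent, "snapshot": snapshot_name},
        })
    return tasks
```

The same task list could serve both hooks, since in both workflows the cloned volumes are removed while the source volumes stay untouched.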

It is important to select Use Mount Data XML for the custom hook so that SAP LaMa passes the mount point configuration to the provider.
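
With the mount data available, the provider can derive the affected volumes from the NFS mounts. The following Python sketch parses a simplified, hypothetical XML layout: the element names `mount`, `mountpoint`, and `exportpath` are placeholders, not the schema SAP LaMa actually uses. It also assumes that each volume is mounted at the junction path `/<volume name>`.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# Hypothetical, simplified mount data for illustration only.
SAMPLE = """<mountconfig>
  <mount fstype="nfs">
    <mountpoint>/hana/data/HN9/mnt00001</mountpoint>
    <exportpath>10.0.0.10:/HN9_data_mnt00001</exportpath>
  </mount>
  <mount fstype="nfs">
    <mountpoint>/hana/shared</mountpoint>
    <exportpath>10.0.0.10:/HN9_shared/shared</exportpath>
  </mount>
</mountconfig>"""


def volumes_from_mount_xml(xml_text: str) -> Dict[str, str]:
    """Map each NFS mount point to the volume behind it.

    Assumes junction path == "/<volume name>", so the first path component
    of the export is the volume name.
    """
    result: Dict[str, str] = {}
    for mount in ET.fromstring(xml_text).iter("mount"):
        if mount.get("fstype") != "nfs":
            continue
        mountpoint = mount.findtext("mountpoint", default="").strip()
        export = mount.findtext("exportpath", default="").strip()
        # "server:/junction/subdir" -> "junction"
        _, _, path = export.partition(":/")
        volume = path.strip("/").split("/")[0]
        if mountpoint and volume:
            result[mountpoint] = volume
    return result


if __name__ == "__main__":
    for mp, vol in volumes_from_mount_xml(SAMPLE).items():
        print(f"{mp} -> {vol}")
```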

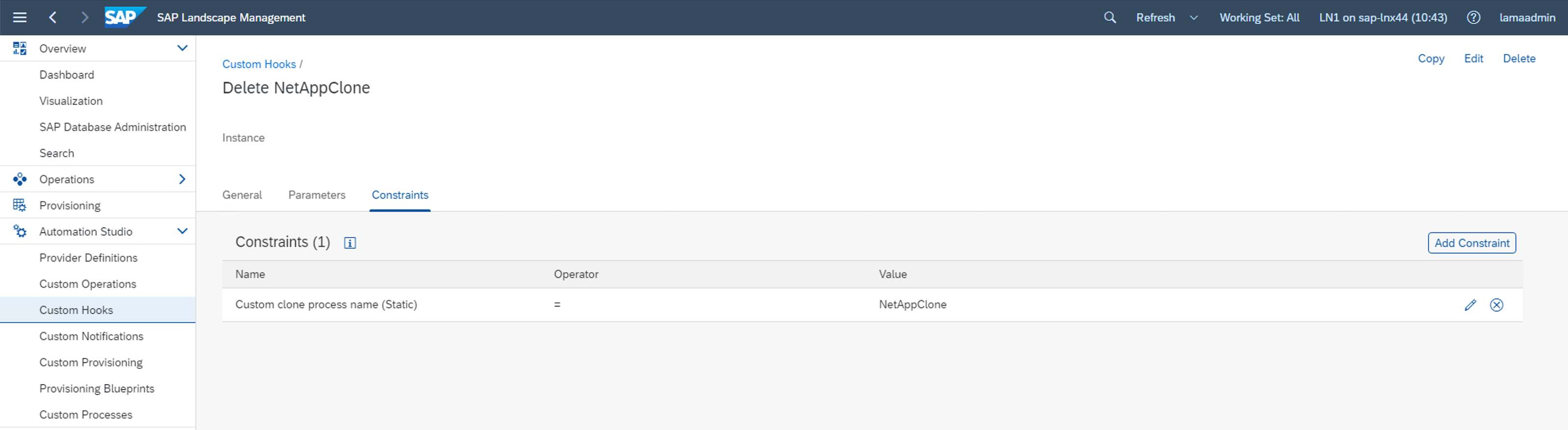

To ensure that the custom hook is executed only when the system was created with a custom provisioning workflow, the following constraint is added to it.

More information about the use of custom hooks can be found in the SAP LaMa Documentation.

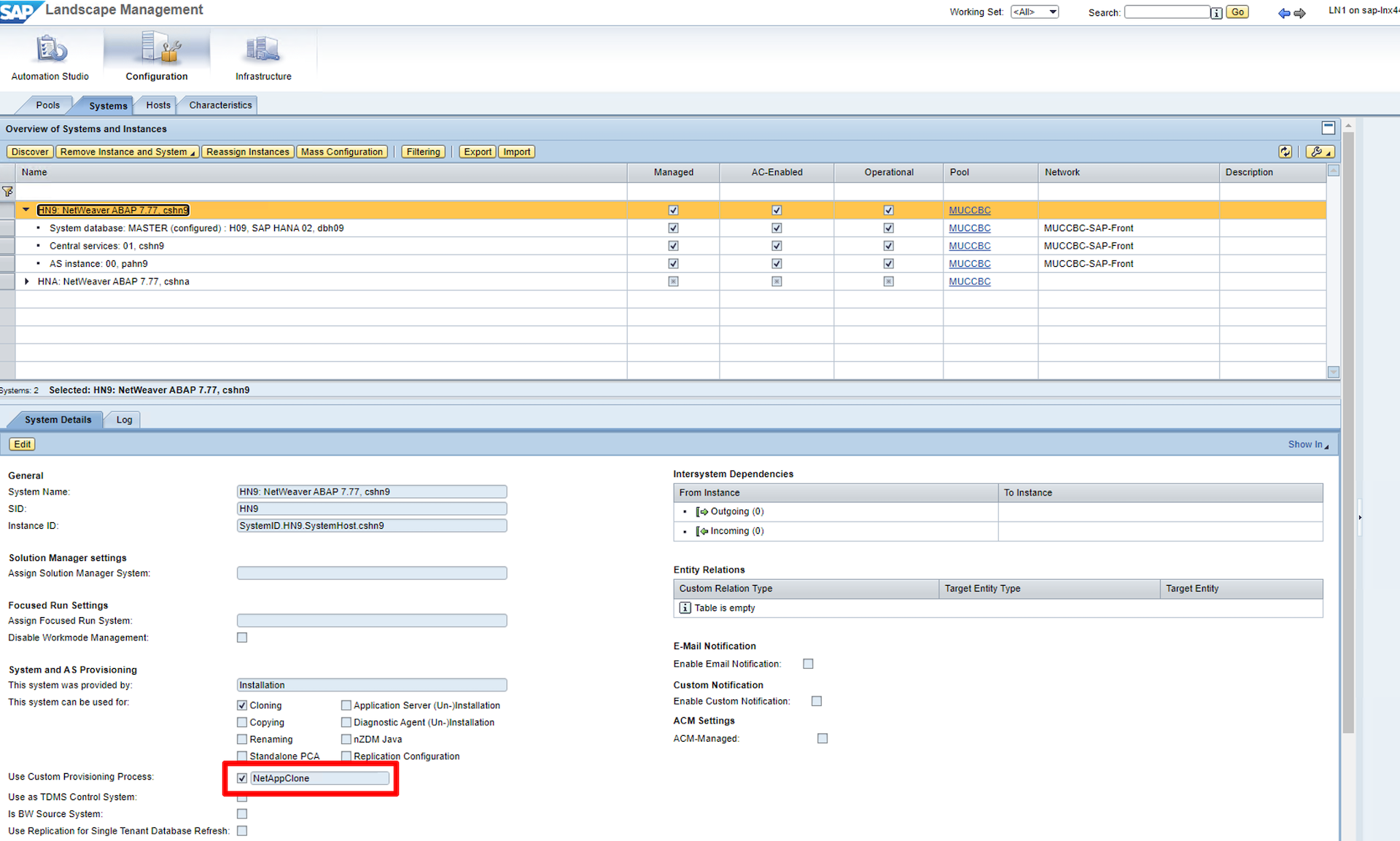

Enable custom provisioning workflow for SAP source system

To enable the custom provisioning workflow for the source system, adapt the system configuration: select the Use Custom Provisioning Process checkbox and the corresponding custom provisioning definition.