Configure NVMe/TCP supplemental storage for VCF Workload Domains

In this scenario we will demonstrate how to configure NVMe/TCP supplemental storage for a VCF workload domain.

Author: Josh Powell

Scenario Overview

This scenario covers the following high level steps:

-

Create a storage virtual machine (SVM) with logical interfaces (LIFs) for NVMe/TCP traffic.

-

Create distributed port groups for NVMe/TCP networks on the VI workload domain.

-

Create VMkernel adapters for NVMe/TCP on the ESXi hosts for the VI workload domain.

-

Add NVMe/TCP adapters on ESXi hosts.

-

Deploy NVMe/TCP datastore.

Prerequisites

This scenario requires the following components and configurations:

-

An ONTAP ASA storage system with physical data ports on ethernet switches dedicated to storage traffic.

-

VCF management domain deployment is complete and the vSphere client is accessible.

-

A VI workload domain has been previously deployed.

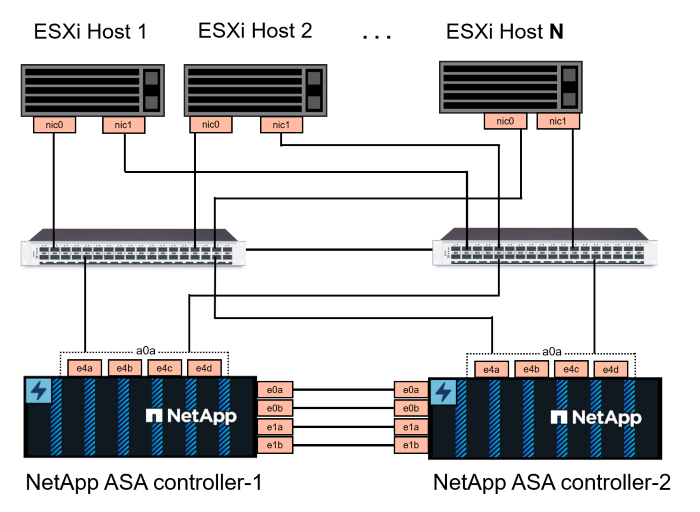

NetApp recommends fully redundant network designs for NVMe/TCP. The following diagram illustrates an example of a redundant configuration, providing fault tolerance for storage systems, switches, network adapters, and host systems. Refer to the NetApp SAN configuration reference for additional information.

For multipathing and failover across multiple paths, NetApp recommends having a minimum of two LIFs per storage node in separate Ethernet networks for all SVMs in NVMe/TCP configurations.
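The two-LIFs-per-node recommendation can be checked against a planned address layout before the SVM is created. The following is a minimal Python sketch, not a NetApp tool. The node names, IP addresses, and the `check_lif_redundancy` helper are hypothetical and serve only as an illustration.

```python
import ipaddress
from collections import defaultdict


def check_lif_redundancy(lifs):
    """Return the nodes that lack at least two LIFs in separate networks.

    `lifs` is a list of (node_name, "address/prefix") tuples describing the
    planned NVMe/TCP LIFs. All values used below are illustrative assumptions.
    """
    networks_per_node = defaultdict(set)
    for node, cidr in lifs:
        # Derive the network (for example 172.21.118.0/24) from the LIF address.
        networks_per_node[node].add(ipaddress.ip_interface(cidr).network)
    return sorted(node for node, nets in networks_per_node.items() if len(nets) < 2)


# Hypothetical plan: the second node has both LIFs in the same network.
planned_lifs = [
    ("ontap-node-01", "172.21.118.11/24"),
    ("ontap-node-01", "172.21.119.11/24"),
    ("ontap-node-02", "172.21.118.12/24"),
    ("ontap-node-02", "172.21.118.13/24"),
]

print(check_lif_redundancy(planned_lifs))  # prints ['ontap-node-02']
```

An empty list means every node in the plan has LIFs in at least two distinct networks.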

This documentation demonstrates the process of creating a new SVM and specifying the IP address information to create multiple LIFs for NVMe/TCP traffic. To add new LIFs to an existing SVM, refer to Create a LIF (network interface).

For additional information on NVMe design considerations for ONTAP storage systems, refer to NVMe configuration, support and limitations.

Deployment Steps

To create a VMFS datastore on a VCF workload domain using NVMe/TCP, complete the following steps.

Create SVM, LIFs and NVMe Namespace on ONTAP storage system

The following steps are performed in ONTAP System Manager.

Create the storage VM and LIFs

Complete the following steps to create an SVM together with multiple LIFs for NVMe/TCP traffic.

-

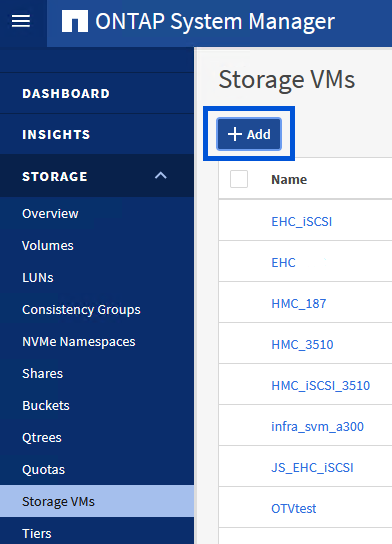

From ONTAP System Manager navigate to Storage VMs in the left-hand menu and click on + Add to start.

-

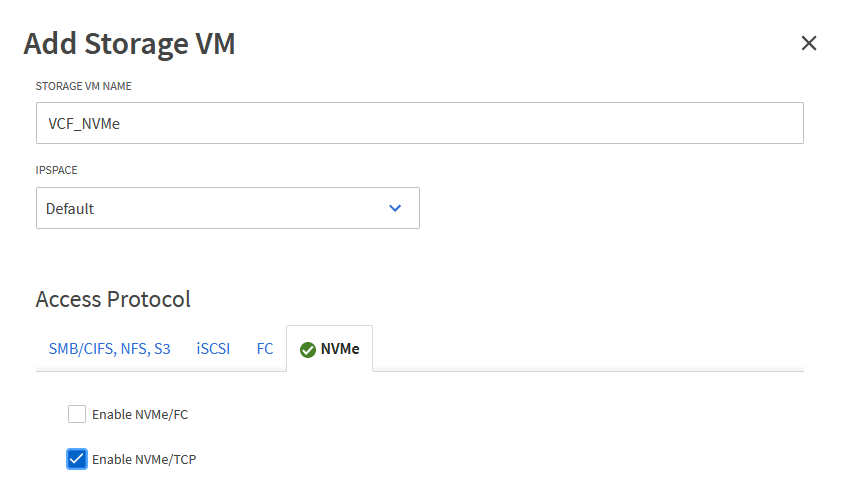

In the Add Storage VM wizard provide a Name for the SVM, select the IP Space and then, under Access Protocol, click on the NVMe tab and check the box to Enable NVMe/TCP.

-

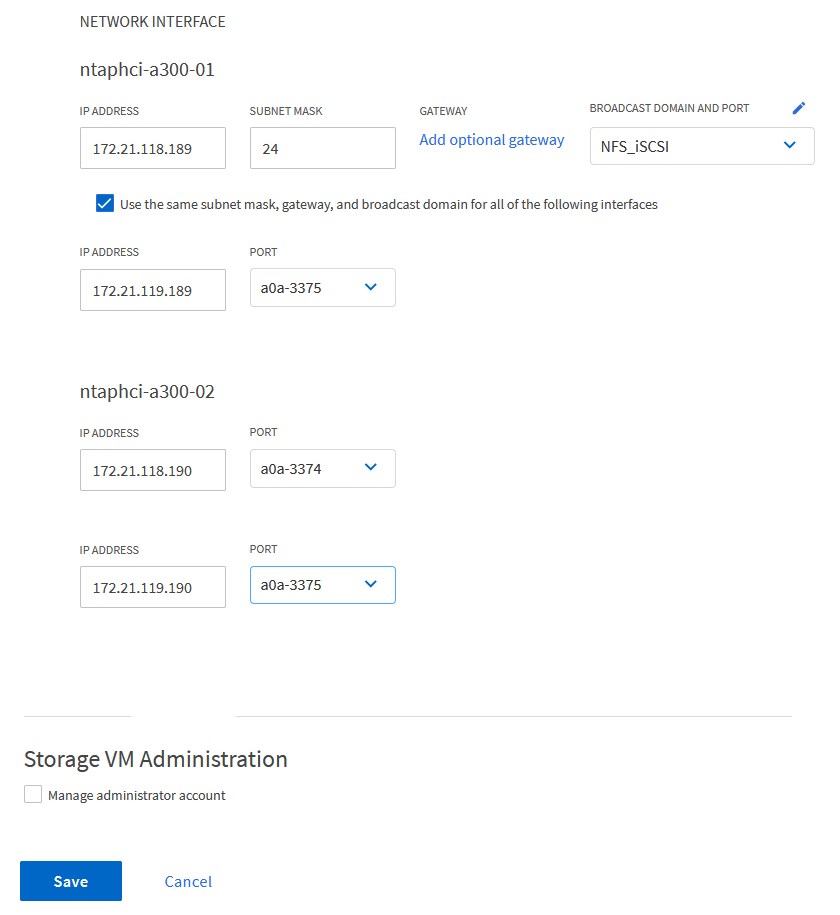

In the Network Interface section fill in the IP address, Subnet Mask, and Broadcast Domain and Port for the first LIF. For subsequent LIFs the checkbox may be enabled to use common settings across all remaining LIFs, or use separate settings.

For multipathing and failover across multiple paths, NetApp recommends having a minimum of two LIFs per storage node in separate Ethernet networks for all SVMs in NVMe/TCP configurations.

-

Choose whether to enable the Storage VM Administration account (for multi-tenancy environments) and click on Save to create the SVM.

Create the NVMe Namespace

NVMe namespaces are analogous to LUNs for iSCSI or FC. The NVMe namespace must be created before a VMFS datastore can be deployed from the vSphere Client. To create the NVMe namespace, the NVMe Qualified Name (NQN) must first be obtained from each ESXi host in the cluster. ONTAP uses the NQN to provide access control for the namespace.

Complete the following steps to create an NVMe Namespace:

-

Open an SSH session with an ESXi host in the cluster to obtain its NQN. Use the following command from the CLI:

esxcli nvme info get

An output similar to the following should be displayed:

Host NQN: nqn.2014-08.com.netapp.sddc:nvme:vcf-wkld-esx01

-

Record the NQN for each ESXi host in the cluster.

-

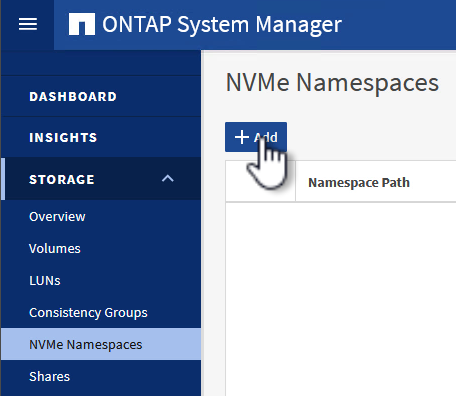

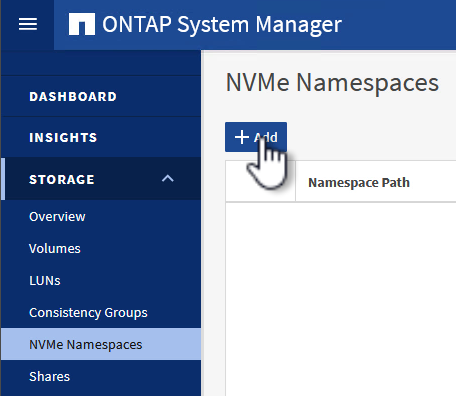

From ONTAP System Manager navigate to NVMe Namespaces in the left-hand menu and click on + Add to start.

-

On the Add NVMe Namespace page, fill in a name prefix, the number of namespaces to create, the size of the namespace, and the host operating system that will be accessing the namespace. In the Host NQN section, create a comma-separated list of the NQNs previously collected from the ESXi hosts that will be accessing the namespaces.

Click on More Options to configure additional items such as the snapshot protection policy. Finally, click on Save to create the NVMe Namespace.
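Collecting the NQNs by hand becomes error-prone on larger clusters. As a rough sketch, the Python snippet below extracts the NQN from saved `esxcli nvme info get` output and joins the values into the comma-separated form expected by the Host NQN field. The `parse_host_nqn` helper and the second host (`vcf-wkld-esx02`) are assumptions for illustration. How the command output is gathered from each host is left out.

```python
def parse_host_nqn(esxcli_output):
    """Extract the NQN from the text printed by `esxcli nvme info get`."""
    for line in esxcli_output.splitlines():
        # Split on the first colon only; the NQN itself contains colons.
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Host NQN":
            return value.strip()
    raise ValueError("no 'Host NQN' line found")


# Saved command output per host. The second entry is a hypothetical host.
outputs = {
    "vcf-wkld-esx01": "Host NQN: nqn.2014-08.com.netapp.sddc:nvme:vcf-wkld-esx01",
    "vcf-wkld-esx02": "Host NQN: nqn.2014-08.com.netapp.sddc:nvme:vcf-wkld-esx02",
}

# Build the comma-separated list for the Host NQN section.
host_nqn_field = ",".join(parse_host_nqn(text) for text in outputs.values())
print(host_nqn_field)
```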

Set up networking and NVMe software adapters on ESXi hosts

The following steps are performed on the VI workload domain cluster using the vSphere client. In this case, vCenter Single Sign-On is being used, so the vSphere client is common to both the management and workload domains.

Create Distributed Port Groups for NVMe/TCP traffic

Complete the following to create a new distributed port group for each NVMe/TCP network:

-

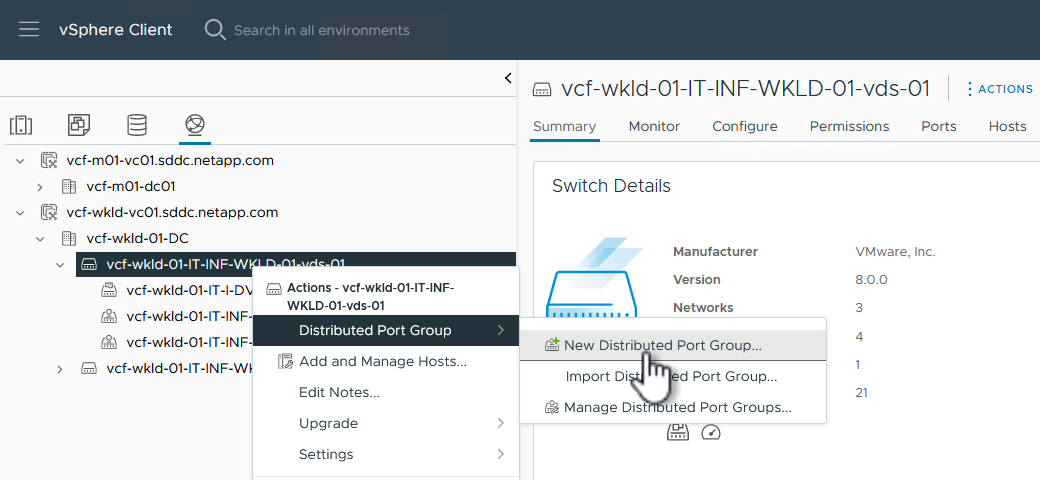

From the vSphere client, navigate to Inventory > Networking for the workload domain. Navigate to the existing Distributed Switch and choose the action to create New Distributed Port Group….

-

In the New Distributed Port Group wizard fill in a name for the new port group and click on Next to continue.

-

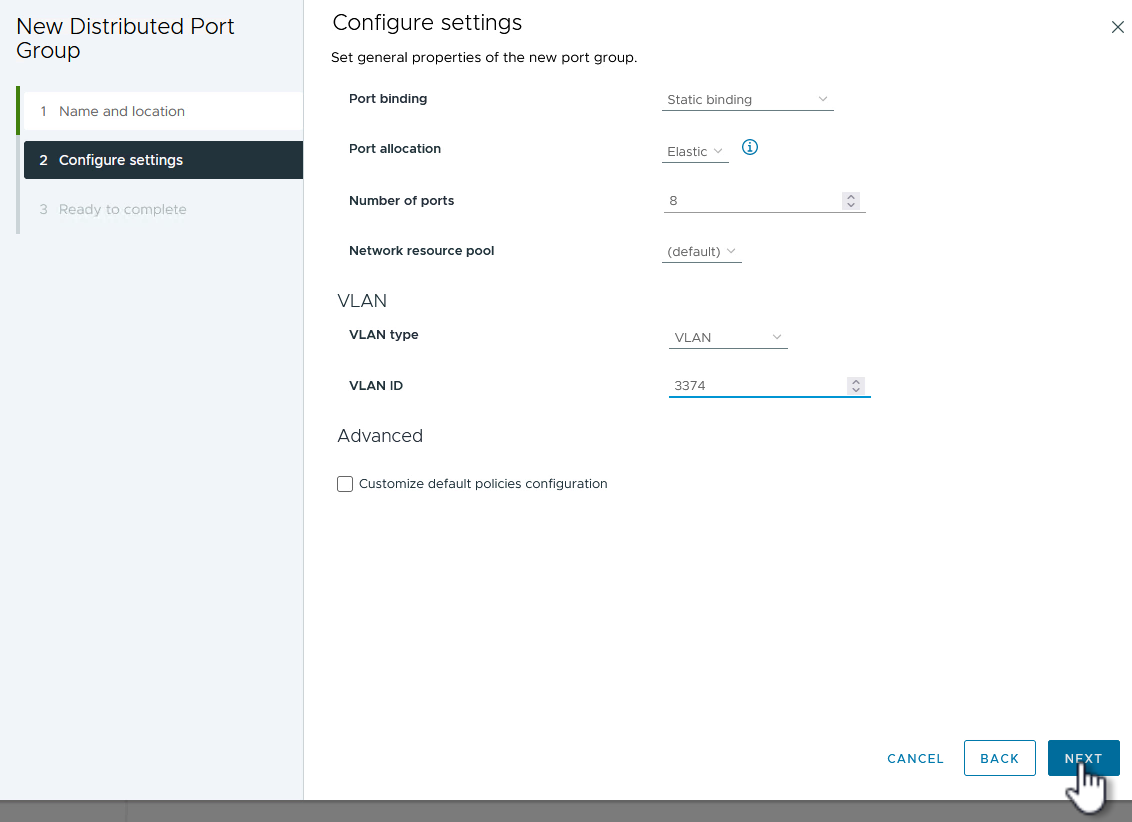

On the Configure settings page fill out all settings. If VLANs are being used be sure to provide the correct VLAN ID. Click on Next to continue.

-

On the Ready to complete page, review the changes and click on Finish to create the new distributed port group.

-

Repeat this process to create a distributed port group for the second NVMe/TCP network being used and ensure you have input the correct VLAN ID.

-

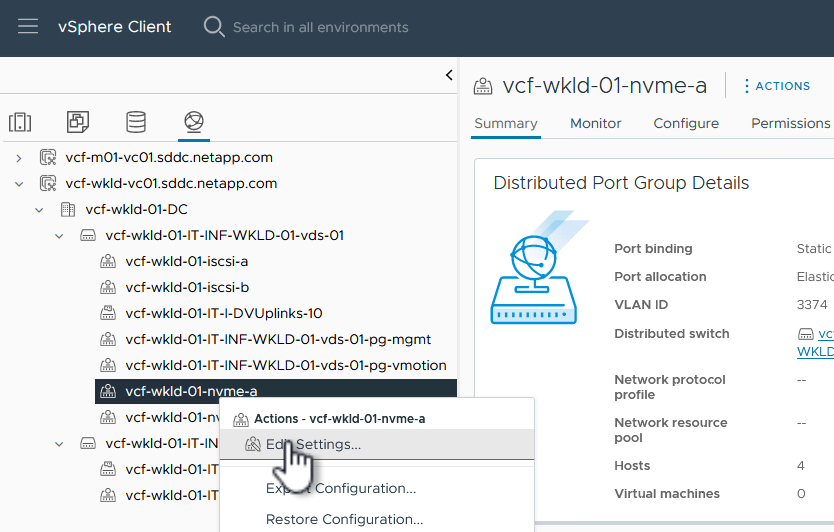

Once both port groups have been created, navigate to the first port group and select the action to Edit settings….

-

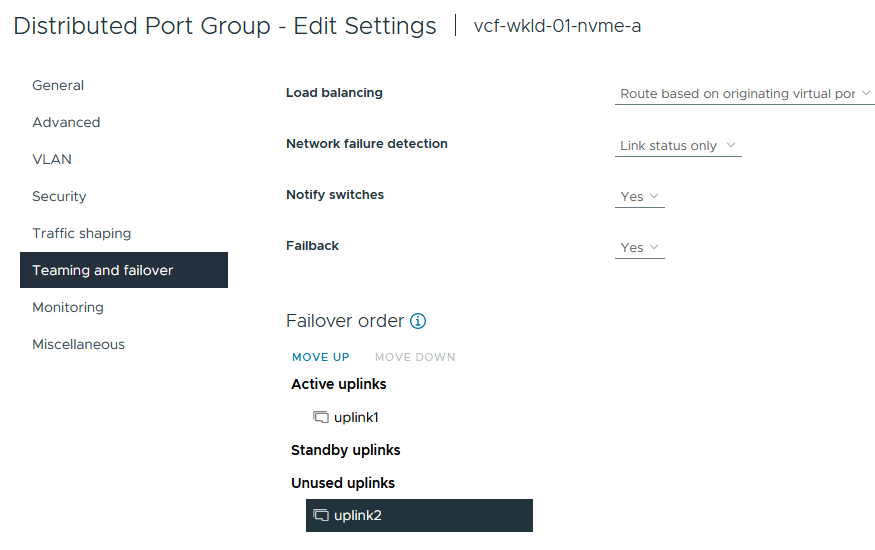

On the Distributed Port Group - Edit Settings page, navigate to Teaming and failover in the left-hand menu and click on uplink2 to move it down to Unused uplinks.

-

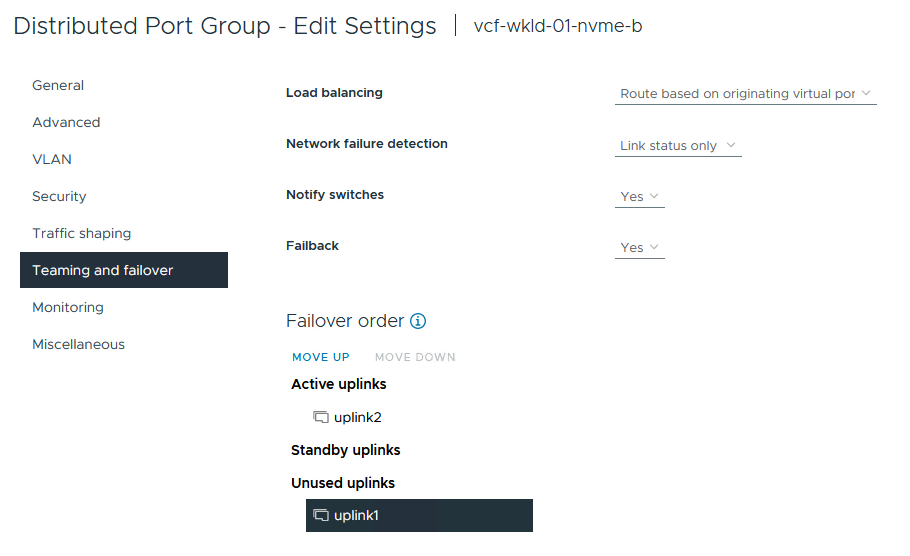

Repeat this step for the second NVMe/TCP port group. However, this time move uplink1 down to Unused uplinks.
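The teaming steps above pin each NVMe/TCP port group to a single, different uplink. The following Python sketch captures that rule as a simple check. The port group names and the `validate_teaming` helper are hypothetical, and the dictionary is a hand-written plan, not something read from vCenter.

```python
def validate_teaming(port_groups):
    """Check that each port group has one active uplink and no two share it."""
    problems = []
    seen_active = {}
    for name, teaming in port_groups.items():
        active = teaming["active"]
        if len(active) != 1:
            problems.append(f"{name}: expected exactly one active uplink, found {len(active)}")
            continue
        uplink = active[0]
        if uplink in seen_active:
            problems.append(f"{name}: {uplink} is already active on {seen_active[uplink]}")
        else:
            seen_active[uplink] = name
    return problems


# Hypothetical plan mirroring the steps above.
teaming_plan = {
    "nvme-tcp-a": {"active": ["uplink1"], "unused": ["uplink2"]},
    "nvme-tcp-b": {"active": ["uplink2"], "unused": ["uplink1"]},
}

print(validate_teaming(teaming_plan))  # an empty list means the plan is consistent
```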

Create VMkernel adapters on each ESXi host

Repeat this process on each ESXi host in the workload domain.

-

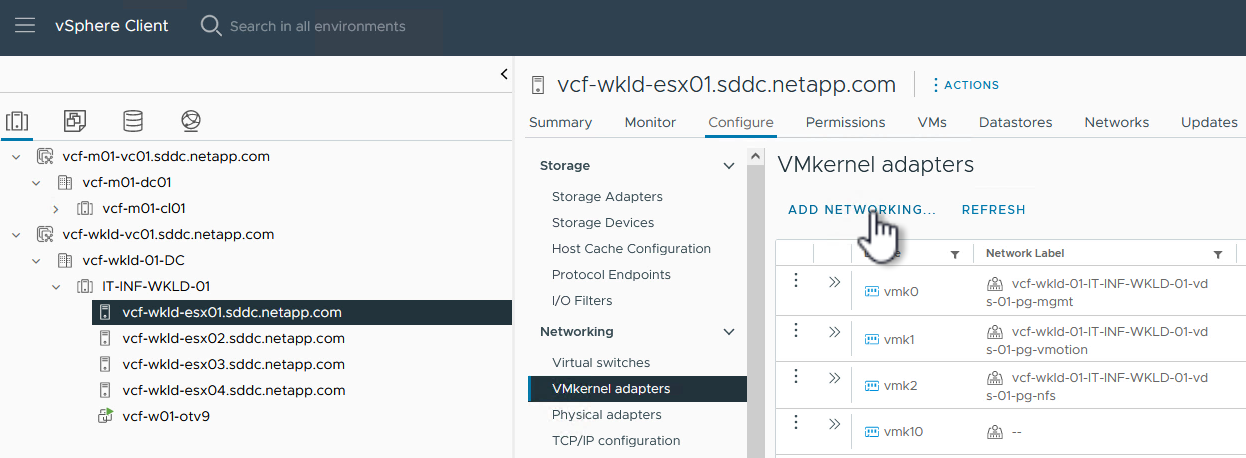

From the vSphere client navigate to one of the ESXi hosts in the workload domain inventory. From the Configure tab select VMkernel adapters and click on Add Networking… to start.

-

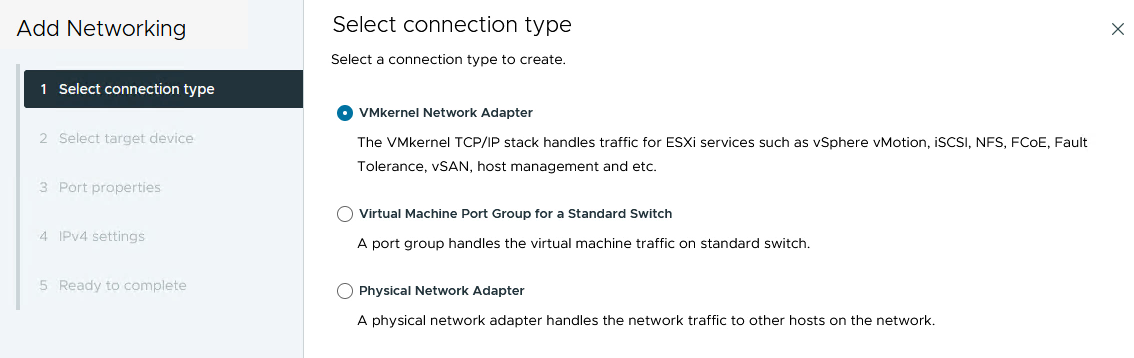

On the Select connection type window choose VMkernel Network Adapter and click on Next to continue.

-

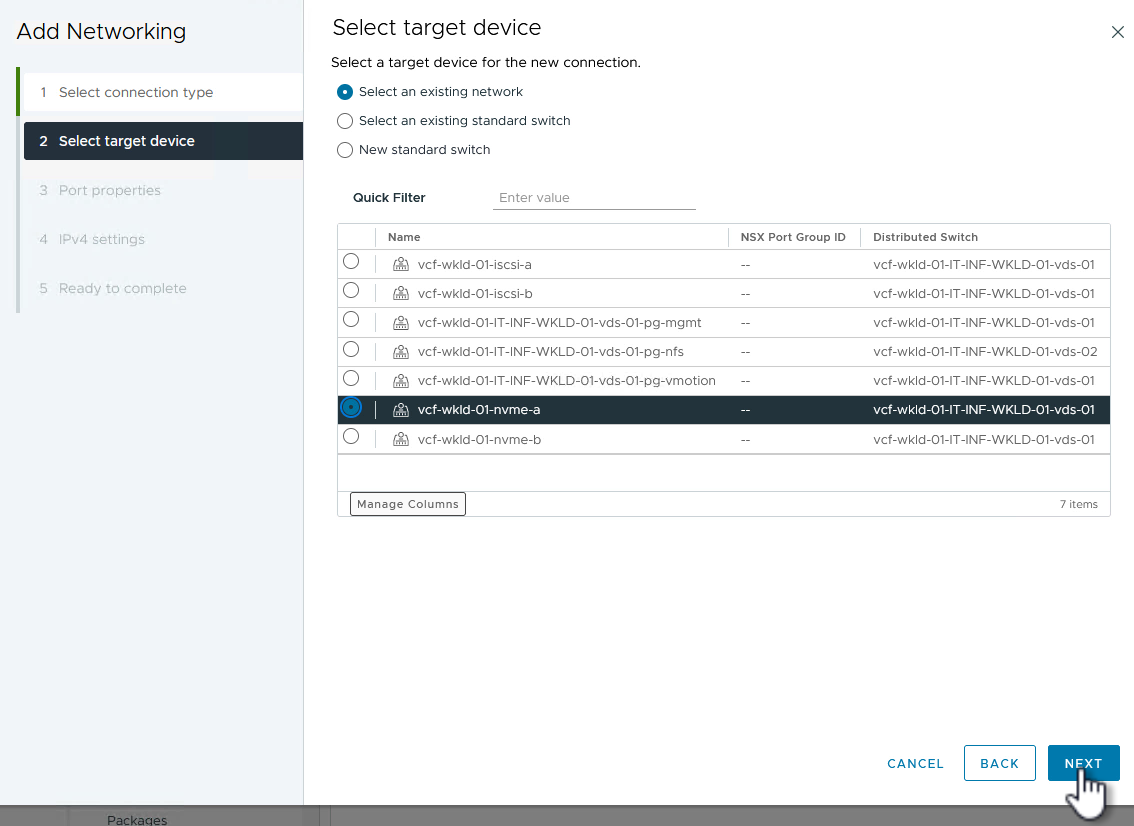

On the Select target device page, choose one of the distributed port groups for NVMe/TCP that was created previously.

-

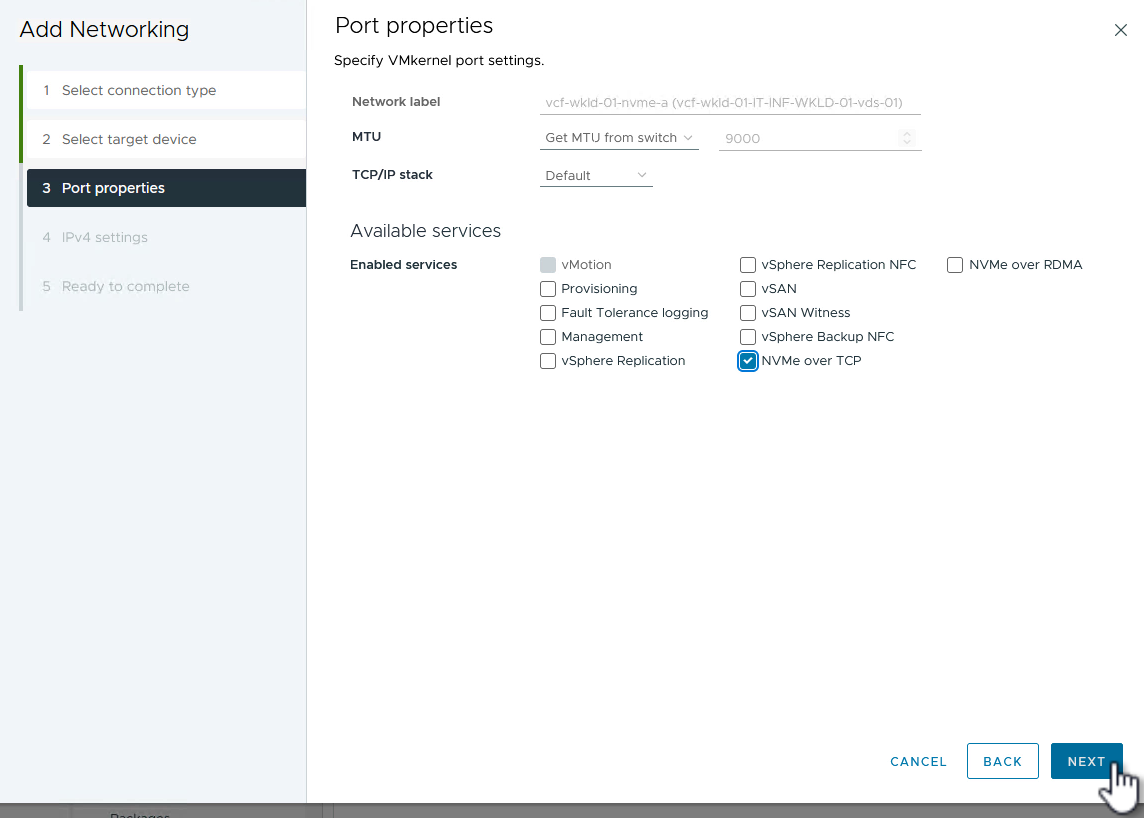

On the Port properties page click the box for NVMe over TCP and click on Next to continue.

-

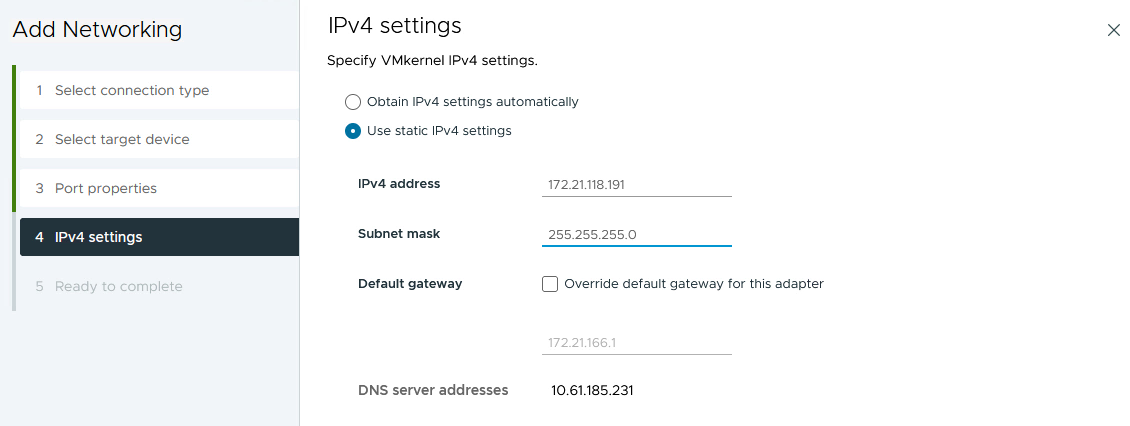

On the IPv4 settings page fill in the IP address, Subnet mask, and provide a new Gateway IP address (only if required). Click on Next to continue.

-

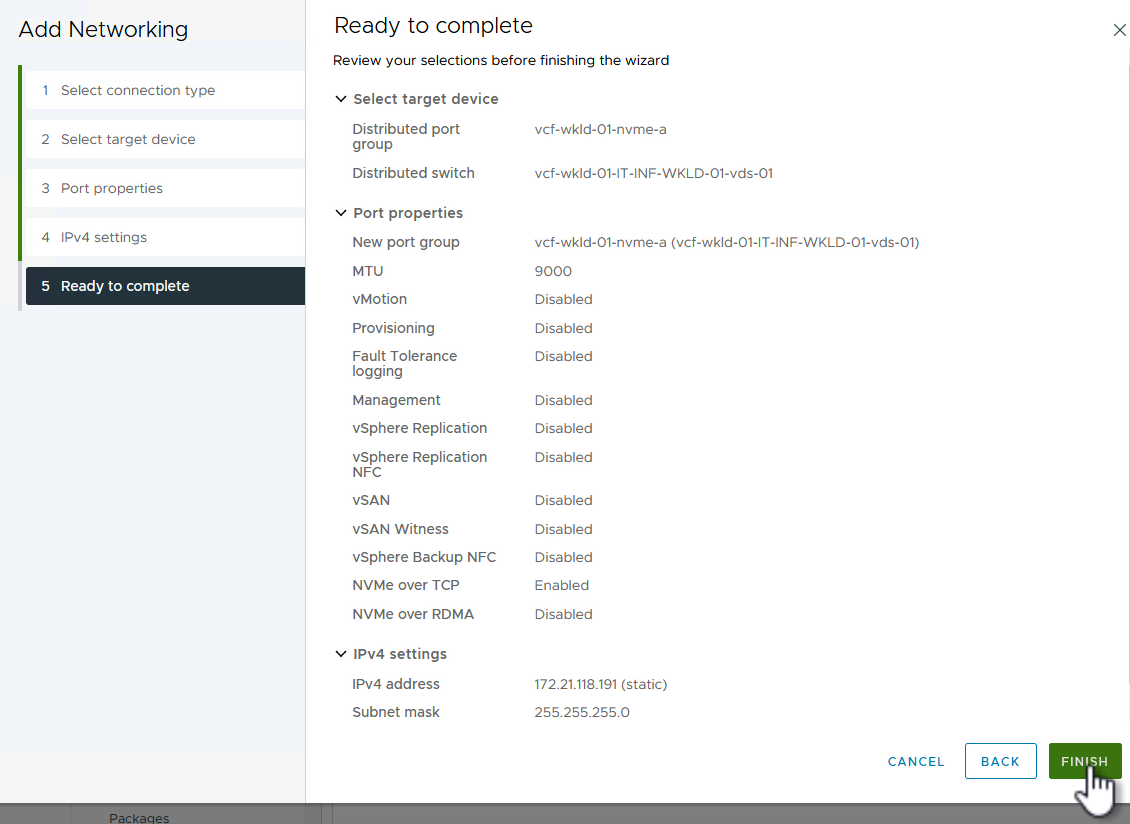

Review your selections on the Ready to complete page and click on Finish to create the VMkernel adapter.

-

Repeat this process to create a VMkernel adapter for the second NVMe/TCP network.

Add NVMe over TCP adapter

Each ESXi host in the workload domain cluster must have an NVMe over TCP software adapter installed for every established NVMe/TCP network dedicated to storage traffic.

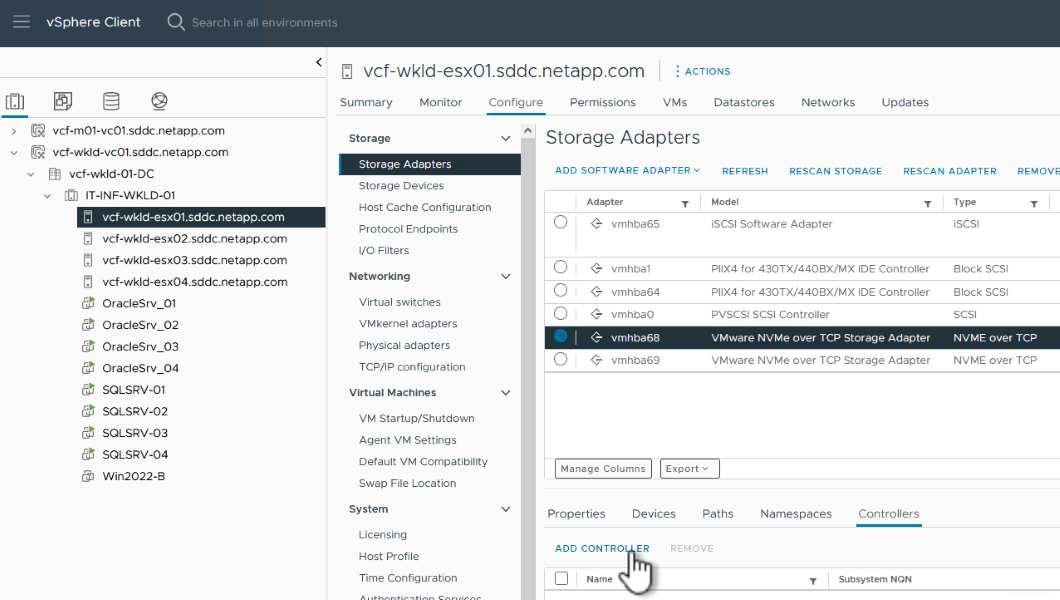

To install NVMe over TCP adapters and discover the NVMe controllers, complete the following steps:

-

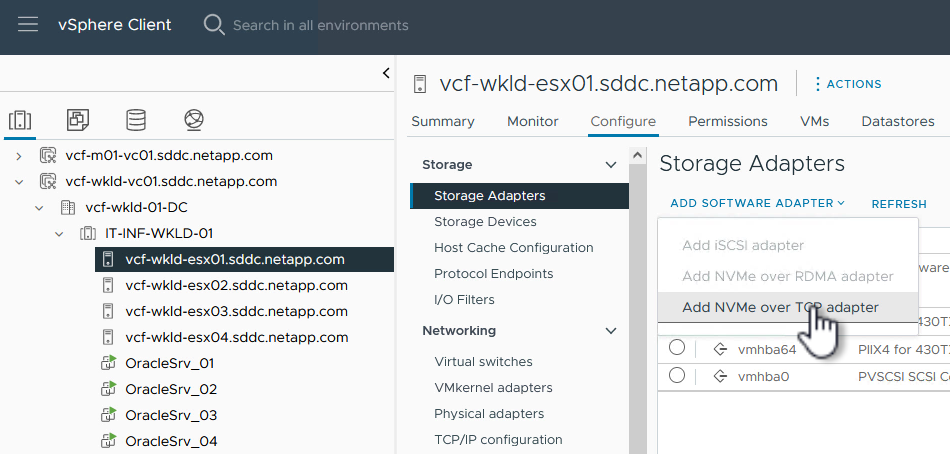

In the vSphere client navigate to one of the ESXi hosts in the workload domain cluster. From the Configure tab click on Storage Adapters in the menu and then, from the Add Software Adapter drop-down menu, select Add NVMe over TCP adapter.

-

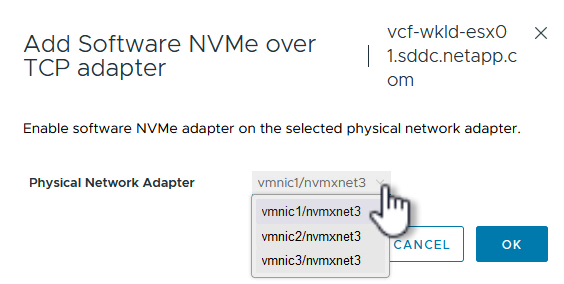

In the Add Software NVMe over TCP adapter window, access the Physical Network Adapter drop-down menu and select the correct physical network adapter on which to enable the NVMe adapter.

-

Repeat this process for the second network assigned to NVMe over TCP traffic, assigning the correct physical adapter.

-

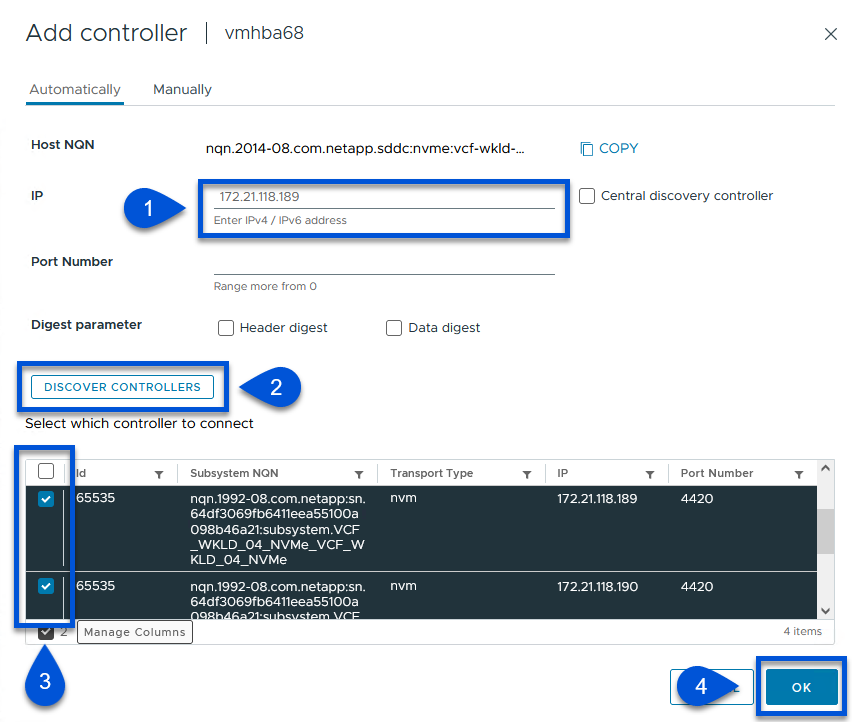

Select one of the newly installed NVMe over TCP adapters and, on the Controllers tab, select Add Controller.

-

In the Add controller window, select the Automatically tab and complete the following steps.

-

Fill in an IP address for one of the SVM logical interfaces on the same network as the physical adapter assigned to this NVMe over TCP adapter.

-

Click on the Discover Controllers button.

-

From the list of discovered controllers, click the check box for the two controllers with network addresses aligned with this NVMe over TCP adapter.

-

Click on the OK button to add the selected controllers.

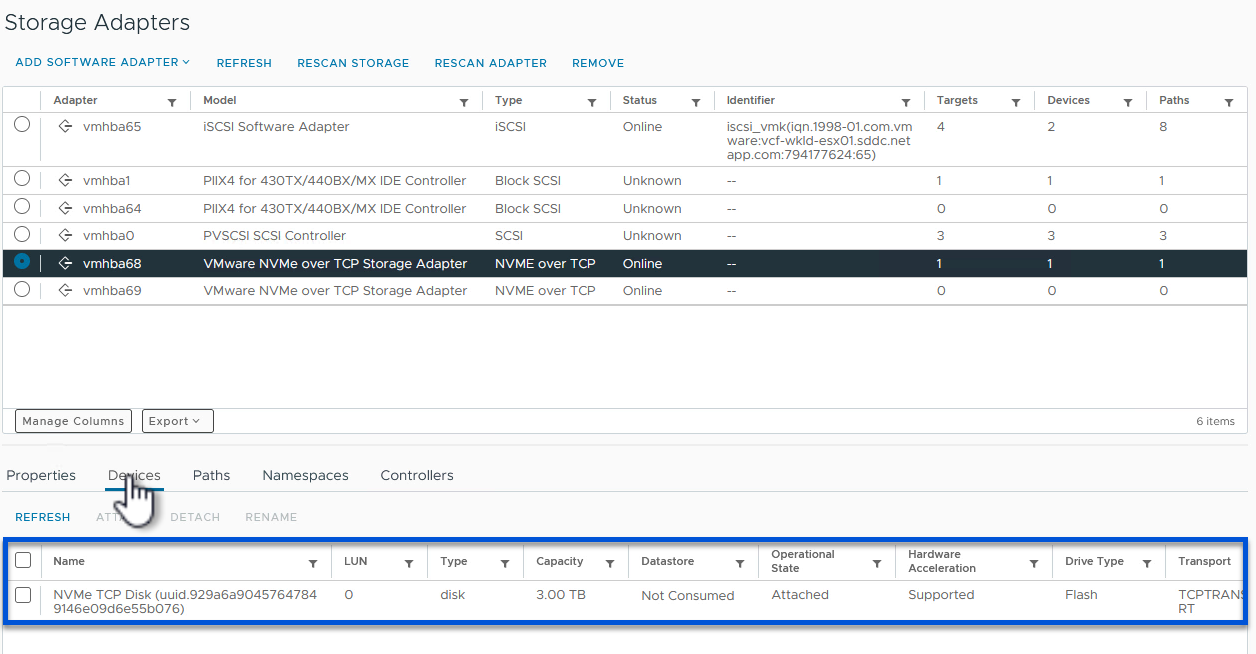

-

After a few seconds you should see the NVMe namespace appear on the Devices tab.

-

Repeat this procedure to create an NVMe over TCP adapter for the second network established for NVMe/TCP traffic.

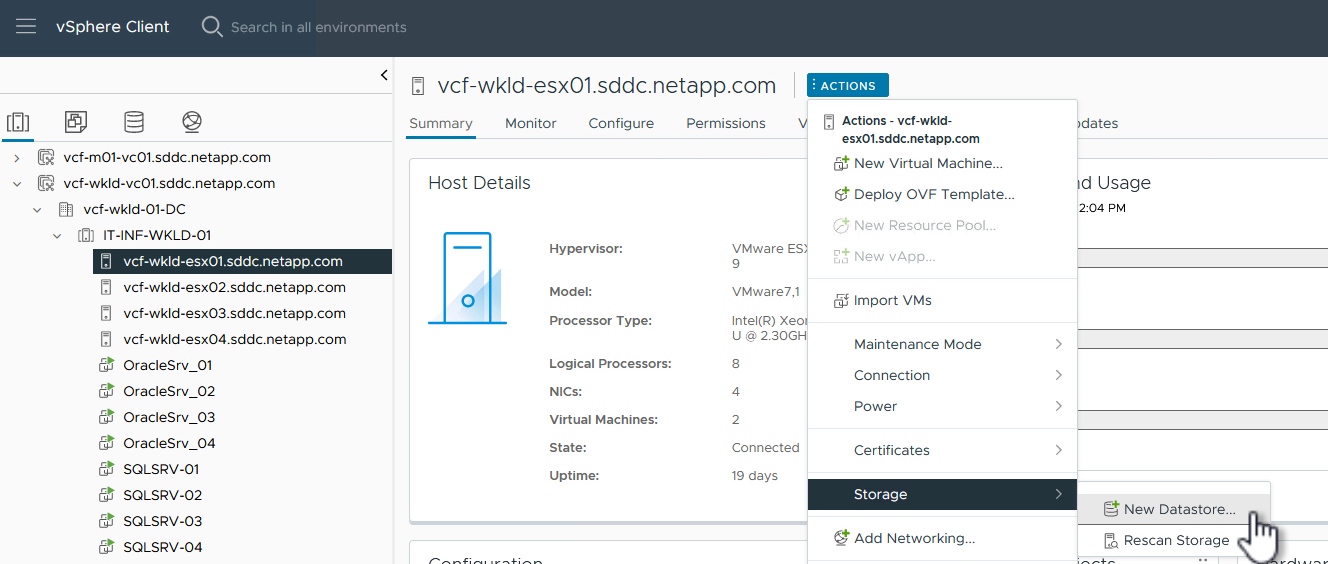
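When selecting controllers, only those whose addresses sit on the same network as the adapter should be checked. A minimal Python sketch of that selection rule follows. The addresses and the `controllers_on_adapter_network` helper are illustrative assumptions, not output from the vSphere client.

```python
import ipaddress


def controllers_on_adapter_network(vmk_cidr, discovered_addresses):
    """Return the discovered controller addresses in the adapter's network."""
    network = ipaddress.ip_interface(vmk_cidr).network
    return [
        address
        for address in discovered_addresses
        if ipaddress.ip_address(address) in network
    ]


# Hypothetical discovery result: two LIFs on each of two NVMe/TCP networks.
discovered = ["172.21.118.11", "172.21.118.12", "172.21.119.11", "172.21.119.12"]

# Hypothetical VMkernel address bound to the first NVMe over TCP adapter.
print(controllers_on_adapter_network("172.21.118.101/24", discovered))
# prints ['172.21.118.11', '172.21.118.12']
```

With two LIFs per network, the filter returns the two controllers to select for each adapter.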

Deploy NVMe over TCP datastore

To create a VMFS datastore on the NVMe namespace, complete the following steps:

-

In the vSphere client navigate to one of the ESXi hosts in the workload domain cluster. From the Actions menu select Storage > New Datastore….

-

In the New Datastore wizard, select VMFS as the type. Click on Next to continue.

-

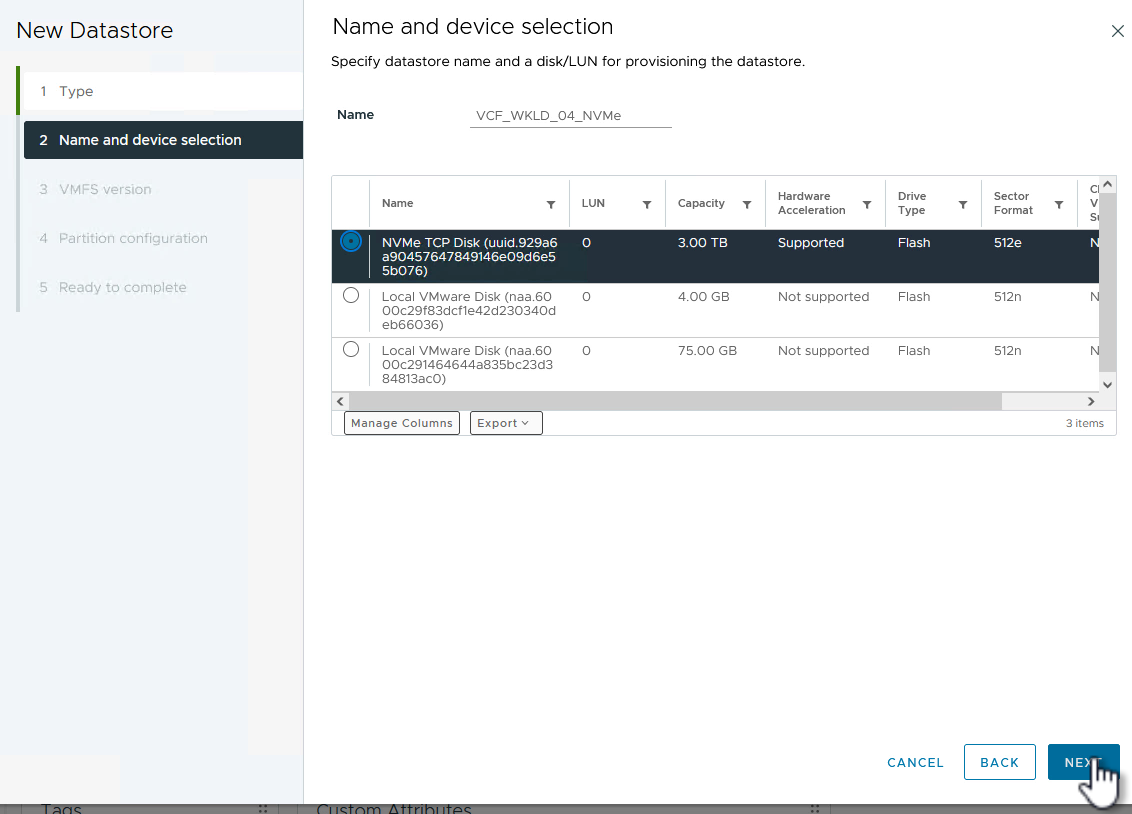

On the Name and device selection page, provide a name for the datastore and select the NVMe namespace from the list of available devices.

-

On the VMFS version page select the version of VMFS for the datastore.

-

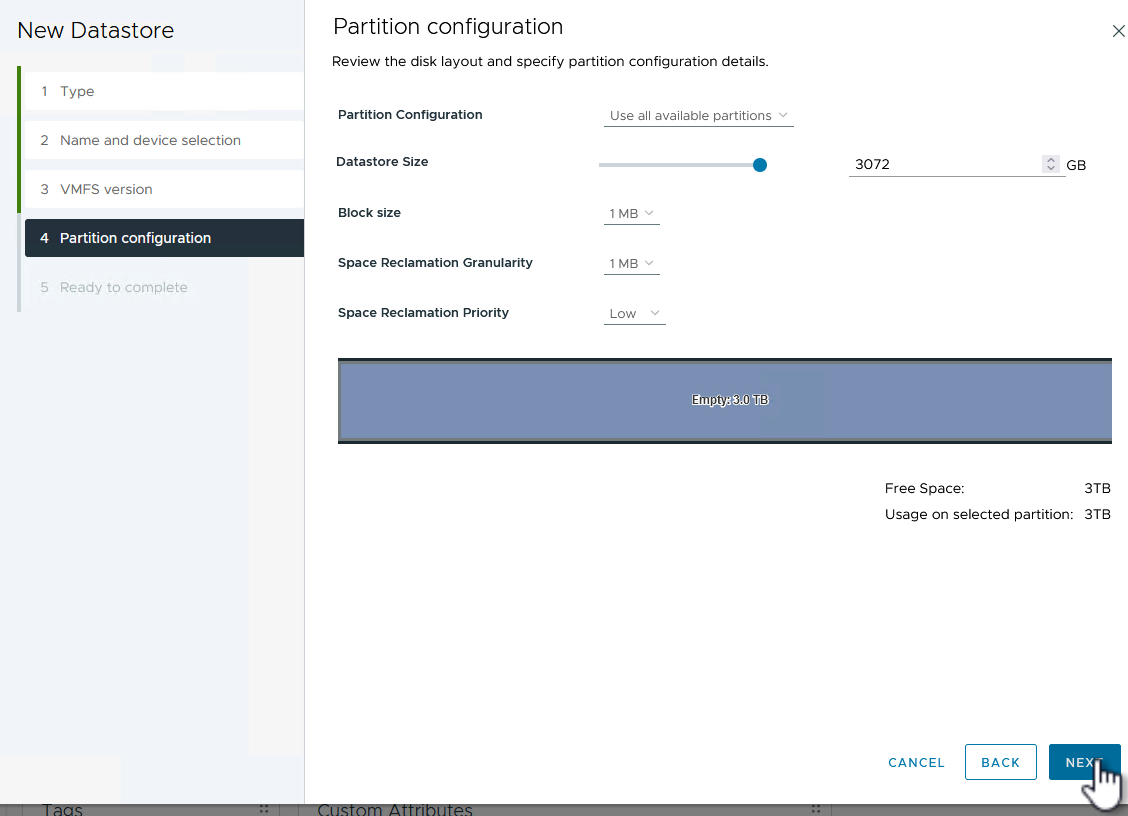

On the Partition configuration page, make any desired changes to the default partition scheme. Click on Next to continue.

-

On the Ready to complete page, review the summary and click on Finish to create the datastore.

-

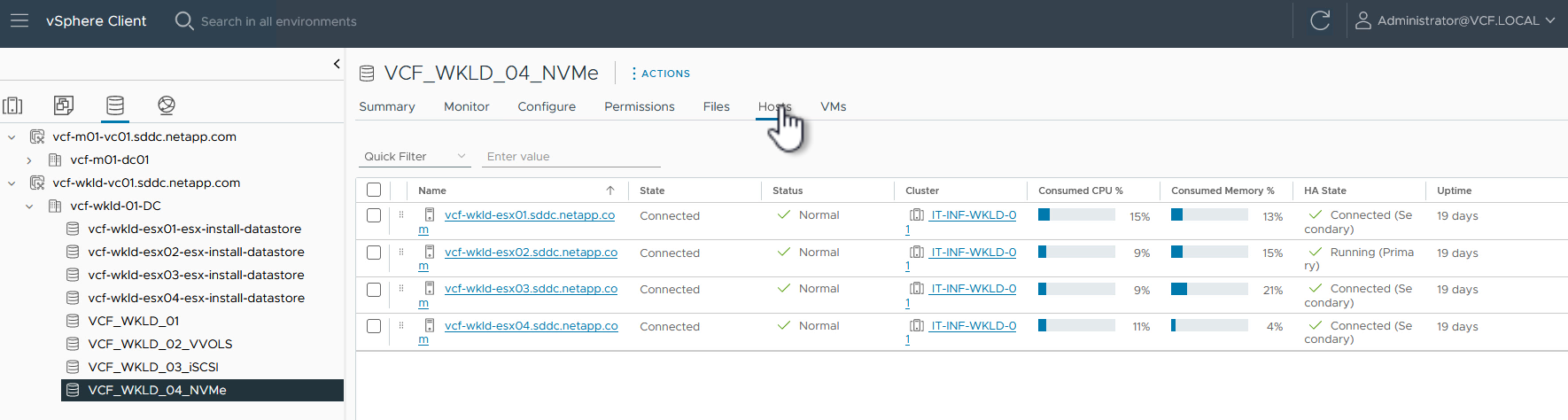

Navigate to the new datastore in inventory and click on the Hosts tab. If configured correctly, all ESXi hosts in the cluster should be listed and have access to the new datastore.

Additional information

For information on configuring ONTAP storage systems refer to the ONTAP 9 Documentation center.

For information on configuring VCF refer to VMware Cloud Foundation Documentation.