NetApp storage and Windows Server environment

As mentioned in the Overview, NetApp storage controllers provide a truly unified architecture that supports file, block, and object protocols. This includes SMB/CIFS, NFS, NVMe/TCP, NVMe/FC, iSCSI, FC (FCP), and S3, which together provide unified client and host access. The same storage controller can concurrently deliver block storage service in the form of SAN LUNs and file service as NFS and SMB/CIFS. ONTAP is also available as an All SAN Array (ASA) that optimizes host access through symmetric active-active multipathing with iSCSI and FCP, whereas the unified ONTAP systems use asymmetric active-active multipathing. In both modes, ONTAP uses ANA for NVMe over Fabrics (NVMe-oF) multipath management.

A NetApp storage controller running ONTAP can support the following workloads in a Windows Server environment:

- VMs hosted on continuously available SMB 3.0 shares
- VMs hosted on Cluster Shared Volume (CSV) LUNs running on iSCSI or FC
- SQL Server databases on SMB 3.0 shares
- SQL Server databases on NVMe-oF, iSCSI, or FC
- Other application workloads

In addition, NetApp storage efficiency features such as deduplication, NetApp FlexClone® copies, NetApp snapshot technology, thin provisioning, compression, and storage tiering provide significant value for workloads running on Windows Server.

ONTAP data management

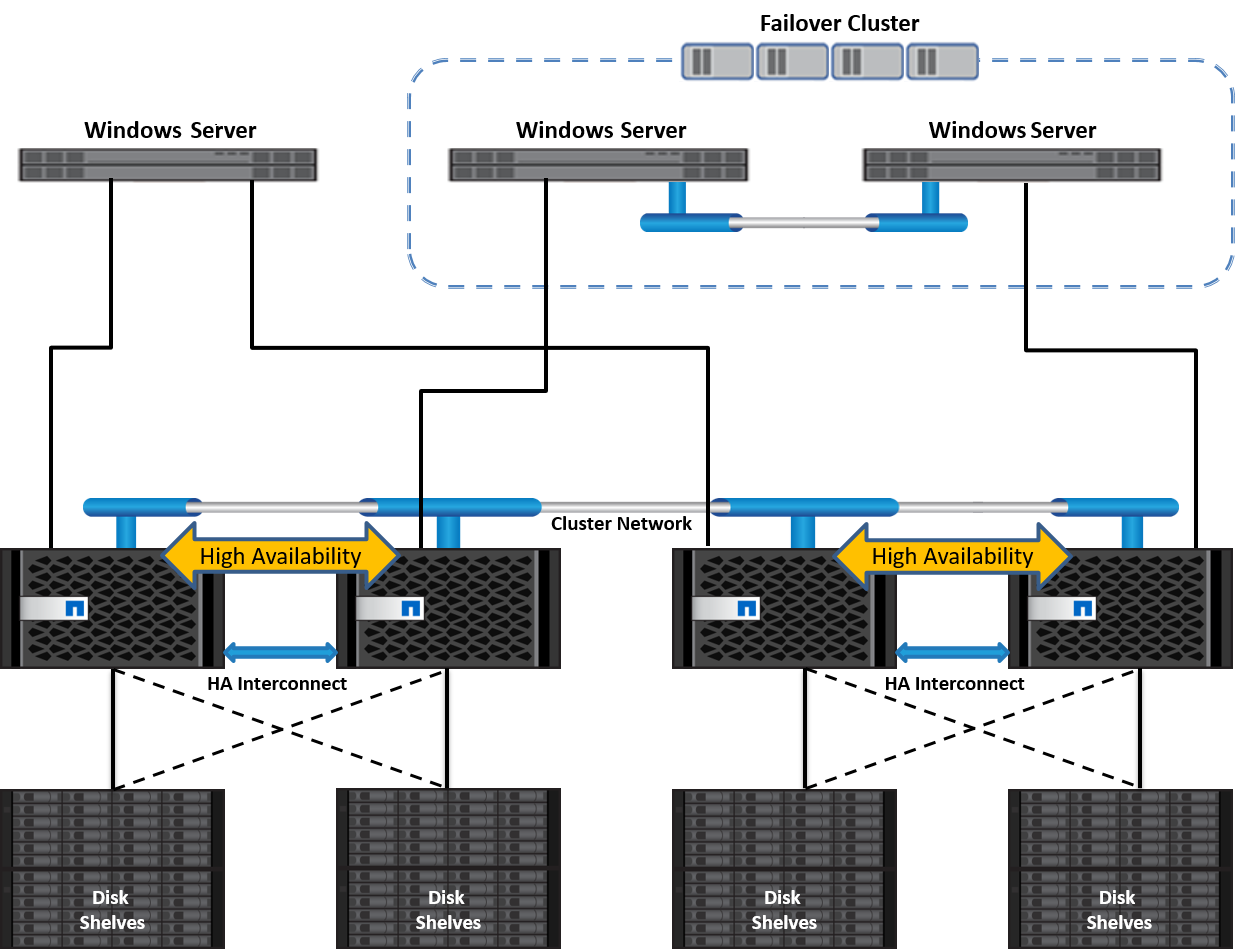

ONTAP is management software that runs on a NetApp storage controller. Referred to as a node, a NetApp storage controller is a hardware device with a processor, RAM, and NVRAM. The node can be connected to SATA, SAS, or SSD disk drives or a combination of those drives.

Multiple nodes are aggregated into a clustered system. The nodes in the cluster communicate with each other continuously to coordinate cluster activities. The nodes can also move data transparently from node to node by using redundant paths to a dedicated cluster interconnect consisting of two 10Gb Ethernet switches. The nodes in the cluster can take over one another to provide high availability during any failover scenarios. Clusters are administered on a whole-cluster rather than a per-node basis, and data is served from one or more storage virtual machines (SVMs). A cluster must have at least one SVM to serve data.

The basic unit of a cluster is the node, and nodes are added to the cluster as part of a high-availability (HA) pair. HA pairs enable high availability by communicating with each other over an HA interconnect (separate from the dedicated cluster interconnect) and by maintaining redundant connections to the HA pair's disks. Disks are not shared between HA pairs, although shelves might contain disks that belong to either member of an HA pair. The following figure depicts a NetApp storage deployment in a Windows Server environment.

Storage Virtual Machines

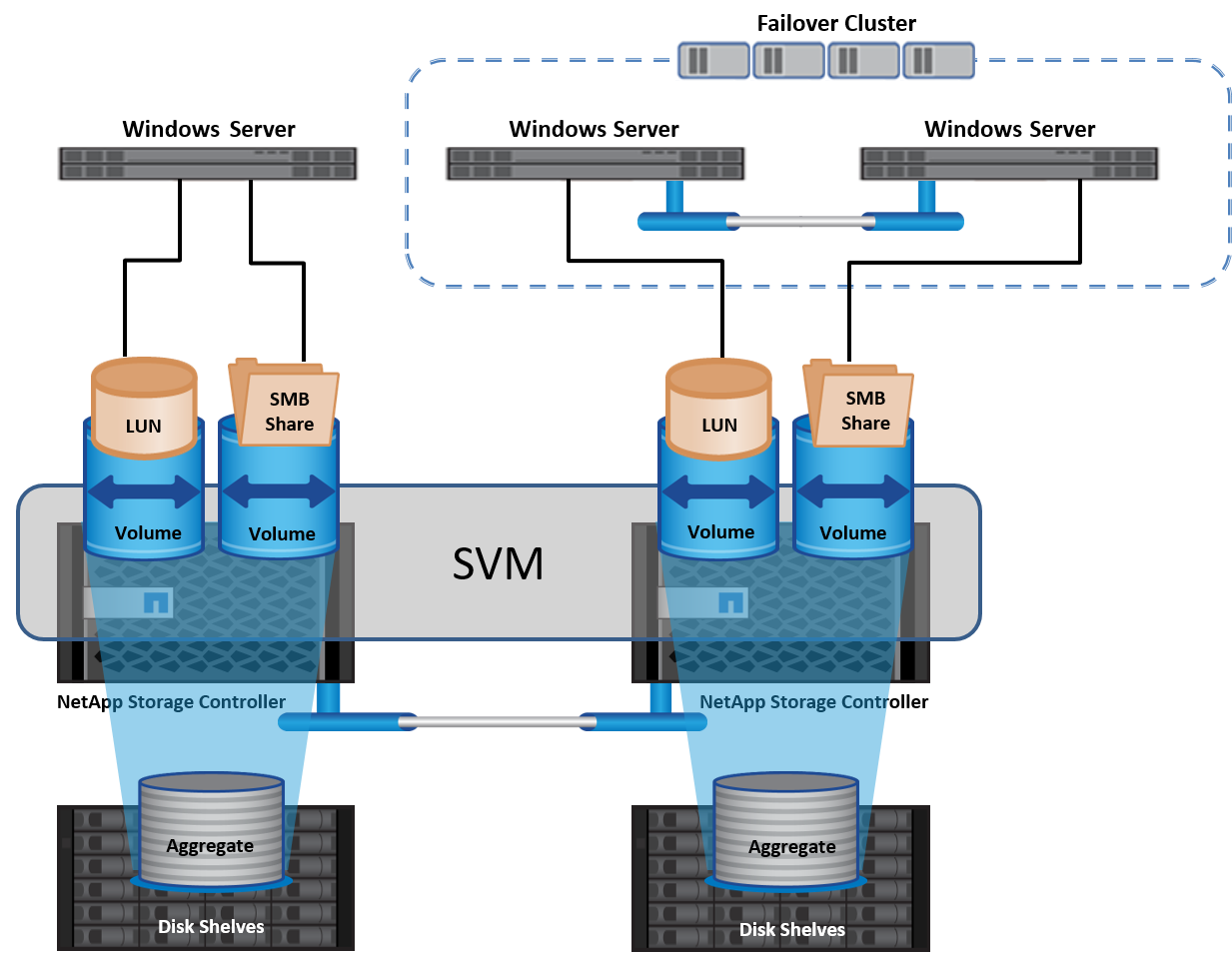

An ONTAP SVM is a logical storage server that provides data access to LUNs and/or a NAS namespace from one or more logical interfaces (LIFs). The SVM is thus the basic unit of storage segmentation that enables secure multitenancy in ONTAP. Each SVM is configured to own storage volumes provisioned from a physical aggregate and LIFs assigned either to a physical Ethernet network or to FC target ports.

Logical disks (LUNs) or CIFS shares are created inside an SVM's volumes and are mapped to Windows hosts and clusters to provide them with storage space, as shown in the following figure. SVMs are node independent and cluster based; they can use physical resources such as volumes or network ports anywhere in the cluster.
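The ownership relationships described above can be pictured as a small data model. This is only an illustrative sketch: the class names, field names, and sample values (`svm_hyperv`, `aggr1_node1`, `e0c`, and so on) are hypothetical and are not ONTAP object names or API types.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Volume:
    name: str
    aggregate: str  # physical aggregate the volume is provisioned from
    node: str       # node that currently hosts that aggregate


@dataclass
class Lif:
    name: str
    home_node: str
    home_port: str  # physical Ethernet port or FC target port
    protocol: str   # for example "iscsi", "fcp", or "cifs"


@dataclass
class Svm:
    name: str
    volumes: List[Volume] = field(default_factory=list)
    lifs: List[Lif] = field(default_factory=list)

    def nodes_in_use(self) -> Set[str]:
        """Return every node whose volumes or ports this SVM uses."""
        return {v.node for v in self.volumes} | {l.home_node for l in self.lifs}


# Hypothetical SVM whose volumes and LIFs are spread across two nodes.
svm = Svm(
    name="svm_hyperv",
    volumes=[
        Volume("vm_vol1", "aggr1_node1", "node1"),
        Volume("sql_vol1", "aggr1_node2", "node2"),
    ],
    lifs=[
        Lif("iscsi_lif1", "node1", "e0c", "iscsi"),
        Lif("iscsi_lif2", "node2", "e0c", "iscsi"),
    ],
)
print(sorted(svm.nodes_in_use()))
```

The point of the sketch is that the SVM, not the node, is the owner: a single SVM can reference volumes and ports on several nodes of the cluster.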

Provisioning NetApp storage for Windows Server

Storage can be provisioned to Windows Server in both SAN and NAS environments. In a SAN environment, the storage is presented as disks backed by LUNs on NetApp volumes (block storage). In a NAS environment, the storage is presented as CIFS/SMB shares on NetApp volumes (file storage). These disks and shares can be used in Windows Server as follows:

- Storage for Windows Server hosts for application workloads
- Storage for Nano Server and containers
- Storage for individual Hyper-V hosts to store VMs
- Shared storage for Hyper-V clusters in the form of CSVs to store VMs
- Storage for SQL Server databases
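As a rough illustration of the two provisioning paths, the sketch below assembles ONTAP CLI command strings for a LUN (SAN) and a continuously available SMB share (NAS). The helper function names and all object names (`svm1`, `vol1`, `lun1`, `vmshare`) are hypothetical, and the command options shown are assumptions to be checked against the command reference for your ONTAP release before use.

```python
def lun_create_command(svm: str, volume: str, lun: str, size: str,
                       os_type: str = "windows_2008") -> str:
    """Build an ONTAP CLI string that creates a LUN inside a volume (SAN)."""
    path = f"/vol/{volume}/{lun}"
    return (f"lun create -vserver {svm} -path {path} "
            f"-size {size} -ostype {os_type}")


def cifs_share_create_command(svm: str, share: str, junction_path: str,
                              continuously_available: bool = False) -> str:
    """Build an ONTAP CLI string that creates an SMB share (NAS)."""
    cmd = (f"vserver cifs share create -vserver {svm} "
           f"-share-name {share} -path {junction_path}")
    if continuously_available:
        # Assumed property list for shares that host VMs or databases.
        cmd += " -share-properties oplocks,continuously-available"
    return cmd


# Hypothetical names, for illustration only.
print(lun_create_command("svm1", "vol1", "lun1", "100GB"))
print(cifs_share_create_command("svm1", "vmshare", "/vmshare",
                                continuously_available=True))
```

The functions only build strings; they do not contact a storage system, so the output can be reviewed before it is run over SSH or pasted into a CLI session.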

Managing NetApp storage

To connect, configure, and manage NetApp storage from Windows Server 2016, use one of the following methods:

- Secure Shell (SSH). Use any SSH client on Windows Server to run NetApp CLI commands.
- System Manager. This is NetApp's GUI-based manageability product.
- NetApp PowerShell Toolkit. Use this PowerShell module to automate storage tasks and implement custom scripts and workflows.
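For the SSH method, a minimal sketch of running a single ONTAP CLI command from a script might look like the following. It assumes an OpenSSH-compatible `ssh` client is available on the Windows Server host and that key-based authentication is already configured; the function names, cluster address, and user name are hypothetical.

```python
import subprocess
from typing import List


def build_ssh_command(cluster: str, user: str, cli_command: str) -> List[str]:
    """Return the argument list for running one ONTAP CLI command over SSH."""
    return ["ssh", f"{user}@{cluster}", cli_command]


def run_ontap_cli(cluster: str, user: str, cli_command: str,
                  timeout: int = 30) -> str:
    """Run the command and return its standard output.

    Raises subprocess.CalledProcessError if ssh exits with a nonzero status.
    """
    result = subprocess.run(
        build_ssh_command(cluster, user, cli_command),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,
    )
    return result.stdout


if __name__ == "__main__":
    # Hypothetical cluster management address and account.
    print(build_ssh_command("cluster1.example.com", "admin", "volume show"))
```

Passing the command as an argument list rather than a single shell string avoids quoting problems on the local host.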

NetApp PowerShell Toolkit

NetApp PowerShell Toolkit (PSTK) is a PowerShell module that provides end-to-end automation and enables storage administration of ONTAP. The ONTAP module contains over 2,000 cmdlets and helps with the administration of NetApp AFF, FAS, commodity hardware, and cloud resources.

Things to remember

- NetApp does not support Windows Server storage spaces. Storage spaces are used only for JBOD (just a bunch of disks) and do not work with any type of RAID (direct-attached storage [DAS] or SAN).
- Clustered storage pools in Windows Server are not supported by ONTAP.
- NetApp supports the shared virtual hard disk format (VHDX) for guest clustering in Windows SAN environments.
- Windows Server does not support creating storage pools using iSCSI or FC LUNs.

Further reading

- For more information about the NetApp PowerShell Toolkit, visit the NetApp Support Site.
- For information about NetApp PowerShell Toolkit best practices, see TR-4475: NetApp PowerShell Toolkit Best Practices Guide.

Networking best practices

Ethernet networks can be broadly segregated into the following groups:

- A client network for the VMs
- One or more storage networks (iSCSI or SMB connecting to the storage systems)
- A cluster communication network (heartbeat and other communication between the nodes of the cluster)
- A management network (to monitor and troubleshoot the system)
- A migration network (for host live migration)
- VM replication (a Hyper-V Replica)

Best practices

- NetApp recommends having dedicated physical ports for each of the preceding functionalities for network isolation and performance.
- For each of the preceding network requirements (except for the storage requirements), multiple physical network ports can be aggregated to distribute load or provide fault tolerance.
- NetApp recommends having a dedicated virtual switch created on the Hyper-V host for guest storage connection within the VM.
- Make sure that the Hyper-V host and guest iSCSI data paths use different physical ports and virtual switches for secure isolation between the guest and the host.
- NetApp recommends avoiding NIC teaming for iSCSI NICs.
- NetApp recommends using ONTAP multipath input/output (MPIO) configured on the host for storage purposes.
- NetApp recommends using MPIO within a guest VM if using guest iSCSI initiators. Avoid MPIO within the guest if you use pass-through disks. In this case, installing MPIO on the host should suffice.
- NetApp recommends not applying QoS policies to the virtual switch assigned for the storage network.
- NetApp recommends not using automatic private IP addressing (APIPA) on physical NICs because APIPA is nonroutable and not registered in the DNS.
- NetApp recommends turning on jumbo frames for CSV, iSCSI, and live migration networks to increase the throughput and reduce CPU cycles.
- NetApp recommends unchecking the option Allow Management Operating System to Share This Network Adapter for the Hyper-V virtual switch to create a dedicated network for the VMs.
- NetApp recommends creating redundant network paths (multiple switches) for live migration and the iSCSI network to provide resiliency and QoS.
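The jumbo frame recommendation above can be made concrete with some back-of-the-envelope arithmetic. The sketch below compares a standard 1500-byte MTU with a 9000-byte MTU for a 1 MiB transfer. It is a simplification that assumes plain TCP over IPv4 with no header options and ignores iSCSI or SMB protocol headers and VLAN tags; the constant and function names are illustrative only.

```python
import math

# Per-frame bytes on the wire outside the MTU:
# preamble and start delimiter (8) + Ethernet header (14) + FCS (4)
# + inter-frame gap (12).
ETH_WIRE_OVERHEAD = 38
# IPv4 header (20) + TCP header (20), both without options.
IP_TCP_HEADERS = 40


def frames_needed(payload_bytes: int, mtu: int) -> int:
    """Number of frames required to carry the payload at a given MTU."""
    return math.ceil(payload_bytes / (mtu - IP_TCP_HEADERS))


def wire_efficiency(mtu: int) -> float:
    """Fraction of the bytes on the wire that are application payload."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_WIRE_OVERHEAD)


for mtu in (1500, 9000):
    print(mtu, frames_needed(1024 * 1024, mtu), round(wire_efficiency(mtu), 4))
```

Under these assumptions, the larger MTU moves the same data in roughly one sixth as many frames, which is where most of the CPU saving comes from, while the gain in wire efficiency is a few percentage points. Jumbo frames only help when every device in the path, including host NICs, switches, and storage ports, is configured for the larger MTU.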