Learn about pNFS architecture in ONTAP

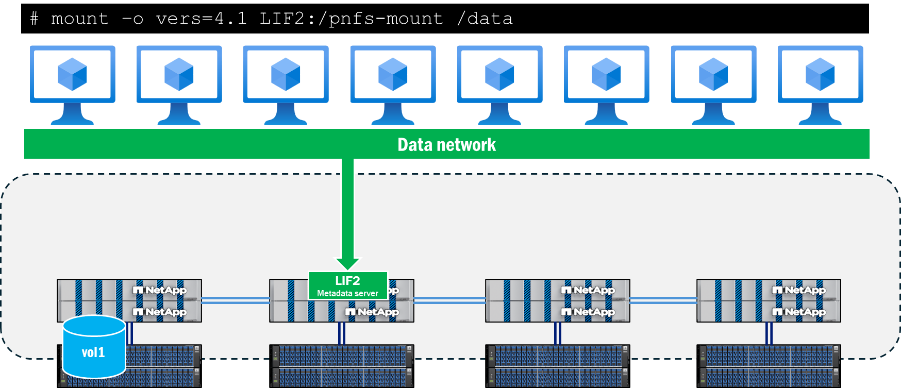

The pNFS architecture consists of three main components: an NFS client that supports pNFS, a metadata server that provides a dedicated path for metadata operations, and a data server that provides localized paths to files.

Client access to pNFS requires network connectivity to both the data and metadata paths available on the NFS server. If the NFS server contains network interfaces that the clients cannot reach, the server might advertise data paths that are inaccessible to the client, which can cause outages.
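One way to catch the reachability problem described above is to probe each data interface from the client before relying on pNFS. The following is a minimal sketch, not an ONTAP tool: the function names are hypothetical, and it assumes the NFS service listens on the standard TCP port 2049 and that a successful TCP connect is an adequate stand-in for reachability.

```python
import socket

NFS_PORT = 2049  # standard NFS TCP port (assumed to be the port in use)


def is_reachable(host: str, port: int = NFS_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and routing failures.
        return False


def unreachable_lifs(lif_addresses, port: int = NFS_PORT, timeout: float = 2.0):
    """Return the addresses that did not accept a TCP connection."""
    return [addr for addr in lif_addresses if not is_reachable(addr, port, timeout)]
```

Calling `unreachable_lifs([...])` with the data interface addresses of the SVM returns the ones the client cannot connect to; an empty list suggests every advertised path should be usable from this client.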

Metadata server

The metadata server in pNFS is established when a client mounts using NFSv4.1 or later and pNFS is enabled on the NFS server. From that point on, all metadata traffic is sent over this connection and remains on it for the duration of the mount, even if the interface is migrated to another node.

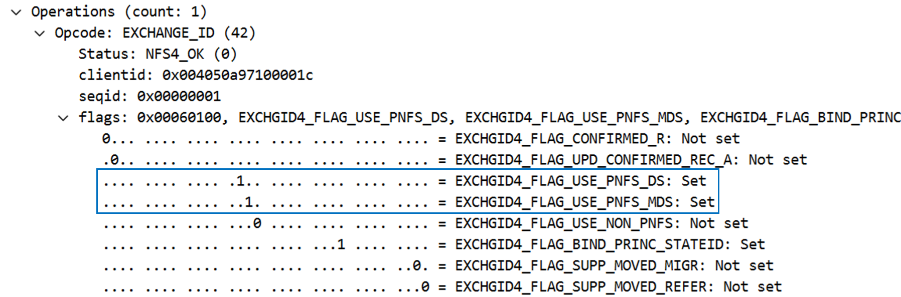

pNFS support is determined during the mount call, specifically in the EXCHANGE_ID calls. This can be seen in a packet capture below the NFS operations as a flag. When the pNFS flags EXCHGID4_FLAG_USE_PNFS_DS and EXCHGID4_FLAG_USE_PNFS_MDS are set to 1, then the interface is eligible for both data and metadata operations in pNFS.
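To make the flag check concrete, the following sketch decodes the pNFS role bits from the flags word of an EXCHANGE_ID reply. The constant values are those defined for EXCHANGE_ID in RFC 5661; the function name `pnfs_roles` and the returned dictionary layout are illustrative assumptions.

```python
# pNFS role flags as defined for EXCHANGE_ID in RFC 5661.
EXCHGID4_FLAG_USE_NON_PNFS = 0x00010000
EXCHGID4_FLAG_USE_PNFS_MDS = 0x00020000
EXCHGID4_FLAG_USE_PNFS_DS = 0x00040000


def pnfs_roles(flags: int) -> dict:
    """Report which pNFS roles are set in an EXCHANGE_ID flags word."""
    return {
        "non_pnfs": bool(flags & EXCHGID4_FLAG_USE_NON_PNFS),
        "mds": bool(flags & EXCHGID4_FLAG_USE_PNFS_MDS),
        "ds": bool(flags & EXCHGID4_FLAG_USE_PNFS_DS),
    }


# An interface eligible for both metadata and data operations has both bits set.
roles = pnfs_roles(EXCHGID4_FLAG_USE_PNFS_MDS | EXCHGID4_FLAG_USE_PNFS_DS)
```

Here `roles["mds"]` and `roles["ds"]` are both true, which corresponds to the case described above where both flags are set to 1 in the packet capture.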

Metadata in NFS generally consists of file and folder attributes, such as file handles, permissions, access and modification times, and ownership information. Metadata can also include create and delete calls, link and unlink calls, and renames.

In pNFS, there is also a subset of metadata calls that are specific to the pNFS feature and are covered in further detail in RFC 5661. These calls are used to help determine pNFS-eligible devices, mappings of devices to datasets, and other required information. The following table shows a list of these pNFS-specific metadata operations.

| Operation | Description |
|---|---|
| LAYOUTGET | Obtains the data server map from the metadata server. |
| LAYOUTCOMMIT | Servers commit the layout and update the metadata maps. |
| LAYOUTRETURN | Returns the layout or the new layout if the data is modified. |
| GETDEVICEINFO | Client gets updated information on a data server in the storage cluster. |
| GETDEVICELIST | Client requests the list of all data servers participating in the storage cluster. |
| CB_LAYOUTRECALL | Server recalls the data layout from a client if conflicts are detected. |
| CB_RECALL_ANY | Returns any layouts to the metadata server. |
| CB_NOTIFY_DEVICEID | Notifies of any device ID changes. |

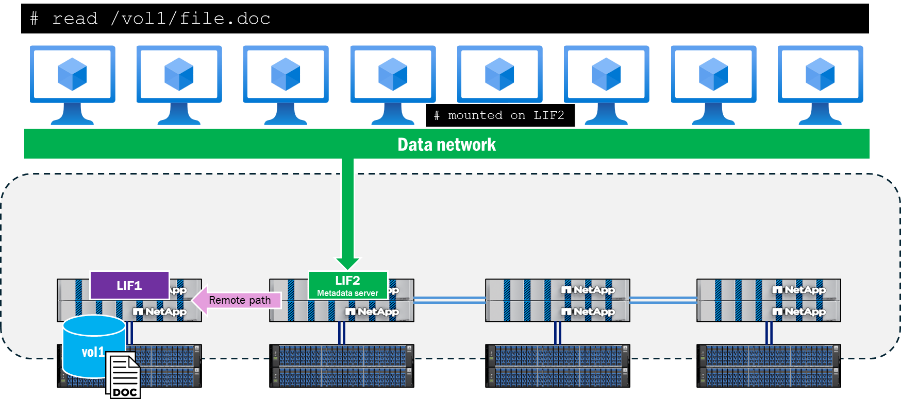

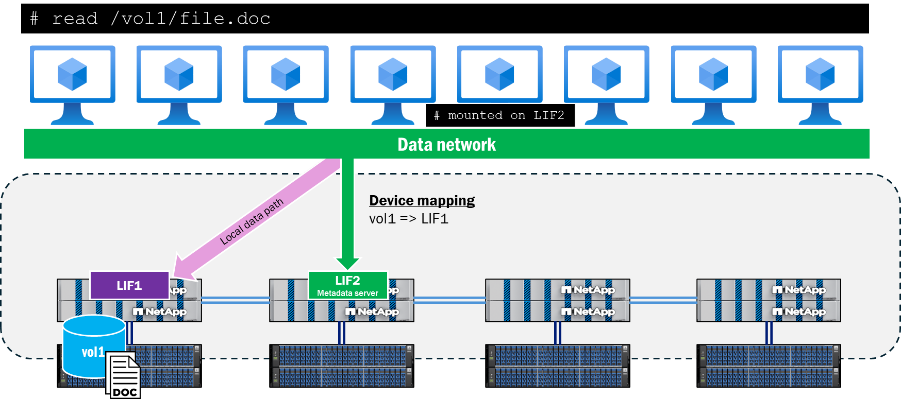

Data path information

After the metadata server is established and data operations begin, ONTAP begins to track the device IDs eligible for pNFS read and write operations, as well as the device mappings, which associate the volumes in the cluster with the local network interfaces. This process occurs when a read or write operation is performed in the mount. Metadata calls, such as GETATTR, will not trigger these device mappings. As such, running an ls command inside the mount point will not update the mappings.

Devices and mappings can be seen using the ONTAP CLI in advanced privilege, as shown below.

    ::*> pnfs devices show -vserver DEMO
      (vserver nfs pnfs devices show)
    Vserver Name     Mapping ID       Volume MSID      Mapping Status   Generation
    ---------------  ---------------  ---------------  ---------------  -------------
    DEMO             16               2157024470       available        1

    ::*> pnfs devices mappings show -vserver SVM
      (vserver nfs pnfs devices mappings show)
    Vserver Name    Mapping ID       Dsid             LIF IP
    --------------  ---------------  ---------------  --------------------
    DEMO            16               2488             10.193.67.211

In these commands, the volume names are not present. Instead, the numeric IDs associated with those volumes are used: the master set ID (MSID) and the data set ID (DSID). To find the volumes associated with the mappings, you can use volume show -dsid [dsid_numeric] or volume show -msid [msid_numeric] in advanced privilege of the ONTAP CLI.
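Because the two commands report different IDs for the same device, it can help to combine their output. The following sketch parses output shaped like the example above and joins the rows on vserver and mapping ID. It is illustrative only: the helper names are hypothetical, the embedded text is copied from the example rather than from a live system, and the parser assumes whitespace-separated values with no embedded spaces. Joining on the mapping ID is also an assumption based on the example, where both tables show the same value.

```python
DEVICES_OUTPUT = """\
Vserver Name     Mapping ID       Volume MSID      Mapping Status   Generation
---------------  ---------------  ---------------  ---------------  -------------
DEMO             16               2157024470       available        1
"""

MAPPINGS_OUTPUT = """\
Vserver Name    Mapping ID       Dsid             LIF IP
--------------  ---------------  ---------------  --------------------
DEMO            16               2488             10.193.67.211
"""


def parse_rows(text, fields):
    """Parse the data rows that follow the dashed separator line."""
    rows, in_body = [], False
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped:
            continue
        if set(stripped) <= {"-", " "}:
            in_body = True  # everything after the separator is data
            continue
        if in_body:
            values = stripped.split()
            if len(values) == len(fields):
                rows.append(dict(zip(fields, values)))
    return rows


def join_by_mapping_id(devices, mappings):
    """Attach MSID and status to each mapping row that shares its mapping ID."""
    by_id = {(d["vserver"], d["mapping_id"]): d for d in devices}
    return [
        {**by_id[(m["vserver"], m["mapping_id"])], **m}
        for m in mappings
        if (m["vserver"], m["mapping_id"]) in by_id
    ]


devices = parse_rows(
    DEVICES_OUTPUT, ["vserver", "mapping_id", "msid", "status", "generation"]
)
mappings = parse_rows(MAPPINGS_OUTPUT, ["vserver", "mapping_id", "dsid", "lif_ip"])
joined = join_by_mapping_id(devices, mappings)
```

Each entry in `joined` carries the MSID, the DSID, and the LIF IP together, so the numeric IDs can be passed to the volume show lookups described above.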

When a client attempts to read or write to a file located on a node that is remote to the metadata server connection, pNFS negotiates the appropriate access paths to ensure data locality for those operations. The client then redirects to the advertised pNFS device rather than attempting to traverse the cluster network to access the file. This helps reduce CPU overhead and network latency.

pNFS control path

In addition to the metadata and data portions of pNFS, there is also a pNFS control path. The control path is used by the NFS server to synchronize file system information. In an ONTAP cluster, this information is replicated periodically over the backend cluster network to ensure that all pNFS devices and device mappings are in sync.

pNFS device population workflow

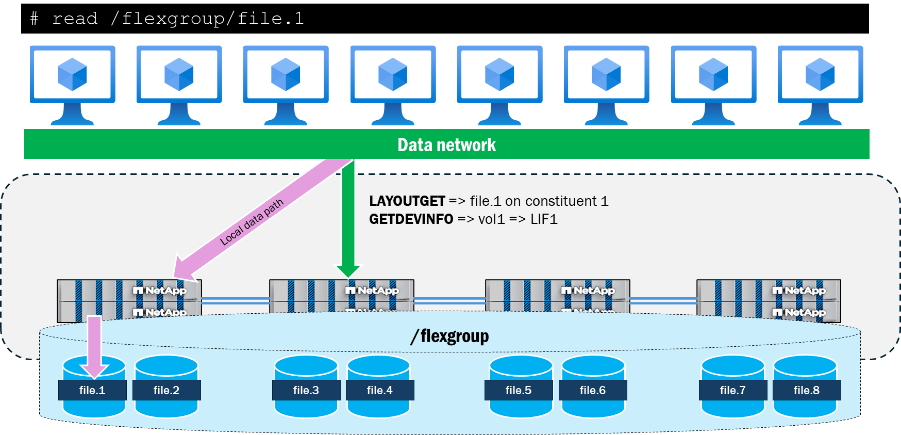

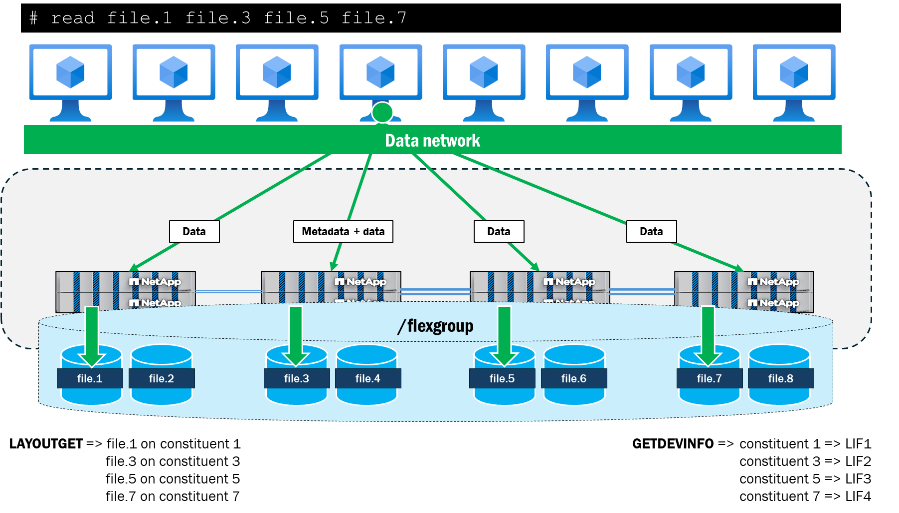

The following describes how a pNFS device populates in ONTAP after a client makes a request to read or write a file in a volume.

- Client requests read or write; an OPEN is performed and the file handle is retrieved.
- Once the OPEN is performed, the client sends the file handle to the storage in a LAYOUTGET call over the metadata server connection.
- LAYOUTGET returns information about the layout of the file, such as the state ID, the stripe size, file segment, and device ID, to the client.
- The client then takes the device ID and sends a GETDEVICEINFO call to the server to retrieve the IP address associated with the device.
- The storage sends a reply with the list of associated IP addresses for local access to the device.
- The client continues the NFS conversation over the local IP address sent back from the storage.
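The steps above can be sketched as a small in-memory model. This is a toy illustration of the call order only, not NFS client or ONTAP code: the class, its methods, and the lookup tables are hypothetical, and the layout it returns carries only a device ID, omitting the state ID, stripe size, and file segment that a real LAYOUTGET reply includes.

```python
from dataclasses import dataclass, field


@dataclass
class ToyMetadataServer:
    """Hypothetical stand-in for the metadata server side of the workflow."""
    file_to_device: dict  # file path -> device ID
    device_to_ips: dict   # device ID -> list of local IP addresses
    _handles: dict = field(default_factory=dict)

    def open(self, path):
        # OPEN: hand back a file handle for the path.
        handle = f"fh-{len(self._handles) + 1}"
        self._handles[handle] = path
        return handle

    def layoutget(self, handle):
        # LAYOUTGET: return the layout for the file (device ID only here).
        path = self._handles[handle]
        return {"device_id": self.file_to_device[path]}

    def getdeviceinfo(self, device_id):
        # GETDEVICEINFO: return the IP addresses local to the device.
        return list(self.device_to_ips[device_id])


def resolve_data_path(server, path):
    """Follow OPEN, LAYOUTGET, and GETDEVICEINFO, then pick a data address."""
    handle = server.open(path)
    layout = server.layoutget(handle)
    ips = server.getdeviceinfo(layout["device_id"])
    return ips[0]  # the client continues its I/O over this address


server = ToyMetadataServer(
    file_to_device={"/mnt/demo/file1": 16},
    device_to_ips={16: ["10.193.67.211"]},
)
data_ip = resolve_data_path(server, "/mnt/demo/file1")
```

The device ID and IP address reuse the values from the CLI example earlier in this section; the file path is made up for the illustration.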

Interaction of pNFS with FlexGroup volumes

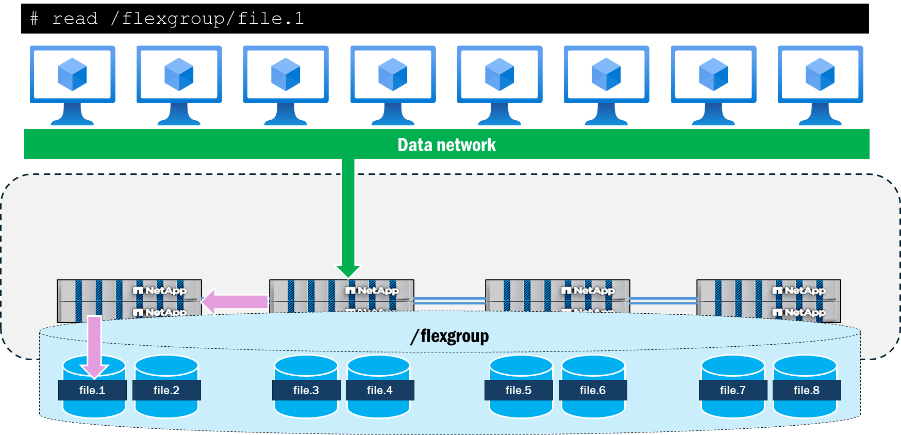

FlexGroup volumes in ONTAP present storage as FlexVol volume constituents that span multiple nodes in a cluster, which allows a workload to leverage multiple hardware resources while maintaining a single mountpoint. Because multiple nodes with multiple network interfaces interact with the workload, some remote traffic naturally traverses the backend cluster network in ONTAP.

When utilizing pNFS, ONTAP keeps track of the file and volume layouts of the FlexGroup volume and maps them to the local data interfaces in the cluster. For example, if a constituent volume that contains a file being accessed resides on node 1, then ONTAP will notify the client to redirect the data traffic to the data interface on node 1.
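The redirect decision can be pictured as a chain of lookups: file to constituent volume, constituent to node, node to local data interface. The following sketch models that chain with plain dictionaries. All names and addresses are hypothetical, and the tables stand in for state that ONTAP tracks internally; this is not how ONTAP implements it.

```python
def data_lif_for_file(path, file_to_constituent, constituent_to_node, node_to_lif):
    """Return the data interface local to the node that holds the file."""
    constituent = file_to_constituent[path]
    node = constituent_to_node[constituent]
    return node_to_lif[node]


def is_remote_without_pnfs(path, mount_node, file_to_constituent, constituent_to_node):
    """True if a mount on mount_node would reach the file over the cluster network."""
    return constituent_to_node[file_to_constituent[path]] != mount_node


# Hypothetical two-node layout with one constituent per node.
FILE_TO_CONSTITUENT = {"/fg/file1": "fg__0001", "/fg/file2": "fg__0002"}
CONSTITUENT_TO_NODE = {"fg__0001": "node1", "fg__0002": "node2"}
NODE_TO_LIF = {"node1": "192.0.2.11", "node2": "192.0.2.12"}

lif = data_lif_for_file(
    "/fg/file2", FILE_TO_CONSTITUENT, CONSTITUENT_TO_NODE, NODE_TO_LIF
)
```

In this layout, a client mounted through node 1 that reads `/fg/file2` would be redirected to the interface on node 2 under pNFS, whereas `is_remote_without_pnfs` shows that the same read without pNFS would cross the cluster network.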

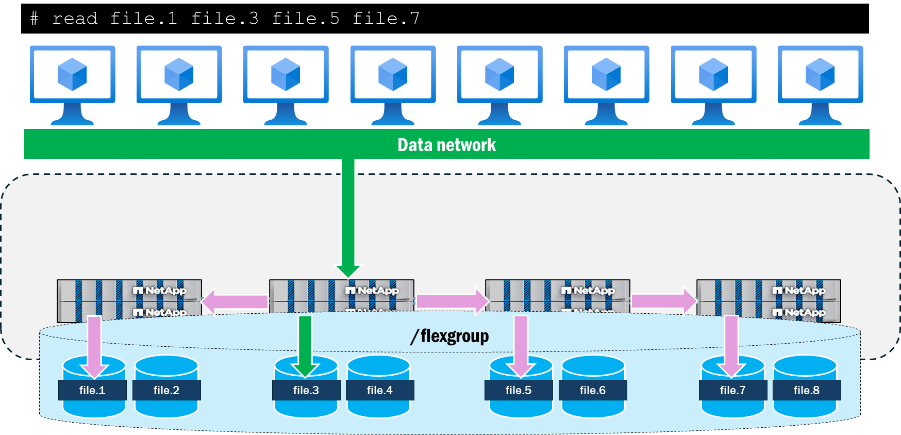

pNFS also presents parallel network paths to files from a single client, which NFSv4.1 without pNFS does not provide. For example, if a client accesses four files at the same time from the same mount using NFSv4.1 without pNFS, the same network path is used for all four files, and the ONTAP cluster services any files on other nodes with remote requests over the cluster network. The mount path can become a bottleneck because all operations follow a single path, arrive at a single node, and share that path with metadata operations.

When pNFS is used to access the same four files simultaneously from a single client, the client and server negotiate local paths to each node that holds the files and use multiple TCP connections for the data operations, while the mount path carries all metadata operations. This provides latency benefits by using local paths to the files and can also add throughput benefits because multiple network interfaces are in use, provided the clients can send enough data to saturate the network.

The following shows results from a simple test run on a single RHEL 9.5 client where four 10 GB files (all residing on different constituent volumes across two ONTAP cluster nodes) are read in parallel using dd. For each file, overall throughput and completion time improved when using pNFS. When using NFSv4.1 without pNFS, the performance delta between files that were local to the mount point and files that were remote was greater than with pNFS.

| Test | Throughput per file (MB/s) | Completion time per file |
|---|---|---|
| NFSv4.1: no pNFS | | |
| NFSv4.1: with pNFS | | |
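A comparable measurement can be approximated with a short script that reads several files in parallel and records per-file timing. This is a rough sketch, not the test above, which used dd with options that are not stated here. The function names and chunk size are assumptions, and results on a real mount are affected by client-side caching, which this sketch does not control.

```python
import time
from concurrent.futures import ThreadPoolExecutor

CHUNK = 1024 * 1024  # read size per call (assumed; tune for the workload)


def timed_read(path):
    """Read a file sequentially and report bytes read and elapsed seconds."""
    start = time.perf_counter()
    total = 0
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(CHUNK)
            if not chunk:
                break
            total += len(chunk)
    return {"path": path, "bytes": total, "seconds": time.perf_counter() - start}


def read_in_parallel(paths):
    """Read all files concurrently, one worker thread per file."""
    with ThreadPoolExecutor(max_workers=max(1, len(paths))) as pool:
        return list(pool.map(timed_read, paths))


def mb_per_second(result):
    """Convert one result to MB/s, returning 0.0 if no time was measured."""
    if result["seconds"] <= 0:
        return 0.0
    return result["bytes"] / result["seconds"] / 1_000_000
```

Running `read_in_parallel` against the same set of files on a mount with pNFS and on one without it gives per-file numbers that can be compared in the same way as the table above.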