pNFS use cases in ONTAP

pNFS can be used with various ONTAP features to improve performance and provide additional flexibility for NFS workloads.

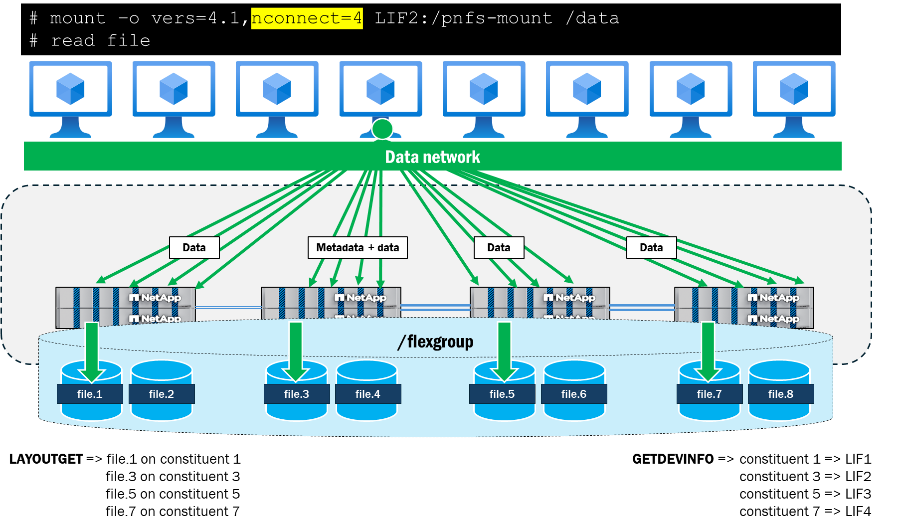

pNFS with nconnect

Newer NFS clients and servers support the nconnect mount option, which opens multiple TCP connections to a single IP address for one mount. This provides a mechanism to better parallelize operations, work around NFS server and client limitations, and potentially improve overall performance for certain workloads. nconnect is supported in ONTAP 9.8 and later, provided the client also supports it.

When using nconnect with pNFS, connections will parallelize using the nconnect option over each pNFS device advertised by the NFS server. For instance, if nconnect is set to four and there are four eligible interfaces for pNFS, then the total number of connections created will be up to 16 per mount point (4 nconnect x 4 IP addresses).
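The connection arithmetic above can be sketched as a small shell helper. The function name max_pnfs_connections is hypothetical and is not part of ONTAP or any NFS client. It only multiplies the nconnect value by the number of eligible pNFS interfaces to give the upper bound per mount point.

```shell
#!/bin/sh
# Hypothetical helper (not an ONTAP or NFS client tool): upper bound on
# TCP connections per mount point when nconnect is combined with pNFS.
# $1 = nconnect value, $2 = number of eligible pNFS interfaces
max_pnfs_connections() {
    echo $(( $1 * $2 ))
}

max_pnfs_connections 4 4   # prints 16
```

The result is a ceiling, not a guarantee: the source states "up to" this many connections, so the number actually opened can be lower.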

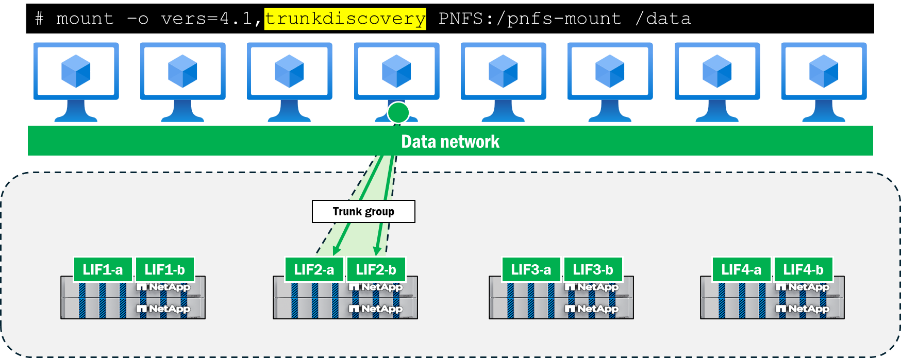

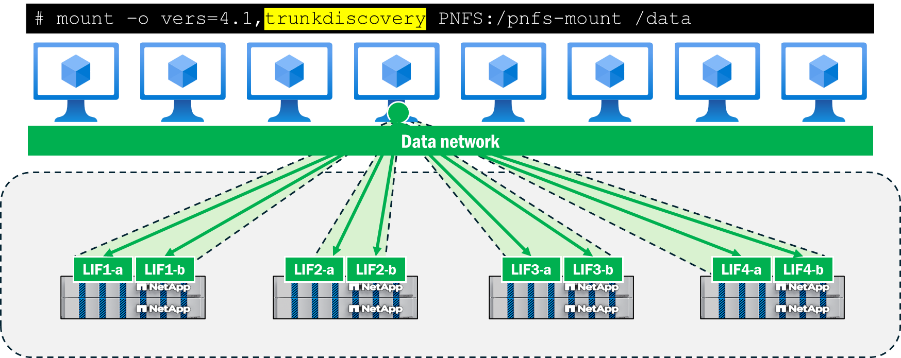

pNFS with NFSv4.1 session trunking

NFSv4.1 session trunking (RFC 5661, section 2.10.5) is the use of multiple TCP connections between a client and server to increase the speed of data transfer. Support for NFSv4.1 session trunking was added in ONTAP 9.14.1, and it must be used with clients that also support session trunking.

In ONTAP, session trunking can be used across multiple nodes in a cluster to provide extra throughput and redundancy across connections.

Session trunking can be established in multiple ways:

- Discover automatically via mount options: Session trunking in most modern NFS clients can be established via mount options (check your OS vendor's documentation) that signal to the NFS server to send information back to the client about session trunks. This information appears via an NFS packet as an fs_location4 call.

  The mount option in use depends on the client's OS version. For instance, Ubuntu Linux flavors generally use max_connect=n to signal that a session trunk is to be used. In RHEL Linux distros, the trunkdiscovery mount option is used.

  Ubuntu example:

      mount -o vers=4.1,max_connect=8 10.10.10.10:/pNFS /mnt/pNFS

  RHEL example:

      mount -o vers=4.1,trunkdiscovery 10.10.10.10:/pNFS /mnt/pNFS

  If you attempt to use max_connect on RHEL distros, it will be treated as nconnect instead and session trunking will not work as expected.

- Establish manually: You can establish session trunking manually by mounting each individual IP address to the same export path and mount point. For example, if you have two IP addresses on the same node (10.10.10.10 and 10.10.10.11) for an export path of /pNFS, you run the mount command twice:

      mount -o vers=4.1 10.10.10.10:/pNFS /mnt/pNFS
      mount -o vers=4.1 10.10.10.11:/pNFS /mnt/pNFS

  Repeat this process across all interfaces you want to participate in the trunk.
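When many interfaces participate in a manually established trunk, the repeated mount commands can be generated rather than typed. The following is a minimal sketch, assuming the same export path and mount point as the example above. The function name print_trunk_mounts is hypothetical, and the function only prints the commands so that they can be reviewed before being run with root privileges.

```shell
#!/bin/sh
# Hypothetical helper: prints (does not run) one mount command per
# interface so the list can be reviewed before it is executed as root.
# $1 = export path, $2 = mount point, remaining args = interface IPs
print_trunk_mounts() {
    export_path=$1
    mount_point=$2
    shift 2
    for ip in "$@"; do
        echo "mount -o vers=4.1 ${ip}:${export_path} ${mount_point}"
    done
}

print_trunk_mounts /pNFS /mnt/pNFS 10.10.10.10 10.10.10.11
```

Pass only interfaces that reside on the same node in a single call, because each node gets its own session trunk.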

Each node gets its own session trunk. Trunks do not traverse nodes.

When using pNFS, use only session trunking or nconnect. Using both will result in undesirable behavior, such as only the metadata server connection getting the benefits of nconnect with the data servers using a single connection.
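One way to guard against combining the two features is to check the mount option string before mounting. The following is a minimal sketch: check_pnfs_opts is a hypothetical function name, not an ONTAP or NFS client utility. It assumes that max_connect and trunkdiscovery indicate session trunking, as in the Ubuntu and RHEL examples above. Because RHEL treats max_connect as nconnect, adjust the patterns for the client distribution in use.

```shell
#!/bin/sh
# Hypothetical pre-mount check: returns 1 when a mount option string mixes
# nconnect with a session trunking option (max_connect or trunkdiscovery).
# $1 = comma-separated mount options, for example "vers=4.1,nconnect=4"
check_pnfs_opts() {
    # Wrap the string in commas so every option is delimited on both sides.
    case ",$1," in
        *,nconnect=*) has_nconnect=1 ;;
        *) has_nconnect=0 ;;
    esac
    case ",$1," in
        *,max_connect=*|*,trunkdiscovery,*) has_trunk=1 ;;
        *) has_trunk=0 ;;
    esac
    if [ "$has_nconnect" -eq 1 ] && [ "$has_trunk" -eq 1 ]; then
        echo "conflict: use nconnect or session trunking, not both" >&2
        return 1
    fi
    return 0
}

check_pnfs_opts "vers=4.1,nconnect=4,trunkdiscovery" || echo "options rejected"
```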

pNFS can provide a local path to each participating node in a cluster, and when used with session trunking, pNFS can leverage a session trunk per node to maximize throughput for the entire cluster.

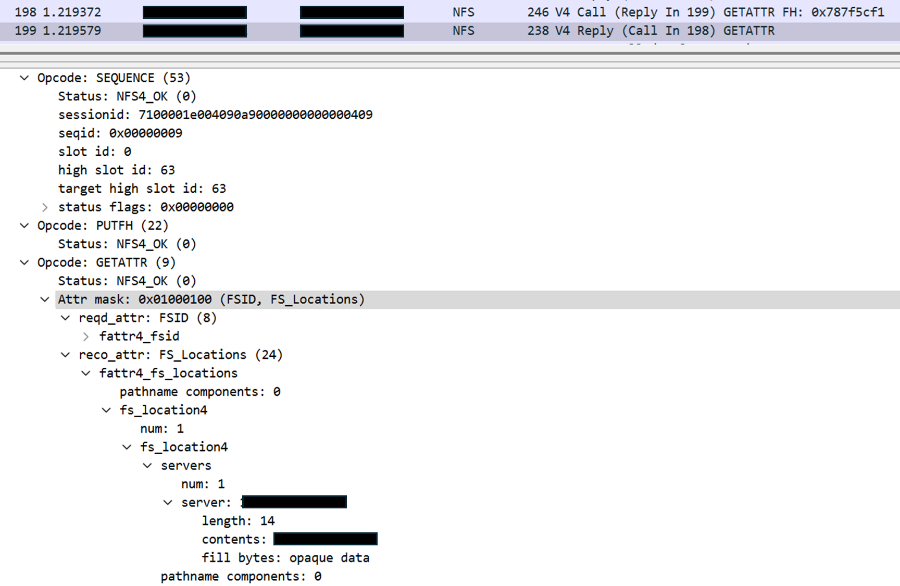

When trunkdiscovery is used, the client sends an additional GETATTR call (FS_Locations) to retrieve the session trunk interfaces listed on the NFS server node where the mount interface is located. Once those addresses are returned, subsequent mounts are made to them. This exchange can be seen in a packet capture taken during the mount.

pNFS versus NFSv4.1 referrals

NFSv4.1 referrals provide a mode of initial mount path redirection that directs a client to the location of the volumes upon a mount request. NFSv4.1 referrals work within a single SVM. This feature attempts to localize the NFS mount to a network interface residing on the same node as the data volume. If that interface or volume moves to another node while mounted to a client, then the data path is no longer localized until a new mount is established.

pNFS does not attempt to localize a mount path. Instead, it establishes a metadata server using a mount path and then localizes the data path dynamically as needed.

NFSv4.1 referrals can be used with pNFS, but the functionality is unnecessary: pNFS already localizes the data path, so enabling referrals with pNFS will not show noticeable results.

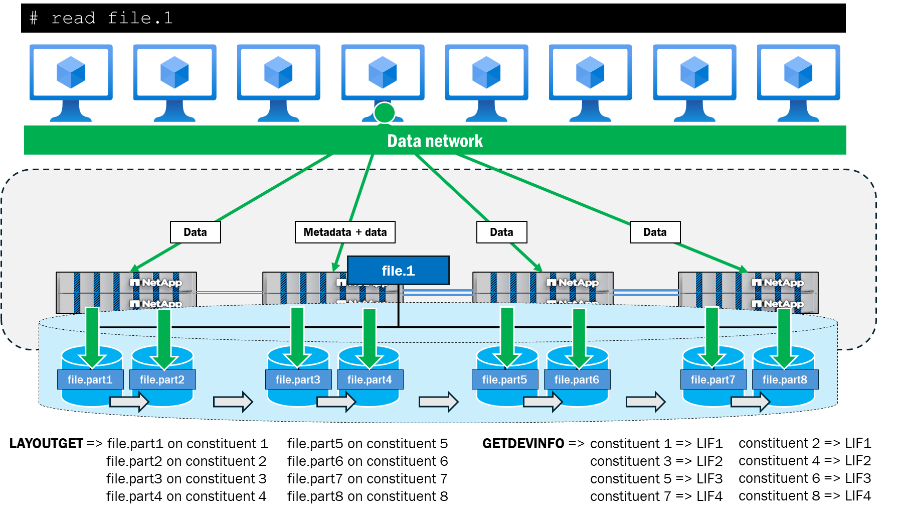

Interaction of pNFS with advanced capacity balancing

Advanced capacity balancing in ONTAP writes portions of file data across the constituent volumes of a FlexGroup volume (it is not supported with single FlexVol volumes). As a file grows, ONTAP begins writing data to a new multipart inode on a different constituent volume, which might be on the same node or on a different node. Writes, reads, and metadata operations to these multi-inode files are transparent and non-disruptive to clients. Advanced capacity balancing improves space management among the FlexGroup constituent volumes, which provides more consistent performance.

pNFS can redirect data IO to a localized network path depending on the file layout information stored in the NFS server. When a single large file is created in parts across multiple constituent volumes that can potentially span multiple nodes in the cluster, pNFS in ONTAP can still provide localized traffic to each file part because ONTAP maintains the file layout information for all of the file parts as well. When a file is read, the data path locality will change as needed.