Learn about Cloud Volumes ONTAP HA pairs in Google Cloud

A Cloud Volumes ONTAP high-availability (HA) configuration provides nondisruptive operations and fault tolerance. In Google Cloud, data is synchronously mirrored between the two nodes.

HA components

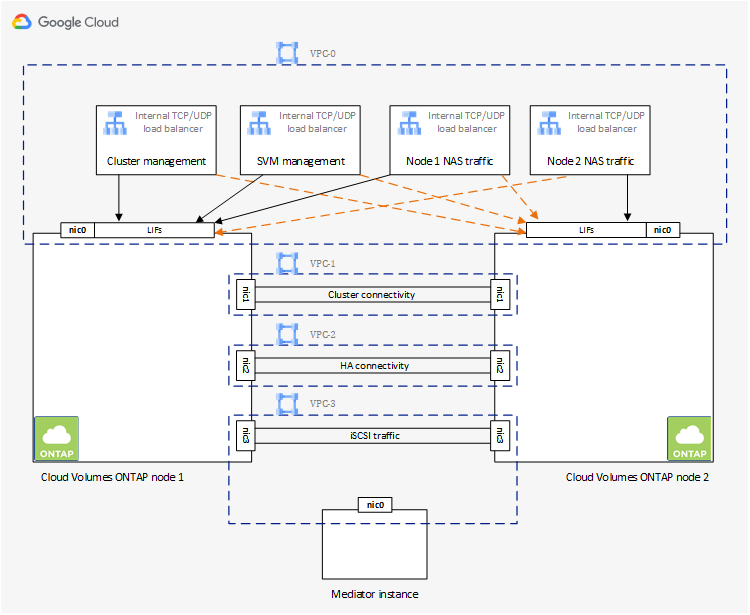

Cloud Volumes ONTAP HA configurations in Google Cloud include the following components:

-

Two Cloud Volumes ONTAP nodes whose data is synchronously mirrored between each other.

-

A mediator instance that provides a communication channel between the nodes to assist in storage takeover and giveback processes.

-

One zone or three zones (recommended).

If you choose three zones, the two nodes and mediator are in separate Google Cloud zones.

-

Four Virtual Private Clouds (VPCs).

The configuration uses four VPCs because GCP requires that each network interface resides in a separate VPC network.

-

Four Google Cloud internal load balancers (TCP/UDP) that manage incoming traffic to the Cloud Volumes ONTAP HA pair.

Learn about networking requirements, including more details about load balancers, VPCs, internal IP addresses, subnets, and more.

The following conceptual image shows a Cloud Volumes ONTAP HA pair and its components:

Mediator

Here are some key details about the mediator instance in Google Cloud:

- Instance type

  e2-micro (an f1-micro instance was previously used)

- Disks

  Two standard persistent disks that are 10 GiB each

- Operating system

  Debian 11

  For Cloud Volumes ONTAP 9.10.0 and earlier, Debian 10 was installed on the mediator.

- Upgrades

  When you upgrade Cloud Volumes ONTAP, the NetApp Console also updates the mediator instance as needed.

- Access to the instance

  For Debian, the default cloud user is `admin`. Google Cloud creates and adds a certificate for the `admin` user when SSH access is requested through the Google Cloud Console or the gcloud command line. You can specify `sudo` to gain root privileges.

- Third-party agents

  Third-party agents or VM extensions are not supported on the mediator instance.

Storage takeover and giveback

If a node goes down, the other node can serve data for its partner to provide continued data service. Clients can access the same data from the partner node because the data was synchronously mirrored to the partner.

After the node reboots, the partner must resync data before it can return the storage. The time that it takes to resync data depends on how much data was changed while the node was down.

Storage takeover, resync, and giveback are all automatic by default. No user action is required.
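The takeover, resync, and giveback sequence described above can be pictured as a small state machine. The following Python sketch is only a conceptual model, not how ONTAP implements the process: the state names, event names, and the `next_state` and `run` helpers are all hypothetical and chosen for illustration.

```python
from enum import Enum


class PairState(Enum):
    NORMAL = "normal"      # both nodes serve their own storage
    TAKEOVER = "takeover"  # the partner serves data for the failed node
    RESYNC = "resync"      # the partner resyncs changed data before giveback


# Hypothetical events mapped to the transitions described in the text.
TRANSITIONS = {
    (PairState.NORMAL, "node_down"): PairState.TAKEOVER,
    (PairState.TAKEOVER, "node_rebooted"): PairState.RESYNC,
    (PairState.RESYNC, "resync_complete"): PairState.NORMAL,  # giveback
}


def next_state(state: PairState, event: str) -> PairState:
    """Return the state that follows `event`, or raise if it is not allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} is not valid in state {state.name}") from None


def run(events, state: PairState = PairState.NORMAL):
    """Apply events in order and return the list of states visited."""
    history = [state]
    for event in events:
        state = next_state(state, event)
        history.append(state)
    return history


history = run(["node_down", "node_rebooted", "resync_complete"])
print([s.name for s in history])  # ['NORMAL', 'TAKEOVER', 'RESYNC', 'NORMAL']
```

The model makes one point from the text explicit: giveback is reachable only through the resync state, so storage is never returned before the data has been resynced.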

RPO and RTO

An HA configuration maintains high availability of your data as follows:

- The recovery point objective (RPO) is 0 seconds.

  Your data is transactionally consistent with no data loss.
- The recovery time objective (RTO) is 120 seconds.

  In the event of an outage, data should be available in 120 seconds or less.

HA deployment models

You can ensure the high availability of your data by deploying an HA configuration in multiple zones or in a single zone.

- Multiple zones (recommended)

  Deploying an HA configuration across three zones ensures continuous data availability if a failure occurs within a zone. Write performance is slightly lower than in a single-zone deployment, but the difference is minimal.

- Single zone

  When deployed in a single zone, a Cloud Volumes ONTAP HA configuration uses a spread placement policy. This policy ensures that an HA configuration is protected from a single point of failure within the zone, without having to use separate zones to achieve fault isolation.

  This deployment model also lowers your costs because there are no data egress charges between zones.

How storage works in an HA pair

Unlike an ONTAP cluster, storage in a Cloud Volumes ONTAP HA pair in GCP is not shared between nodes. Instead, data is synchronously mirrored between the nodes so that the data is available in the event of failure.

Storage allocation

When you create a new volume and additional disks are required, the Console allocates the same number of disks to both nodes, creates a mirrored aggregate, and then creates the new volume. For example, if two disks are required for the volume, the Console allocates two disks per node for a total of four disks.
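The disk arithmetic in this example can be written out as a short Python sketch. The function name `mirrored_disk_allocation` and its return format are assumptions made for illustration; they are not part of the Console or any NetApp API.

```python
def mirrored_disk_allocation(disks_required: int, nodes: int = 2) -> dict:
    """Return per-node and total disk counts for a mirrored aggregate.

    Hypothetical helper: each node in the HA pair receives the same
    number of disks, so the total is the requirement times the node count.
    """
    if disks_required < 0:
        raise ValueError("disks_required must not be negative")
    return {"per_node": disks_required, "total": disks_required * nodes}


allocation = mirrored_disk_allocation(2)
print(allocation)  # {'per_node': 2, 'total': 4}
```

Because every disk is allocated once per node, the disk count for an HA pair is double what the volume alone would need.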

Storage configurations

You can use an HA pair as an active-active configuration, in which both nodes serve data to clients, or as an active-passive configuration, in which the passive node responds to data requests only if it has taken over storage for the active node.

Performance expectations for an HA configuration

A Cloud Volumes ONTAP HA configuration synchronously replicates data between nodes, which consumes network bandwidth. As a result, you can expect the following performance in comparison to a single-node Cloud Volumes ONTAP configuration:

- For HA configurations that serve data from only one node, read performance is comparable to the read performance of a single-node configuration, whereas write performance is lower.
- For HA configurations that serve data from both nodes, read performance is higher than the read performance of a single-node configuration, and write performance is the same or higher.

For more details about Cloud Volumes ONTAP performance, refer to Performance.

Client access to storage

Clients should access NFS and CIFS volumes by using the data IP address of the node on which the volume resides. If NAS clients access a volume by using the IP address of the partner node, traffic goes between both nodes, which reduces performance.

If you move a volume between nodes in an HA pair, you should remount the volume by using the IP address of the other node. Otherwise, you can experience reduced performance. If clients support NFSv4 referrals or folder redirection for CIFS, you can enable those features on the Cloud Volumes ONTAP systems to avoid remounting the volume. For details, refer to ONTAP documentation.

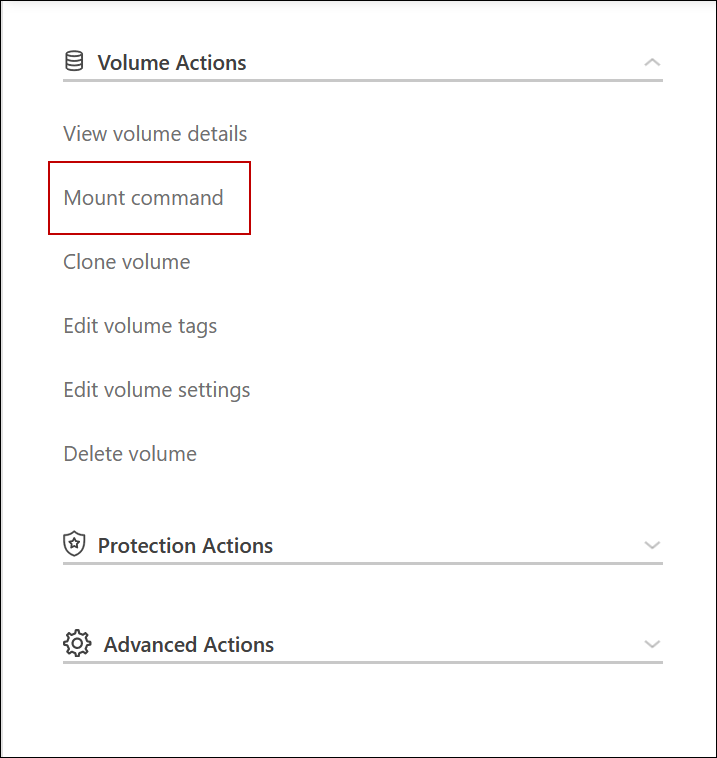

You can locate the correct IP address from the Console by selecting the volume and clicking Mount Command.
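The rule that clients should mount a volume through the data IP address of the node that owns it can be illustrated with a minimal Python sketch. Everything here is hypothetical: the node names, the IP addresses, the `Volume` record, and the `nfs_mount_command` helper are placeholders, and the Mount Command shown in the Console remains the authoritative source for a real system.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Volume:
    name: str
    junction_path: str
    owner_node: str  # node on which the volume currently resides


# Hypothetical data IP addresses for the two nodes in the HA pair.
NODE_DATA_IPS = {"node1": "10.0.1.10", "node2": "10.0.1.20"}


def nfs_mount_command(volume: Volume, node_ips: dict, mount_point: str) -> str:
    """Build an NFS mount command that targets the owning node's data IP."""
    ip = node_ips[volume.owner_node]
    return f"mount -t nfs {ip}:{volume.junction_path} {mount_point}"


vol = Volume(name="vol1", junction_path="/vol1", owner_node="node1")
print(nfs_mount_command(vol, NODE_DATA_IPS, "/mnt/vol1"))
# mount -t nfs 10.0.1.10:/vol1 /mnt/vol1

# After the volume moves to the partner node, the client should remount
# by using the other node's data IP address.
moved = replace(vol, owner_node="node2")
print(nfs_mount_command(moved, NODE_DATA_IPS, "/mnt/vol1"))
# mount -t nfs 10.0.1.20:/vol1 /mnt/vol1
```

Keying the lookup on the owning node means a volume move changes the mount target automatically, which mirrors the remount guidance above.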