Deploy a Kubernetes Cluster with NVIDIA DeepOps Automated Deployment

To deploy and configure the Kubernetes Cluster with NVIDIA DeepOps, complete the following steps:

-

Make sure that the same user account is present on all the Kubernetes master and worker nodes.

-

Clone the DeepOps repository.

git clone https://github.com/NVIDIA/deepops.git

-

Check out a recent release tag.

cd deepops

git checkout tags/20.08

If this step is skipped, the latest development code is used, not an official release.

-

Prepare the Deployment Jump by installing the necessary prerequisites.

./scripts/setup.sh

-

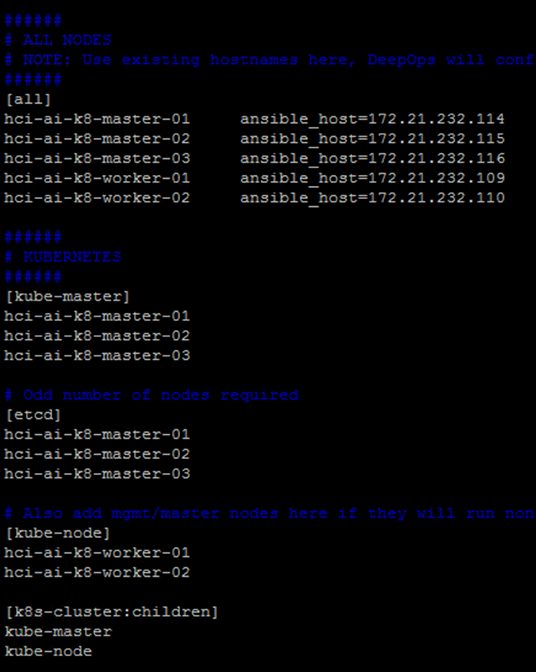

Create and edit the Ansible inventory by opening deepops/config/inventory in the vi editor.

-

List all the master and worker nodes under [all].

-

List all the master nodes under [kube-master].

-

List all the master nodes under [etcd].

-

List all the worker nodes under [kube-node].
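As a rough illustration of the layout described above, the following sketch writes a sample inventory to a scratch file rather than to deepops/config/inventory. The host names and IP addresses are hypothetical placeholders, the sketch assumes one master node and two worker nodes, and only the four groups named in these steps are shown.

```shell
# Sketch only: host names and IP addresses below are hypothetical placeholders.
# The file is written to a scratch location, not to deepops/config/inventory.
inventory_file="$(mktemp)"

cat > "$inventory_file" <<'EOF'
[all]
k8s-master-01 ansible_host=10.0.0.11
k8s-worker-01 ansible_host=10.0.0.21
k8s-worker-02 ansible_host=10.0.0.22

[kube-master]
k8s-master-01

[etcd]
k8s-master-01

[kube-node]
k8s-worker-01
k8s-worker-02
EOF

# Count the group headers as a quick sanity check.
group_count="$(grep -c '^\[' "$inventory_file")"
echo "groups defined: $group_count"
```

Any other groups that already exist in the inventory file are outside the scope of this sketch and should be left as they are.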

-

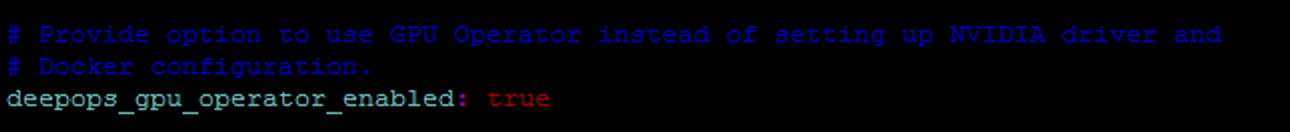

Enable GPU Operator by opening deepops/config/group_vars/k8s-cluster.yml in the vi editor.

-

Set the value of deepops_gpu_operator_enabled to true.

-

Verify the permissions and network configuration.

ansible all -m raw -a "hostname" -k -K

-

If SSH to the remote hosts requires a password, use -k.

-

If sudo on the remote hosts requires a password, use -K.
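The choice of flags can be sketched as a small helper. The function name ansible_auth_flags and its yes/no arguments are hypothetical and not part of DeepOps or Ansible. The sketch only prints the resulting command, so it can be tried without a cluster.

```shell
# Hypothetical helper: builds the ansible flags from two yes/no answers.
# -k (--ask-pass) prompts for the SSH password,
# -K (--ask-become-pass) prompts for the sudo password.
ansible_auth_flags() {
  ssh_needs_password="$1"   # "yes" or "no"
  sudo_needs_password="$2"  # "yes" or "no"
  flags=""
  [ "$ssh_needs_password" = "yes" ] && flags="$flags -k"
  [ "$sudo_needs_password" = "yes" ] && flags="$flags -K"
  # Trim the leading space, if any.
  echo "${flags# }"
}

# Print (rather than run) the check command for key-based SSH with a sudo password.
echo "ansible all -m raw -a \"hostname\" $(ansible_auth_flags no yes)"
```

With key-based SSH and passwordless sudo, both flags can be dropped.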

-

If the previous step passed without any issues, proceed with the setup of Kubernetes.

ansible-playbook --limit k8s-cluster playbooks/k8s-cluster.yml -k -K

-

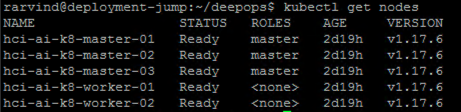

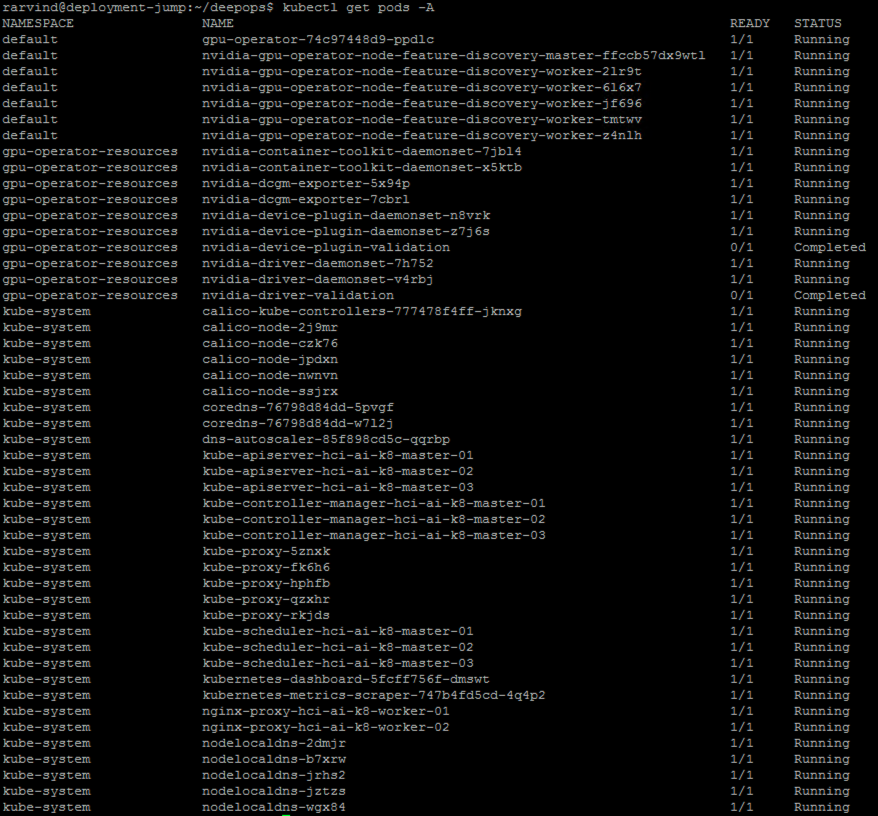

To verify the status of the Kubernetes nodes and the pods, run the following commands:

kubectl get nodes

kubectl get pods -A

It can take a few minutes for all the pods to run.
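To see how many pods are still starting, the pod listing can be summarized with a small filter. This is a minimal sketch: the function name count_pending_pods is hypothetical, it assumes the default column order of kubectl get pods -A (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE), and the sample lines are made up so that the example runs without a live cluster.

```shell
# Hypothetical helper: reads `kubectl get pods -A --no-headers` output on stdin
# and prints how many pods are neither Running nor Completed.
# Assumes the default column order: NAMESPACE NAME READY STATUS RESTARTS AGE.
count_pending_pods() {
  awk '$4 != "Running" && $4 != "Completed" { n++ } END { print n + 0 }'
}

# Example with made-up sample lines instead of a live cluster.
count_pending_pods <<'EOF'
kube-system   coredns-abc   1/1   Running             0   5m
kube-system   calico-xyz    0/1   ContainerCreating   0   1m
default       gpu-test      0/1   Completed           0   3m
EOF
```

Against a real cluster, the same filter could be fed with kubectl get pods -A --no-headers | count_pending_pods; a result of 0 suggests that every pod has reached Running or Completed.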

-

Verify that the Kubernetes setup can access and use the GPUs.

./scripts/k8s_verify_gpu.sh

Expected sample output:

rarvind@deployment-jump:~/deepops$ ./scripts/k8s_verify_gpu.sh
job_name=cluster-gpu-tests
Node found with 3 GPUs
Node found with 3 GPUs
total_gpus=6
Creating/Deleting sandbox Namespace
updating test yml
downloading containers ...
job.batch/cluster-gpu-tests condition met
executing ...
Mon Aug 17 16:02:45 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.64.00    Driver Version: 440.64.00    CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla T4            On   | 00000000:18:00.0 Off |                    0 |
| N/A   38C    P8    10W /  70W |      0MiB / 15109MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
Mon Aug 17 16:02:45 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.64.00    Driver Version: 440.64.00    CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla T4            On   | 00000000:18:00.0 Off |                    0 |
| N/A   38C    P8    10W /  70W |      0MiB / 15109MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
Mon Aug 17 16:02:45 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.64.00    Driver Version: 440.64.00    CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla T4            On   | 00000000:18:00.0 Off |                    0 |
| N/A   38C    P8    10W /  70W |      0MiB / 15109MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
Mon Aug 17 16:02:45 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.64.00    Driver Version: 440.64.00    CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla T4            On   | 00000000:18:00.0 Off |                    0 |
| N/A   38C    P8    10W /  70W |      0MiB / 15109MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
Mon Aug 17 16:02:45 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.64.00    Driver Version: 440.64.00    CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla T4            On   | 00000000:18:00.0 Off |                    0 |
| N/A   38C    P8    10W /  70W |      0MiB / 15109MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
Mon Aug 17 16:02:45 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.64.00    Driver Version: 440.64.00    CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla T4            On   | 00000000:18:00.0 Off |                    0 |
| N/A   38C    P8    10W /  70W |      0MiB / 15109MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
Number of Nodes: 2
Number of GPUs: 6
6 / 6 GPU Jobs COMPLETED
job.batch "cluster-gpu-tests" deleted
namespace "cluster-gpu-verify" deleted
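The summary line near the end of the output ("6 / 6 GPU Jobs COMPLETED") is the quickest indicator of success. The following sketch checks that line automatically. The function name gpu_jobs_all_completed is hypothetical, and the sketch assumes the summary keeps the "<completed> / <total> GPU Jobs COMPLETED" wording shown in the sample output.

```shell
# Hypothetical helper: reads the verification script's output on stdin and
# succeeds only if the "<completed> / <total> GPU Jobs COMPLETED" line
# reports the same number on both sides of the slash.
gpu_jobs_all_completed() {
  awk '/GPU Jobs COMPLETED/ { found = 1; ok = ($1 == $3) }
       END { if (found && ok) exit 0; exit 1 }'
}

# Example with the two summary lines from the sample output.
if printf '%s\n' 'Number of GPUs: 6' '6 / 6 GPU Jobs COMPLETED' | gpu_jobs_all_completed; then
  echo "all GPU jobs completed"
else
  echo "some GPU jobs did not complete"
fi
```

On the deployment host, the script output could be piped straight into the helper, for example ./scripts/k8s_verify_gpu.sh | gpu_jobs_all_completed.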

-

Install Helm on the Deployment Jump.

./scripts/install_helm.sh

-

Remove the taints on the master nodes.

kubectl taint nodes --all node-role.kubernetes.io/master-

This step is required to run the LoadBalancer pods.

-

Deploy LoadBalancer.

-

Edit the config/helm/metallb.yml file and provide a range of IP addresses in the Application Network to be used by LoadBalancer.

---
# Default address range matches private network for the virtual cluster
# defined in virtual/.
# You should set this address range based on your site's infrastructure.
configInline:
  address-pools:
  - name: default
    protocol: layer2
    addresses:
    - 172.21.231.130-172.21.231.140 #Application Network
controller:
  nodeSelector:
    node-role.kubernetes.io/master: ""

-

Run a script to deploy LoadBalancer.

./scripts/k8s_deploy_loadbalancer.sh
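Before running the deployment script, it can be worth confirming that the address range entered in config/helm/metallb.yml is well formed. The sketch below is one way to do that in plain shell. The helper names ip_to_int and range_size are hypothetical, and the range is the one from the example configuration.

```shell
# Hypothetical helper: converts a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs="$IFS"; IFS=.
  set -- $1          # split the address into its four octets
  IFS="$old_ifs"
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: prints the number of addresses in a "start-end" range,
# or fails if the end address is lower than the start address.
range_size() {
  start="$(ip_to_int "${1%-*}")"
  end="$(ip_to_int "${1#*-}")"
  [ "$end" -ge "$start" ] || return 1
  echo $(( end - start + 1 ))
}

# Range taken from the example metallb.yml.
range_size 172.21.231.130-172.21.231.140
```

For the example range this prints 11, the number of addresses available to LoadBalancer services. The check does not confirm that the addresses are unused on the network.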

-

Deploy an Ingress Controller.

./scripts/k8s_deploy_ingress.sh