Quality of service (QoS)

Throughput limits are useful for controlling service levels, managing unknown workloads, or testing applications before deployment to make sure they don't affect other workloads in production. They can also be used to constrain a bully workload after it is identified.

ONTAP QoS policy support

Systems running ONTAP can use the storage QoS feature to limit throughput in MBps and/or I/Os per second (IOPS) for different storage objects such as files, LUNs, volumes, or entire SVMs.

Minimum levels of service based on IOPS are also supported to provide consistent performance for SAN objects in ONTAP 9.2 and for NAS objects in ONTAP 9.3.

The QoS maximum throughput limit on an object can be set in MBps and/or IOPS. If both are used, the first limit reached is enforced by ONTAP. A workload can contain multiple objects, and a QoS policy can be applied to one or more workloads. When a policy is applied to multiple workloads, the workloads share the total limit of the policy. Nested objects are not supported (for example, files within a volume cannot each have their own policy). QoS minimums can only be set in IOPS.
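To make the "first limit reached" rule concrete, the following minimal sketch converts an MBps ceiling into an equivalent IOPS figure for a fixed I/O size and returns whichever ceiling binds first. The `QosCeiling` class and `effective_iops` function are hypothetical names used only for illustration, not ONTAP APIs, and the sketch assumes 1 MB = 1,000,000 bytes and a uniform I/O size.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QosCeiling:
    """Hypothetical model of a QoS maximum; not an ONTAP API object."""
    max_iops: Optional[float] = None
    max_mbps: Optional[float] = None


def effective_iops(ceiling: QosCeiling, io_size_bytes: int) -> Optional[float]:
    """Return the IOPS ceiling that binds first, or None if no limit is set."""
    candidates = []
    if ceiling.max_iops is not None:
        candidates.append(ceiling.max_iops)
    if ceiling.max_mbps is not None:
        # Assumption: 1 MB = 1,000,000 bytes and every I/O has the same size.
        candidates.append(ceiling.max_mbps * 1_000_000 / io_size_bytes)
    return min(candidates) if candidates else None


ceiling = QosCeiling(max_iops=5000, max_mbps=100)
small_io = effective_iops(ceiling, 4096)    # IOPS limit binds for 4 KiB I/O
large_io = effective_iops(ceiling, 65536)   # MBps limit binds for 64 KiB I/O
```

Under these assumptions, a workload issuing small I/Os reaches the IOPS limit first, while a workload issuing large I/Os reaches the MBps limit first.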

The following tools are currently available for managing ONTAP QoS policies and applying them to objects:

- ONTAP CLI
- ONTAP System Manager
- OnCommand Workflow Automation
- Active IQ Unified Manager
- NetApp PowerShell Toolkit for ONTAP
- ONTAP tools for VMware vSphere VASA Provider

To assign a QoS policy to a LUN, including VMFS and RDM, the ONTAP SVM (displayed as Vserver), LUN path, and serial number can be obtained from the Storage Systems menu on the ONTAP tools for VMware vSphere home page. Select the storage system (SVM), and then Related Objects > SAN. Use this approach when specifying QoS using one of the ONTAP tools.

Refer to Performance monitoring and management overview for more information.

Non-vVols NFS datastores

An ONTAP QoS policy can be applied to the entire datastore or to individual VMDK files within it. However, it is important to understand that all VMs on a traditional (non-vVols) NFS datastore share a common I/O queue from a given host. If an ONTAP QoS policy throttles any VM, all I/O to that datastore from that host will in practice appear to be throttled.

Example:

* You configure a QoS limit on vm1.vmdk for a volume that is mounted as a traditional NFS datastore by host esxi-01.

* The same host (esxi-01) is using vm2.vmdk and it is on the same volume.

* If vm1.vmdk gets throttled, then vm2.vmdk will also appear to be throttled because it shares the same I/O queue with vm1.vmdk.

Note: This does not apply to vVols.

Beginning in vSphere 6.5, you can manage file-granular limits on non-vVols datastores by using Storage Policy-Based Management (SPBM) with Storage I/O Control (SIOC) v2.

Refer to the following links for more information on managing performance with SIOC and SPBM policies.

To assign a QoS policy to a VMDK on NFS, note the following guidelines:

- The policy must be applied to the `vmname-flat.vmdk` that contains the actual virtual disk image, not the `vmname.vmdk` (virtual disk descriptor file) or `vmname.vmx` (VM descriptor file).
- Do not apply policies to other VM files such as virtual swap files (`vmname.vswp`).
- When using the vSphere web client to find file paths (Datastore > Files), be aware that it combines the information of the `-flat.vmdk` and `.vmdk` and simply shows one file with the name of the `.vmdk` but the size of the `-flat.vmdk`. Add `-flat` into the file name to get the correct path.
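The file-naming guidance above can be sketched as a small helper that turns the descriptor path shown in the vSphere client into the path of the flat file that should carry the QoS policy. The function name `flat_vmdk_path` is hypothetical, and the sketch assumes a simple `name.vmdk` descriptor with a matching `name-flat.vmdk` data file.

```python
from pathlib import PurePosixPath


def flat_vmdk_path(descriptor_path: str) -> str:
    """Derive the -flat.vmdk path from a .vmdk descriptor path (illustrative helper)."""
    path = PurePosixPath(descriptor_path)
    if path.suffix != ".vmdk":
        # Swap files (.vswp), VM descriptors (.vmx), and so on are not QoS targets.
        raise ValueError(f"not a virtual disk file: {descriptor_path}")
    if path.stem.endswith("-flat"):
        return str(path)  # already points at the flat file
    return str(path.with_name(f"{path.stem}-flat.vmdk"))


target = flat_vmdk_path("vm1/vm1.vmdk")
```

Rejecting non-VMDK files up front mirrors the guideline that policies should not be applied to swap files or VM descriptor files.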

FlexGroup datastores offer enhanced QoS capabilities when using ONTAP tools for VMware vSphere 9.8 and later. You can easily set QoS on all VMs in a datastore or on specific VMs. See the FlexGroup section of this report for more information. Be aware that the previously mentioned limitations of QoS with traditional NFS datastores still apply.

VMFS datastores

Using ONTAP LUNs, the QoS policies can be applied to the FlexVol volume that contains the LUNs or individual LUNs, but not individual VMDK files because ONTAP has no awareness of the VMFS file system.

vVols datastores

Minimum and/or maximum QoS can be set on individual VMs or VMDKs without impacting any other VM or VMDK by using Storage Policy-Based Management and vVols.

When creating the storage capability profile for the vVol container, specify a max and/or min IOPS value under the performance capability and then reference this SCP with the VM's storage policy. Use this policy when creating the VM or apply the policy to an existing VM.

Note: vVols requires the use of ONTAP tools for VMware vSphere, which functions as the VASA Provider for ONTAP. Refer to VMware vSphere Virtual Volumes (vVols) with ONTAP for vVols best practices.

ONTAP QoS and VMware SIOC

ONTAP QoS and VMware vSphere Storage I/O Control (SIOC) are complementary technologies that vSphere and storage administrators can use together to manage performance of vSphere VMs hosted on systems running ONTAP. Each tool has its own strengths, as shown in the following table. Because of the different scopes of VMware vCenter and ONTAP, some objects can be seen and managed by one system and not the other.

| Property | ONTAP QoS | VMware SIOC |
|---|---|---|
| When active | Policy is always active | Active when contention exists (datastore latency over threshold) |
| Type of units | IOPS, MBps | IOPS, shares |
| vCenter or application scope | Multiple vCenter environments, other hypervisors and applications | Single vCenter server |
| Set QoS on VM? | VMDK on NFS only | VMDK on NFS or VMFS |
| Set QoS on LUN (RDM)? | Yes | No |
| Set QoS on LUN (VMFS)? | Yes | Yes (the datastore can be throttled) |
| Set QoS on volume (NFS datastore)? | Yes | Yes (the datastore can be throttled) |
| Set QoS on SVM (tenant)? | Yes | No |
| Policy based approach? | Yes; can be shared by all workloads in the policy or applied in full to each workload in the policy. | Yes, with vSphere 6.5 and later. |
| License required | Included with ONTAP | Enterprise Plus |

VMware Storage Distributed Resource Scheduler

VMware Storage Distributed Resource Scheduler (SDRS) is a vSphere feature that places VMs on storage based on the current I/O latency and space usage. It then moves the VM or VMDKs nondisruptively between the datastores in a datastore cluster (also referred to as a pod), selecting the best datastore in which to place the VM or VMDKs in the datastore cluster. A datastore cluster is a collection of similar datastores that are aggregated into a single unit of consumption from the vSphere administrator's perspective.

When using SDRS with ONTAP tools for VMware vSphere, you must first create a datastore with the plug-in, use vCenter to create the datastore cluster, and then add the datastore to it. After the datastore cluster is created, additional datastores can be added to the datastore cluster directly from the provisioning wizard on the Details page.

Other ONTAP best practices for SDRS include the following:

- All datastores in the cluster should use the same type of storage (such as SAS, SATA, or SSD), be either all VMFS or NFS datastores, and have the same replication and protection settings.
- Consider using SDRS in default (manual) mode. This approach allows you to review the recommendations and decide whether to apply them or not. Be aware of these effects of VMDK migrations:
  - When SDRS moves VMDKs between datastores, any space savings from ONTAP cloning or deduplication are lost. You can rerun deduplication to regain these savings.
  - After SDRS moves VMDKs, NetApp recommends recreating the snapshots at the source datastore because space is otherwise locked by the VM that was moved.
  - Moving VMDKs between datastores on the same aggregate has little benefit, and SDRS does not have visibility into other workloads that might share the aggregate.
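As an illustration of the consistency checks listed above, the following sketch flags a candidate datastore cluster whose members differ in media type, protocol, or protection settings, or whose members share an aggregate. The `Datastore` record and `cluster_findings` function are hypothetical; in practice the attribute values would have to be gathered from vCenter and ONTAP by whatever inventory tooling is available.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Datastore:
    """Hypothetical inventory record; field values are free-form labels."""
    name: str
    media: str        # for example "SSD", "SAS", or "SATA"
    protocol: str     # "VMFS" or "NFS"
    protection: str   # label for replication and protection settings
    aggregate: str    # ONTAP aggregate hosting the datastore


def cluster_findings(datastores: List[Datastore]) -> List[str]:
    """Return human-readable findings for a proposed SDRS datastore cluster."""
    findings = []
    for attr in ("media", "protocol", "protection"):
        values = sorted({getattr(d, attr) for d in datastores})
        if len(values) > 1:
            findings.append(f"mixed {attr}: {', '.join(values)}")
    by_aggregate = defaultdict(list)
    for d in datastores:
        by_aggregate[d.aggregate].append(d.name)
    for aggregate, names in sorted(by_aggregate.items()):
        if len(names) > 1:
            # Moves between these datastores bring little benefit.
            findings.append(f"shared aggregate {aggregate}: {', '.join(sorted(names))}")
    return findings


findings = cluster_findings([
    Datastore("ds1", "SSD", "NFS", "mirrored", "aggr1"),
    Datastore("ds2", "SAS", "NFS", "mirrored", "aggr1"),
])
```

An empty result means none of the checks in this sketch found a mismatch; it does not replace a review of the SDRS recommendations themselves.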

Storage policy based management and vVols

VMware vSphere APIs for Storage Awareness (VASA) make it easy for a storage administrator to configure datastores with well-defined capabilities and enable the VM administrator to use those whenever needed to provision VMs without having to interact with each other. It's worth taking a look at this approach to see how it can streamline your virtualization storage operations and avoid a lot of trivial work.

Before VASA, VM administrators could define VM storage policies, but they had to work with the storage administrator to identify appropriate datastores, often by using documentation or naming conventions. With VASA, the storage administrator can define a range of storage capabilities, including performance, tiering, encryption, and replication. A set of capabilities for a volume or a set of volumes is called a storage capability profile (SCP).

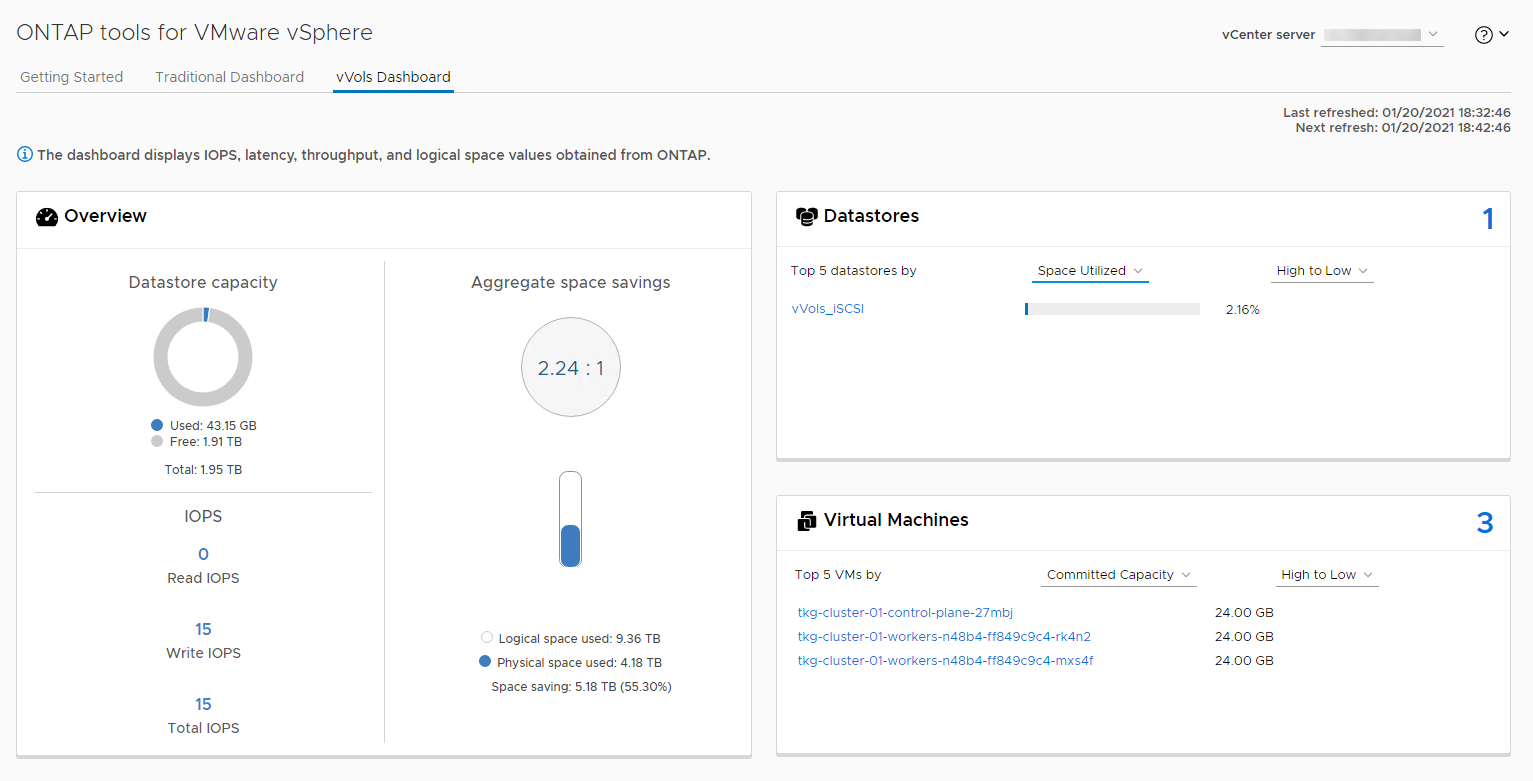

The SCP supports minimum and/or maximum QoS for a VM's data vVols. Minimum QoS is supported only on AFF systems. ONTAP tools for VMware vSphere includes a dashboard that displays VM granular performance and logical capacity for vVols on ONTAP systems.

The following figure depicts the ONTAP tools for VMware vSphere 9.8 vVols dashboard.

After the storage capability profile is defined, it can be used to provision VMs using the storage policy that identifies its requirements. The mapping between the VM storage policy and the datastore storage capability profile allows vCenter to display a list of compatible datastores for selection. This approach is known as storage policy based management.

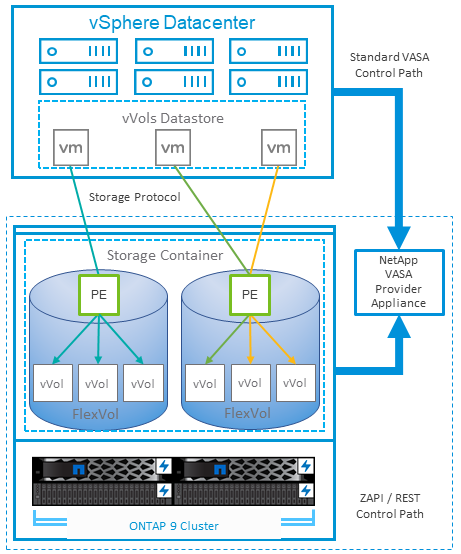
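The mapping between a VM storage policy and storage capability profiles can be pictured as a simple matching step. The sketch below is a deliberately simplified model that assumes exact-match capabilities expressed as key/value pairs; the function name, capability keys, and datastore names are all hypothetical, and the real matching is performed by vCenter and the VASA Provider.

```python
from typing import Dict, List

Capabilities = Dict[str, object]


def compatible_datastores(policy: Capabilities,
                          profiles: Dict[str, Capabilities]) -> List[str]:
    """List datastores whose profile satisfies every requirement in the policy."""
    return sorted(
        name
        for name, capabilities in profiles.items()
        if all(capabilities.get(key) == value for key, value in policy.items())
    )


# Hypothetical capability profiles, keyed by datastore name.
profiles = {
    "vvol_gold": {"encryption": True, "tiering": "none", "replication": "async"},
    "vvol_silver": {"encryption": False, "tiering": "auto", "replication": "async"},
}
matches = compatible_datastores({"encryption": True, "replication": "async"}, profiles)
```

In this model, a policy that names fewer requirements matches more datastores, which is why a VM storage policy should state every capability the VM actually depends on.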

VASA provides the technology to query storage and return a set of storage capabilities to vCenter. VASA vendor providers supply the translation between the storage system APIs and constructs and the VMware APIs that are understood by vCenter. NetApp's VASA Provider for ONTAP is offered as part of the ONTAP tools for VMware vSphere appliance VM, and the vCenter plug-in provides the interface to provision and manage vVol datastores, as well as the ability to define storage capability profiles (SCPs).

ONTAP supports both VMFS and NFS vVol datastores. Using vVols with SAN datastores brings some of the benefits of NFS such as VM-level granularity. Here are some best practices to consider, and you can find additional information in TR-4400:

- A vVol datastore can consist of multiple FlexVol volumes on multiple cluster nodes. The simplest approach is a single datastore, even when the volumes have different capabilities. SPBM makes sure that a compatible volume is used for the VM. However, the volumes must all be part of a single ONTAP SVM and accessed using a single protocol. One LIF per node for each protocol is sufficient. Avoid using multiple ONTAP releases within a single vVol datastore because the storage capabilities might vary across releases.
- Use the ONTAP tools for VMware vSphere plug-in to create and manage vVol datastores. In addition to managing the datastore and its profile, it automatically creates a protocol endpoint to access the vVols if needed. If LUNs are used, note that LUN PEs are mapped using LUN IDs 300 and higher. Verify that the ESXi host advanced system setting `Disk.MaxLUN` allows a LUN ID number that is higher than 300 (the default is 1,024). Do this step by selecting the ESXi host in vCenter, then the Configure tab, and find `Disk.MaxLUN` in the list of Advanced System Settings.
- Do not install or migrate VASA Provider, vCenter Server (appliance or Windows based), or ONTAP tools for VMware vSphere itself onto a vVols datastore, because they are then mutually dependent, limiting your ability to manage them in the event of a power outage or other data center disruption.
- Back up the VASA Provider VM regularly. At a minimum, create hourly snapshots of the traditional datastore that contains VASA Provider. For more about protecting and recovering the VASA Provider, see this KB article.

The following figure shows vVols components.