Manage a single-node ONTAP cluster

A single-node cluster is a special implementation of a cluster running on a standalone node. Single-node clusters are not recommended because they do not provide redundancy. If the node goes down, data access is lost.

For fault tolerance and nondisruptive operations, it is highly recommended that you configure your cluster with high-availability (HA) pairs.

If you choose to configure or upgrade a single-node cluster, you should be aware of the following:

-

Root volume encryption is not supported on single-node clusters.

-

If you remove nodes to leave a single-node cluster, you should repurpose the cluster ports to serve data traffic: change the cluster ports to data ports, and then create data LIFs on those ports.

-

For single-node clusters, you can specify the configuration backup destination during software setup. After setup, those settings can be modified using ONTAP commands.

-

If there are multiple hosts connecting to the node, each host can be configured with a different operating system such as Windows or Linux. If there are multiple paths from the host to the controller, then ALUA must be enabled on the host.

Ways to configure iSCSI SAN hosts with single nodes

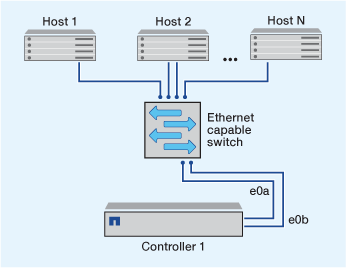

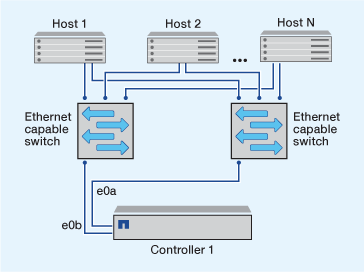

You can configure iSCSI SAN hosts to connect directly to a single node or to connect through one or more IP switches. The node can have multiple iSCSI connections to the switch.

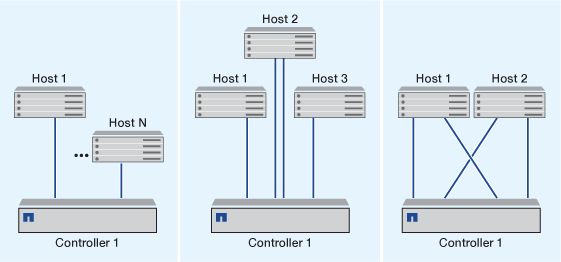

In direct-attached single-node configurations, one or more hosts are directly connected to the node.

In single-network single-node configurations, one switch connects a single node to one or more hosts. Because there is a single switch, this configuration is not fully redundant.

In multi-network single-node configurations, two or more switches connect a single node to one or more hosts. Because there are multiple switches, this configuration is fully redundant.
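The three iSCSI configurations described above differ only in how many IP switches sit between the hosts and the node. The following is a minimal Python sketch of that mapping, not an ONTAP tool. The function name `classify_iscsi_topology` and its return shape are assumptions made for illustration. The redundancy flag is left as `None` for direct-attached setups because the text makes no redundancy claim about them.

```python
from typing import Optional, Tuple


def classify_iscsi_topology(switch_count: int) -> Tuple[str, Optional[bool]]:
    """Return (configuration name, fully redundant?) for a single-node iSCSI setup.

    switch_count is the number of IP switches between the hosts and the node.
    The flag is None for direct-attached setups (hypothetical convention),
    since the description above does not rate their redundancy.
    """
    if switch_count < 0:
        raise ValueError("switch_count must be zero or greater")
    if switch_count == 0:
        return ("direct-attached single-node", None)
    if switch_count == 1:
        # One switch is a single point of failure.
        return ("single-network single-node", False)
    # Two or more switches.
    return ("multi-network single-node", True)


for n in (0, 1, 2):
    print(n, classify_iscsi_topology(n))
```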

Ways to configure FC and FC-NVMe SAN hosts with single nodes

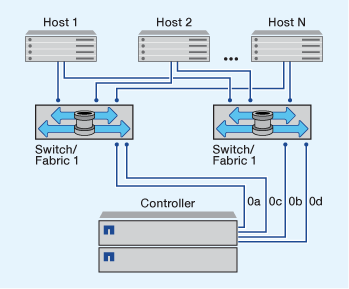

You can configure FC and FC-NVMe SAN hosts with single nodes through one or more fabrics. N-Port ID Virtualization (NPIV) is required and must be enabled on all FC switches in the fabric. You cannot directly attach FC or FC-NVMe SAN hosts to single nodes without using an FC switch.

In single-fabric single-node configurations, there is one switch connecting a single node to one or more hosts. Because there is a single switch, this configuration is not fully redundant.

In single-fabric single-node configurations, multipathing software is not required if you only have a single path from the host to the node.

In multifabric single-node configurations, there are two or more switches connecting a single node to one or more hosts. For simplicity, the following figure shows a multifabric single-node configuration with only two fabrics, but you can have two or more fabrics in any multifabric configuration. In this figure, the storage controller is mounted in the top chassis and the bottom chassis can be empty or can have an IOMX module, as it does in this example.

The FC target ports (0a, 0c, 0b, 0d) in the illustrations are examples. The actual port numbers vary depending on the model of your storage node and whether you are using expansion adapters.
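The two hard requirements in this section (at least one FC switch, and NPIV enabled on every switch) can be expressed as a simple planning check. This is a sketch under stated assumptions: `check_fc_single_node_config` is a hypothetical helper, and it takes a hand-written list of NPIV states rather than querying any switch or ONTAP API.

```python
from typing import List


def check_fc_single_node_config(npiv_enabled_per_switch: List[bool]) -> List[str]:
    """Return a list of problems; an empty list means both rules are met.

    npiv_enabled_per_switch holds one entry per FC switch between the hosts
    and the node, True when NPIV is enabled on that switch.
    """
    problems = []
    if not npiv_enabled_per_switch:
        # FC and FC-NVMe hosts cannot be attached directly to a single node.
        problems.append("no FC switch: direct attachment is not supported")
    disabled = [i for i, on in enumerate(npiv_enabled_per_switch) if not on]
    if disabled:
        problems.append(f"NPIV is disabled on switch index(es) {disabled}")
    return problems


print(check_fc_single_node_config([True, False]))
```

An empty result only means that these two rules are satisfied; it says nothing about redundancy, which still depends on whether you use one fabric or several.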

ONTAP upgrade for single-node cluster

You can use the ONTAP CLI to perform an automated update of a single-node cluster. Because single-node clusters lack redundancy, updates are always disruptive. You can't perform disruptive upgrades using System Manager.

Before you begin, complete the upgrade preparation steps.

-

Delete the previous ONTAP software package:

cluster image package delete -version <previous_package_version>

-

Download the target ONTAP software package:

cluster image package get -url location

    cluster1::> cluster image package get -url http://www.example.com/software/9.7/image.tgz

    Package download completed.
    Package processing completed.

-

Verify that the software package is available in the cluster package repository:

cluster image package show-repository

    cluster1::> cluster image package show-repository
    Package Version  Package Build Time
    ---------------- ------------------
    9.7              M/DD/YYYY 10:32:15

-

Verify that the cluster is ready to be upgraded:

cluster image validate -version <package_version_number>

    cluster1::> cluster image validate -version 9.7

    WARNING: There are additional manual upgrade validation checks that must be performed after these automated validation checks have completed...

-

Monitor the progress of the validation:

cluster image show-update-progress

-

Complete all required actions identified by the validation.

-

Optionally, generate a software upgrade estimate:

cluster image update -version <package_version_number> -estimate-only

The software upgrade estimate displays details about each component to be updated, and the estimated duration of the upgrade.

-

Perform the software upgrade:

cluster image update -version <package_version_number>

If an issue is encountered, the update pauses and prompts you to take corrective action. You can use the cluster image show-update-progress command to view details about any issues and the progress of the update. After correcting the issue, you can resume the update by using the cluster image resume-update command.

-

Display the cluster update progress:

cluster image show-update-progress

The node is rebooted as part of the update and cannot be accessed while rebooting.

-

Trigger a notification:

autosupport invoke -node * -type all -message "Finishing_Upgrade"

If your cluster is not configured to send messages, a copy of the notification is saved locally.
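To review the whole command sequence before starting, it can help to generate it for your specific versions. The sketch below is a hypothetical Python helper (`build_upgrade_commands` is not part of ONTAP) that only assembles the command strings from the procedure above, in order; it does not connect to a cluster or run anything. The previous version `9.6` in the usage line is an assumed placeholder. The manual step of completing the actions identified by validation is not represented, because it has no command.

```python
from typing import List


def build_upgrade_commands(previous_version: str, target_version: str,
                           package_url: str, estimate: bool = False) -> List[str]:
    """Return the ONTAP CLI commands from the upgrade procedure, in order."""
    commands = [
        f"cluster image package delete -version {previous_version}",
        f"cluster image package get -url {package_url}",
        "cluster image package show-repository",
        f"cluster image validate -version {target_version}",
        "cluster image show-update-progress",  # monitor the validation
    ]
    if estimate:
        # Optional step: estimate only, no changes are made.
        commands.append(
            f"cluster image update -version {target_version} -estimate-only")
    commands += [
        f"cluster image update -version {target_version}",
        "cluster image show-update-progress",  # monitor the update
        'autosupport invoke -node * -type all -message "Finishing_Upgrade"',
    ]
    return commands


# "9.6" is an assumed previous package version, used for illustration only.
for line in build_upgrade_commands(
        "9.6", "9.7", "http://www.example.com/software/9.7/image.tgz"):
    print(line)
```

If the update pauses on an issue, the cluster image resume-update command described above would be run by hand after the issue is corrected; the sketch deliberately leaves that out of the fixed sequence.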