ONTAP NAS configuration options and examples


Learn to create and use ONTAP NAS drivers with your Trident installation. This section provides backend configuration examples and details for mapping backends to StorageClasses.

Beginning with the 25.10 release, NetApp Trident supports NetApp AFX storage systems. NetApp AFX storage systems differ from other ONTAP-based systems (ASA, AFF, and FAS) in the implementation of their storage layer.

Only the ontap-nas driver (with the NFS protocol) is supported for NetApp AFX systems; the SMB protocol is not supported.

In the Trident backend configuration, you do not need to specify that your system is a NetApp AFX storage system. When you select ontap-nas as the storageDriverName, Trident automatically detects the AFX storage system. Some backend configuration parameters are not applicable to AFX storage systems, as noted in the table below.

Backend configuration options

See the following table for the backend configuration options:

| Parameter | Description | Default |
|---|---|---|
| version | | Always 1 |
| storageDriverName | Name of the storage driver: ontap-nas, ontap-nas-economy, or ontap-nas-flexgroup | |
| backendName | Custom name for the storage backend | Driver name + "_" + dataLIF |
| managementLIF | IP address of a cluster or SVM management LIF | "10.0.0.1", "[2001:1234:abcd::fefe]" |
| dataLIF | IP address of the protocol LIF | Specified address or derived from SVM, if not specified (not recommended) |
| svm | Storage virtual machine to use | Derived if an SVM managementLIF is specified |
| autoExportPolicy | Enable automatic export policy creation and updating [Boolean] | false |
| autoExportCIDRs | List of CIDRs to filter Kubernetes' node IPs against when autoExportPolicy is enabled | ["0.0.0.0/0", "::/0"] |
| labels | Set of arbitrary JSON-formatted labels to apply on volumes | "" |
| clientCertificate | Base64-encoded value of client certificate. Used for certificate-based auth | "" |
| clientPrivateKey | Base64-encoded value of client private key. Used for certificate-based auth | "" |
| trustedCACertificate | Base64-encoded value of trusted CA certificate. Optional. Used for certificate-based auth | "" |
| username | Username to connect to the cluster/SVM. Used for credential-based auth | |
| password | Password to connect to the cluster/SVM. Used for credential-based auth | |
| storagePrefix | Prefix used when provisioning new volumes in the SVM. Cannot be updated after you set it | "trident" |
| aggregate | Aggregate for provisioning (optional; if set, must be assigned to the SVM). For the ontap-nas-flexgroup driver, this option is ignored. Do not specify for AFX storage systems. | "" |
| limitAggregateUsage | Fail provisioning if usage is above this percentage | "" (not enforced by default) |
| flexgroupAggregateList | List of aggregates for provisioning (optional; if set, must be assigned to the SVM). All aggregates assigned to the SVM are used to provision a FlexGroup volume. Supported for the ontap-nas-flexgroup storage driver. | "" |
| limitVolumeSize | Fail provisioning if the requested volume size is above this value | "" (not enforced by default) |
| debugTraceFlags | Debug flags to use when troubleshooting. For example: {"api":false, "method":true} | null |
| nasType | Configure NFS or SMB volume creation | |
| nfsMountOptions | Comma-separated list of NFS mount options | "" |
| qtreesPerFlexvol | Maximum Qtrees per FlexVol; must be in the range [50, 300] | "200" |
| smbShare | You can specify one of the following: the name of an SMB share created using the Microsoft Management Console or ONTAP CLI; a name to allow Trident to create the SMB share; or you can leave the parameter blank to prevent common share access to volumes. | |
| useREST | Boolean parameter to use ONTAP REST APIs | |
| limitVolumePoolSize | Maximum requestable FlexVol size when using Qtrees in an ontap-nas-economy backend | "" (not enforced by default) |
| denyNewVolumePools | Restricts ontap-nas-economy backends from creating new FlexVol volumes to contain their Qtrees | |
| adAdminUser | Active Directory admin user or user group with full access to SMB shares. Use this parameter to provide admin rights to the SMB share with full control. | |

Backend configuration options for provisioning volumes

You can control default provisioning using these options in the defaults section of the configuration. For an example, see the configuration examples below.

| Parameter | Description | Default |
|---|---|---|
| spaceAllocation | Space allocation for Qtrees | "true" |
| spaceReserve | Space reservation mode; "none" (thin) or "volume" (thick) | "none" |
| snapshotPolicy | Snapshot policy to use | "none" |
| qosPolicy | QoS policy group to assign for volumes created. Choose one of qosPolicy or adaptiveQosPolicy per storage pool/backend | "" |
| adaptiveQosPolicy | Adaptive QoS policy group to assign for volumes created. Choose one of qosPolicy or adaptiveQosPolicy per storage pool/backend | "" |
| snapshotReserve | Percentage of volume reserved for snapshots | "0" if snapshotPolicy is "none", otherwise "" |
| splitOnClone | Split a clone from its parent upon creation | "false" |
| encryption | Enable NetApp Volume Encryption (NVE) on the new volume | "false" |
| tieringPolicy | Tiering policy to use | "none" |
| unixPermissions | Mode for new volumes | "777" for NFS volumes; empty (not applicable) for SMB volumes |
| snapshotDir | Controls access to the .snapshot directory | "true" for NFSv4 |
| exportPolicy | Export policy to use | "default" |
| securityStyle | Security style for new volumes | NFS default is unix; SMB default is ntfs |
| nameTemplate | Template to create custom volume names | "" |

Using QoS policy groups with Trident requires ONTAP 9.8 or later. You should use a non-shared QoS policy group and ensure that the policy group is applied to each constituent individually. A shared QoS policy group enforces the ceiling for the total throughput of all workloads.

Volume provisioning examples

Here's an example with defaults defined:

---
version: 1
storageDriverName: ontap-nas
backendName: customBackendName
managementLIF: 10.0.0.1
dataLIF: 10.0.0.2
labels:
  k8scluster: dev1
  backend: dev1-nasbackend
svm: trident_svm
username: cluster-admin
password: <password>
limitAggregateUsage: 80%
limitVolumeSize: 50Gi
nfsMountOptions: nfsvers=4
debugTraceFlags:
  api: false
  method: true
defaults:
  spaceReserve: volume
  qosPolicy: premium
  exportPolicy: myk8scluster
  snapshotPolicy: default
  snapshotReserve: "10"

For ontap-nas and ontap-nas-flexgroup, Trident uses a calculation (introduced in v21.07) that sizes the FlexVol correctly for both the snapshotReserve percentage and the PVC. When the user requests a PVC, Trident creates the FlexVol with additional space so that the user receives the writable space requested in the PVC, not less. Before v21.07, when the user requested a PVC (for example, 5 GiB) with snapshotReserve set to 50 percent, they got only 2.5 GiB of writable space, because the requested size was treated as the whole volume and snapshotReserve as a percentage of it. Starting with Trident 21.07, the requested size is the writable space, and Trident applies the snapshotReserve percentage to the whole volume. This does not apply to ontap-nas-economy. The following example shows how this works:

The calculation is as follows:

Total volume size = <PVC requested size> / (1 - (<snapshotReserve percentage> / 100))

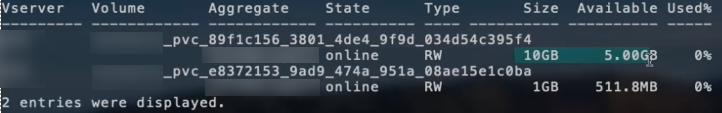

For snapshotReserve = 50% and a PVC request of 5 GiB, the total volume size is 5 / 0.5 = 10 GiB and the available size is 5 GiB, which is what the user requested in the PVC. The volume show command should show results similar to this example:

After you upgrade Trident, existing backends from previous installs provision volumes as explained above. For volumes that you created before upgrading, resize them for the change to take effect. For example, a 2 GiB PVC with snapshotReserve=50 previously resulted in a volume that provides 1 GiB of writable space. Resizing the volume to 3 GiB, for example, provides the application with 3 GiB of writable space on a 6 GiB volume.
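The sizing formula can be checked with a few lines of Python. This is a minimal sketch of the documented formula only; the function name total_volume_size_gib is hypothetical, and the sketch is not Trident's code (it ignores any ONTAP rounding or metadata overhead).

```python
def total_volume_size_gib(requested_gib: float, snapshot_reserve_pct: float) -> float:
    """Total FlexVol size that keeps requested_gib writable after the snapshot reserve.

    Implements: total = requested / (1 - snapshotReserve / 100).
    Applies to ontap-nas and ontap-nas-flexgroup, not to ontap-nas-economy.
    """
    if not 0 <= snapshot_reserve_pct < 100:
        raise ValueError("snapshotReserve must be in the range [0, 100)")
    return requested_gib / (1 - snapshot_reserve_pct / 100)


if __name__ == "__main__":
    # 5 GiB PVC with snapshotReserve = 50: 10 GiB volume, 5 GiB writable
    print(total_volume_size_gib(5, 50))  # 10.0
    # Pre-upgrade PVC resized to 3 GiB with snapshotReserve = 50: 6 GiB volume
    print(total_volume_size_gib(3, 50))  # 6.0
```

Both printed values match the worked examples in the text.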

Minimal configuration examples

The following examples show basic configurations that leave most parameters to default. This is the easiest way to define a backend.

If you are using Amazon FSx for NetApp ONTAP with Trident, NetApp recommends specifying DNS names for LIFs instead of IP addresses.

ONTAP NAS economy example

---
version: 1
storageDriverName: ontap-nas-economy
managementLIF: 10.0.0.1
dataLIF: 10.0.0.2
svm: svm_nfs
username: vsadmin
password: password

ONTAP NAS FlexGroup example

---
version: 1
storageDriverName: ontap-nas-flexgroup
managementLIF: 10.0.0.1
dataLIF: 10.0.0.2
svm: svm_nfs
username: vsadmin
password: password

MetroCluster example

You can configure the backend to avoid having to manually update the backend definition after switchover and switchback during SVM replication and recovery.

For seamless switchover and switchback, specify the SVM using managementLIF and omit the dataLIF and svm parameters. For example:

---
version: 1
storageDriverName: ontap-nas
managementLIF: 192.168.1.66
username: vsadmin
password: password

SMB volumes example

---
version: 1
backendName: ExampleBackend
storageDriverName: ontap-nas
managementLIF: 10.0.0.1
nasType: smb
securityStyle: ntfs
unixPermissions: ""
dataLIF: 10.0.0.2
svm: svm_nfs
username: vsadmin
password: password

Certificate-based authentication example

This is a minimal backend configuration example. clientCertificate, clientPrivateKey, and trustedCACertificate (optional, if using a trusted CA) are populated in the backend configuration file and take the base64-encoded values of the client certificate, private key, and trusted CA certificate, respectively.
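One way to produce these values is to base64-encode each PEM file as a single line. The following Python sketch does that with the standard base64 module; the file names client.crt, client.key, and ca.crt are hypothetical placeholders, and any tool that emits unwrapped base64 should give the same strings.

```python
import base64
from pathlib import Path


def pem_to_backend_value(pem_bytes: bytes) -> str:
    """Base64-encode the full PEM file contents as one line with no wrapping."""
    return base64.b64encode(pem_bytes).decode("ascii")


# Hypothetical file names; replace them with the paths of your own PEM files.
FIELD_TO_FILE = {
    "clientCertificate": "client.crt",
    "clientPrivateKey": "client.key",
    "trustedCACertificate": "ca.crt",  # optional: only needed with a trusted CA
}

if __name__ == "__main__":
    for field, filename in FIELD_TO_FILE.items():
        path = Path(filename)
        if path.is_file():
            # Print lines that can be pasted into the backend configuration.
            print(f"{field}: {pem_to_backend_value(path.read_bytes())}")
        else:
            print(f"# skipped {field}: {filename} not found")
```

base64.b64encode never inserts line breaks, which keeps each value on one YAML line.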

---
version: 1
backendName: DefaultNASBackend
storageDriverName: ontap-nas
managementLIF: 10.0.0.1
dataLIF: 10.0.0.15
svm: nfs_svm
clientCertificate: ZXR0ZXJwYXB...ICMgJ3BhcGVyc2
clientPrivateKey: vciwKIyAgZG...0cnksIGRlc2NyaX
trustedCACertificate: zcyBbaG...b3Igb3duIGNsYXNz
storagePrefix: myPrefix_

Auto export policy example

This example shows how to instruct Trident to use dynamic export policies to create and manage the export policy automatically. This works the same way for the ontap-nas-economy and ontap-nas-flexgroup drivers.
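With autoExportPolicy enabled, autoExportCIDRs acts as a filter on the Kubernetes node IPs. The Python sketch below models only that filtering step with the standard ipaddress module; it is a simplified illustration, not Trident's implementation, and the function name filter_node_ips is hypothetical. The backend configuration for this example follows the sketch.

```python
import ipaddress

# Default documented for autoExportCIDRs: every IPv4 and every IPv6 address.
DEFAULT_CIDRS = ["0.0.0.0/0", "::/0"]


def filter_node_ips(node_ips, cidrs=None):
    """Return the node IPs inside at least one CIDR, preserving input order.

    Assumption of this sketch: an unset or empty CIDR list falls back to the
    default. An IPv4 address never matches an IPv6 network (or vice versa),
    because ipaddress membership tests return False across IP versions.
    """
    networks = [ipaddress.ip_network(c) for c in (cidrs or DEFAULT_CIDRS)]
    allowed = []
    for ip in node_ips:
        addr = ipaddress.ip_address(ip)
        if any(addr in net for net in networks):
            allowed.append(ip)
    return allowed


if __name__ == "__main__":
    nodes = ["10.0.0.11", "10.0.0.12", "10.0.1.20", "fd00::5"]
    print(filter_node_ips(nodes, ["10.0.0.0/24"]))  # ['10.0.0.11', '10.0.0.12']
    print(filter_node_ips(nodes))                   # all four addresses
```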

---
version: 1
storageDriverName: ontap-nas
managementLIF: 10.0.0.1
dataLIF: 10.0.0.2
svm: svm_nfs
labels:
  k8scluster: test-cluster-east-1a
  backend: test1-nasbackend
autoExportPolicy: true
autoExportCIDRs:
  - 10.0.0.0/24
username: admin
password: password
nfsMountOptions: nfsvers=4

IPv6 addresses example

This example shows managementLIF using an IPv6 address.
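As in this example, an IPv6 managementLIF is written inside square brackets. If you generate backend files from a list of addresses, a helper such as the hypothetical format_lif below (a Python sketch, not part of Trident or tridentctl) can apply that convention while leaving IPv4 addresses and DNS names unchanged.

```python
import ipaddress


def format_lif(value: str) -> str:
    """Wrap a bare IPv6 address in square brackets; return anything else unchanged."""
    candidate = value.strip()
    if candidate.startswith("[") and candidate.endswith("]"):
        return candidate  # already bracketed
    try:
        addr = ipaddress.ip_address(candidate)
    except ValueError:
        return candidate  # not an IP literal, for example a DNS name
    return f"[{candidate}]" if addr.version == 6 else candidate
```

Quote the result in YAML, as the example does, because an unquoted value starting with [ is parsed as a YAML flow sequence.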

---
version: 1
storageDriverName: ontap-nas
backendName: nas_ipv6_backend
managementLIF: "[5c5d:5edf:8f:7657:bef8:109b:1b41:d491]"
labels:
  k8scluster: test-cluster-east-1a
  backend: test1-ontap-ipv6
svm: nas_ipv6_svm
username: vsadmin
password: password

Amazon FSx for ONTAP using SMB volumes example

The smbShare parameter is required for FSx for ONTAP using SMB volumes.

---
version: 1
backendName: SMBBackend
storageDriverName: ontap-nas
managementLIF: example.mgmt.fqdn.aws.com
nasType: smb
dataLIF: 10.0.0.15
svm: nfs_svm
smbShare: smb-share
clientCertificate: ZXR0ZXJwYXB...ICMgJ3BhcGVyc2
clientPrivateKey: vciwKIyAgZG...0cnksIGRlc2NyaX
trustedCACertificate: zcyBbaG...b3Igb3duIGNsYXNz
storagePrefix: myPrefix_

Backend configuration example with nameTemplate

---
version: 1
storageDriverName: ontap-nas
backendName: ontap-nas-backend
managementLIF: <ip address>
svm: svm0
username: <admin>
password: <password>
defaults:
  nameTemplate: "{{.volume.Name}}_{{.labels.cluster}}_{{.volume.Namespace}}_{{.volume.RequestName}}"
labels:
  cluster: ClusterA
  PVC: "{{.volume.Namespace}}_{{.volume.RequestName}}"

Examples of backends with virtual pools

In the sample backend definition files shown below, specific defaults are set for all storage pools, such as spaceReserve set to none and encryption set to false. The virtual pools are defined in the storage section.

Trident sets provisioning labels in the "Comments" field. Comments are set on FlexVol for ontap-nas or FlexGroup for ontap-nas-flexgroup. Trident copies all labels present on a virtual pool to the storage volume at provisioning. For convenience, storage administrators can define labels per virtual pool and group volumes by label.

In these examples, each storage pool sets its own spaceReserve, encryption, and unixPermissions values, and some of these values override the backend-level defaults.
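The override behavior can be pictured as a dictionary overlay: a pool starts from the backend-level defaults, and any key set in the pool's own defaults replaces the backend value. The Python sketch below is a simplified model under that assumption, using values from the ontap-nas example that follows (the pool labeled app: wordpress). It does not reproduce Trident's validation rules, such as choosing only one of qosPolicy or adaptiveQosPolicy.

```python
def effective_defaults(backend_defaults: dict, pool_defaults: dict) -> dict:
    """Simplified model: pool-level keys override backend-level keys, and
    keys the pool does not set fall through from the backend."""
    merged = dict(backend_defaults)  # copy so the backend dict is not mutated
    merged.update(pool_defaults)
    return merged


# Backend-level defaults from the ontap-nas virtual pool example.
BACKEND = {"spaceReserve": "none", "encryption": "false", "qosPolicy": "standard"}

# Defaults of the pool labeled app: wordpress in the same example.
WORDPRESS_POOL = {"spaceReserve": "none", "encryption": "true", "unixPermissions": "0775"}

if __name__ == "__main__":
    print(effective_defaults(BACKEND, WORDPRESS_POOL))
    # {'spaceReserve': 'none', 'encryption': 'true', 'qosPolicy': 'standard', 'unixPermissions': '0775'}
```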

ONTAP NAS example

---
version: 1
storageDriverName: ontap-nas
managementLIF: 10.0.0.1
svm: svm_nfs
username: admin
password: <password>
nfsMountOptions: nfsvers=4
defaults:
  spaceReserve: none
  encryption: "false"
  qosPolicy: standard
labels:
  store: nas_store
  k8scluster: prod-cluster-1
region: us_east_1
storage:
  - labels:
      app: msoffice
      cost: "100"
    zone: us_east_1a
    defaults:
      spaceReserve: volume
      encryption: "true"
      unixPermissions: "0755"
      adaptiveQosPolicy: adaptive-premium
  - labels:
      app: slack
      cost: "75"
    zone: us_east_1b
    defaults:
      spaceReserve: none
      encryption: "true"
      unixPermissions: "0755"
  - labels:
      department: legal
      creditpoints: "5000"
    zone: us_east_1b
    defaults:
      spaceReserve: none
      encryption: "true"
      unixPermissions: "0755"
  - labels:
      app: wordpress
      cost: "50"
    zone: us_east_1c
    defaults:
      spaceReserve: none
      encryption: "true"
      unixPermissions: "0775"
  - labels:
      app: mysqldb
      cost: "25"
    zone: us_east_1d
    defaults:
      spaceReserve: volume
      encryption: "false"
      unixPermissions: "0775"

ONTAP NAS FlexGroup example

---
version: 1
storageDriverName: ontap-nas-flexgroup
managementLIF: 10.0.0.1
svm: svm_nfs
username: vsadmin
password: <password>
defaults:
  spaceReserve: none
  encryption: "false"
labels:
  store: flexgroup_store
  k8scluster: prod-cluster-1
region: us_east_1
storage:
  - labels:
      protection: gold
      creditpoints: "50000"
    zone: us_east_1a
    defaults:
      spaceReserve: volume
      encryption: "true"
      unixPermissions: "0755"
  - labels:
      protection: gold
      creditpoints: "30000"
    zone: us_east_1b
    defaults:
      spaceReserve: none
      encryption: "true"
      unixPermissions: "0755"
  - labels:
      protection: silver
      creditpoints: "20000"
    zone: us_east_1c
    defaults:
      spaceReserve: none
      encryption: "true"
      unixPermissions: "0775"
  - labels:
      protection: bronze
      creditpoints: "10000"
    zone: us_east_1d
    defaults:
      spaceReserve: volume
      encryption: "false"
      unixPermissions: "0775"

ONTAP NAS economy example

---
version: 1
storageDriverName: ontap-nas-economy
managementLIF: 10.0.0.1
svm: svm_nfs
username: vsadmin
password: <password>
defaults:
  spaceReserve: none
  encryption: "false"
labels:
  store: nas_economy_store
region: us_east_1
storage:
  - labels:
      department: finance
      creditpoints: "6000"
    zone: us_east_1a
    defaults:
      spaceReserve: volume
      encryption: "true"
      unixPermissions: "0755"
  - labels:
      protection: bronze
      creditpoints: "5000"
    zone: us_east_1b
    defaults:
      spaceReserve: none
      encryption: "true"
      unixPermissions: "0755"
  - labels:
      department: engineering
      creditpoints: "3000"
    zone: us_east_1c
    defaults:
      spaceReserve: none
      encryption: "true"
      unixPermissions: "0775"
  - labels:
      department: humanresource
      creditpoints: "2000"
    zone: us_east_1d
    defaults:
      spaceReserve: volume
      encryption: "false"
      unixPermissions: "0775"

Map backends to StorageClasses

The following StorageClass definitions refer to the virtual pools in Examples of backends with virtual pools. Using the parameters.selector field, each StorageClass specifies which virtual pools can be used to host a volume. The volume has the characteristics defined in the chosen virtual pool.

- The protection-gold StorageClass maps to the first and second virtual pools in the ontap-nas-flexgroup backend. These are the only pools offering gold-level protection.

  apiVersion: storage.k8s.io/v1
  kind: StorageClass
  metadata:
    name: protection-gold
  provisioner: csi.trident.netapp.io
  parameters:
    selector: "protection=gold"
    fsType: "ext4"

- The protection-not-gold StorageClass maps to the third and fourth virtual pools in the ontap-nas-flexgroup backend, which offer a protection level other than gold. The second virtual pool in the ontap-nas-economy backend is also labeled protection: bronze, so it matches this selector as well.

  apiVersion: storage.k8s.io/v1
  kind: StorageClass
  metadata:
    name: protection-not-gold
  provisioner: csi.trident.netapp.io
  parameters:
    selector: "protection!=gold"
    fsType: "ext4"

- The app-mysqldb StorageClass maps to the fifth (last) virtual pool in the ontap-nas backend. This is the only pool offering a storage pool configuration for the mysqldb app type.

  apiVersion: storage.k8s.io/v1
  kind: StorageClass
  metadata:
    name: app-mysqldb
  provisioner: csi.trident.netapp.io
  parameters:
    selector: "app=mysqldb"
    fsType: "ext4"

- The protection-silver-creditpoints-20k StorageClass maps to the third virtual pool in the ontap-nas-flexgroup backend. This is the only pool offering silver-level protection and 20000 creditpoints.

  apiVersion: storage.k8s.io/v1
  kind: StorageClass
  metadata:
    name: protection-silver-creditpoints-20k
  provisioner: csi.trident.netapp.io
  parameters:
    selector: "protection=silver; creditpoints=20000"
    fsType: "ext4"

- The creditpoints-5k StorageClass maps to the third virtual pool in the ontap-nas backend and the second virtual pool in the ontap-nas-economy backend. These are the only pools offering 5000 creditpoints.

  apiVersion: storage.k8s.io/v1
  kind: StorageClass
  metadata:
    name: creditpoints-5k
  provisioner: csi.trident.netapp.io
  parameters:
    selector: "creditpoints=5000"
    fsType: "ext4"

Trident decides which virtual pool is selected and ensures that the storage requirement is met.
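To reason about which pools a selector reaches, the following Python sketch evaluates semicolon-separated key=value and key!=value terms against the pool labels from the virtual pool examples. It is a simplified model, not Trident's matcher: it assumes every term must match and that a pool lacking a label never matches a term on that label (how Trident treats missing labels is not modeled). Backend-level labels such as store are omitted for brevity.

```python
# Pool labels copied from the virtual pool examples, in definition order.
POOLS = {
    "ontap-nas": [
        {"app": "msoffice", "cost": "100"},
        {"app": "slack", "cost": "75"},
        {"department": "legal", "creditpoints": "5000"},
        {"app": "wordpress", "cost": "50"},
        {"app": "mysqldb", "cost": "25"},
    ],
    "ontap-nas-flexgroup": [
        {"protection": "gold", "creditpoints": "50000"},
        {"protection": "gold", "creditpoints": "30000"},
        {"protection": "silver", "creditpoints": "20000"},
        {"protection": "bronze", "creditpoints": "10000"},
    ],
    "ontap-nas-economy": [
        {"department": "finance", "creditpoints": "6000"},
        {"protection": "bronze", "creditpoints": "5000"},
        {"department": "engineering", "creditpoints": "3000"},
        {"department": "humanresource", "creditpoints": "2000"},
    ],
}


def parse_selector(selector: str) -> list[tuple[str, str, str]]:
    """Split 'a=b; c!=d' into (key, operator, value) tuples."""
    terms = []
    for raw in selector.split(";"):
        term = raw.strip()
        if not term:
            continue
        op = "!=" if "!=" in term else "="  # check '!=' first: it contains '='
        if op not in term:
            raise ValueError(f"unsupported selector term: {term!r}")
        key, value = term.split(op, 1)
        terms.append((key.strip(), op, value.strip()))
    return terms


def pool_matches(labels: dict, terms) -> bool:
    for key, op, value in terms:
        if key not in labels:
            return False  # assumption: a missing label never matches
        if (labels[key] == value) != (op == "="):
            return False
    return True


def matching_pools(selector: str) -> list[tuple[str, int]]:
    """Return (backend, 1-based pool position) pairs that satisfy the selector."""
    terms = parse_selector(selector)
    return [
        (backend, position)
        for backend, pools in POOLS.items()
        for position, labels in enumerate(pools, start=1)
        if pool_matches(labels, terms)
    ]


if __name__ == "__main__":
    # [('ontap-nas-flexgroup', 1), ('ontap-nas-flexgroup', 2)]
    print(matching_pools("protection=gold"))
    # [('ontap-nas-flexgroup', 3)]
    print(matching_pools("protection=silver; creditpoints=20000"))
    # [('ontap-nas', 3), ('ontap-nas-economy', 2)]
    print(matching_pools("creditpoints=5000"))
```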

Update dataLIF after initial configuration

You can change the dataLIF after initial configuration by running the following command and providing the new backend JSON file with the updated dataLIF.

tridentctl update backend <backend-name> -f <path-to-backend-json-file-with-updated-dataLIF>

If PVCs are attached to one or multiple pods, you must bring down all corresponding pods and then bring them back up for the new dataLIF to take effect.
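If you keep the backend definition as a JSON file, you can update its dataLIF programmatically before running tridentctl update backend. The following Python sketch uses only the standard json module; the function name, script name, and file path are hypothetical, and it assumes dataLIF is a top-level key, as in the backend examples on this page.

```python
import json
import sys
from pathlib import Path


def with_new_data_lif(config_text: str, new_data_lif: str) -> str:
    """Return the backend JSON text with its top-level dataLIF replaced."""
    config = json.loads(config_text)
    config["dataLIF"] = new_data_lif
    return json.dumps(config, indent=2) + "\n"


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python set_datalif.py backend.json 10.0.0.3
    path = Path(sys.argv[1])
    path.write_text(with_new_data_lif(path.read_text(), sys.argv[2]))
```

The rewritten file is then the one you pass to the tridentctl update backend command.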

Secure SMB examples

Backend configuration with ontap-nas driver

apiVersion: trident.netapp.io/v1
kind: TridentBackendConfig
metadata:
  name: backend-tbc-ontap-nas
  namespace: trident
spec:
  version: 1
  storageDriverName: ontap-nas
  managementLIF: 10.0.0.1
  svm: svm2
  nasType: smb
  defaults:
    adAdminUser: tridentADtest
  credentials:
    name: backend-tbc-ontap-invest-secret

Backend configuration with ontap-nas-economy driver

apiVersion: trident.netapp.io/v1
kind: TridentBackendConfig
metadata:
  name: backend-tbc-ontap-nas
  namespace: trident
spec:
  version: 1
  storageDriverName: ontap-nas-economy
  managementLIF: 10.0.0.1
  svm: svm2
  nasType: smb
  defaults:
    adAdminUser: tridentADtest
  credentials:
    name: backend-tbc-ontap-invest-secret

Backend configuration with storage pool

apiVersion: trident.netapp.io/v1
kind: TridentBackendConfig
metadata:
  name: backend-tbc-ontap-nas
  namespace: trident
spec:
  version: 1
  storageDriverName: ontap-nas
  managementLIF: 10.0.0.1
  svm: svm0
  useREST: false
  storage:
    - labels:
        app: msoffice
      defaults:
        adAdminUser: tridentADuser
  nasType: smb
  credentials:
    name: backend-tbc-ontap-invest-secret

Storage class example with ontap-nas driver

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ontap-smb-sc
  annotations:
    trident.netapp.io/smbShareAdUserPermission: change
    trident.netapp.io/smbShareAdUser: tridentADtest
parameters:
  backendType: ontap-nas
  csi.storage.k8s.io/node-stage-secret-name: smbcreds
  csi.storage.k8s.io/node-stage-secret-namespace: trident
  trident.netapp.io/nasType: smb
provisioner: csi.trident.netapp.io
reclaimPolicy: Delete
volumeBindingMode: Immediate

Ensure that you add the annotations to enable secure SMB. Secure SMB does not work without the annotations, regardless of the configurations set in the backend or PVC.

Storage class example with ontap-nas-economy driver

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ontap-smb-sc
  annotations:
    trident.netapp.io/smbShareAdUserPermission: change
    trident.netapp.io/smbShareAdUser: tridentADuser3
parameters:
  backendType: ontap-nas-economy
  csi.storage.k8s.io/node-stage-secret-name: smbcreds
  csi.storage.k8s.io/node-stage-secret-namespace: trident
  trident.netapp.io/nasType: smb
provisioner: csi.trident.netapp.io
reclaimPolicy: Delete
volumeBindingMode: Immediate

PVC example with a single AD user

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc4
  namespace: trident
  annotations:
    trident.netapp.io/smbShareAccessControl: |
      change:
        - tridentADtest
      read:
        - tridentADuser
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: ontap-smb-sc

PVC example with multiple AD users

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-test-pvc
  annotations:
    trident.netapp.io/smbShareAccessControl: |
      full_control:
        - tridentTestuser
        - tridentuser
        - tridentTestuser1
        - tridentuser1
      change:
        - tridentADuser
        - tridentADuser1
        - tridentADuser4
        - tridentTestuser2
      read:
        - tridentTestuser2
        - tridentTestuser3
        - tridentADuser2
        - tridentADuser3
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
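If you generate PVC manifests from code, the trident.netapp.io/smbShareAccessControl annotation value is a small block of text that maps each permission level (full_control, change, and read in the examples above) to a list of AD users or groups. The following Python sketch builds that text without third-party libraries; the function name is hypothetical, and it only formats the text, leaving validation of permission names and users to Trident.

```python
def smb_share_access_control(acl: dict[str, list[str]]) -> str:
    """Render {permission: [users]} as the text for the
    trident.netapp.io/smbShareAccessControl annotation."""
    lines = []
    for permission, users in acl.items():
        lines.append(f"{permission}:")
        lines.extend(f"  - {user}" for user in users)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Same access list as the single AD user PVC example.
    print(smb_share_access_control({"change": ["tridentADtest"], "read": ["tridentADuser"]}), end="")
```

Place the returned text under the annotation key as a YAML literal block (|), indented beneath it as in the PVC examples.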