Vertica Eon mode database using NetApp StorageGRID as communal storage

Suggest changes

Suggest changes

By Angela Cheng

This guide describes the procedure to create a Vertica Eon Mode database with communal storage on NetApp StorageGRID.

Introduction

Vertica is an analytic database management software. It is a columnar storage platform designed to handle large volumes of data, which enables very fast query performance in a traditionally intensive scenario.

A Vertica database runs in one of the two modes: Eon or Enterprise. You can deploy both modes on-premises or in the cloud.

Eon and Enterprise modes primarily differ in where they store data:

-

Eon Mode databases use communal storage for their data. This is recommended by Vertica.

-

Enterprise Mode databases store data locally in the file system of nodes that make up the database.

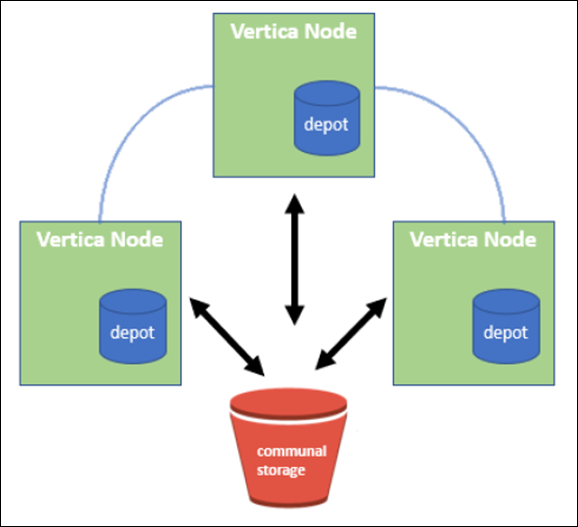

Eon Mode architecture

Eon Mode separates the computational resources from the communal storage layer of the database, which allows the compute and storage to scale separately. Vertica in Eon Mode is optimized to address variable workloads and isolate them from one another by using separate compute and storage resources.

Eon Mode stores data in a shared object store called communal storage—an S3 bucket, either hosted on premises or on Amazon S3.

Communal storage

Instead of storing data locally, Eon Mode uses a single communal storage location for all data and the catalog (metadata). Communal storage is the database's centralized storage location, shared among the database nodes.

Communal storage has the following properties:

-

Communal storage in the cloud or on-premises object storage is more resilient and less susceptible to data loss due to storage failures than storage on disk on individual machines.

-

Any data can be read by any node using the same path.

-

Capacity is not limited by disk space on nodes.

-

Because data is stored communally, you can elastically scale your cluster to meet changing demands. If the data were stored locally on the nodes, adding or removing nodes would require moving significant amounts of data between nodes to either move it off nodes that are being removed, or onto newly created nodes.

The depot

One drawback of communal storage is its speed. Accessing data from a shared cloud location is slower than reading it from local disk. Also, the connection to communal storage can become a bottleneck if many nodes are reading data from it at once. To improve data access speed, the nodes in an Eon Mode database maintain a local disk cache of data called the depot. When executing a query, the nodes first check whether the data it needs is in the depot. If it is, then it finishes the query by using the local copy of the data. If the data is not in the depot, the node fetches the data from communal storage, and saves a copy in the depot.

NetApp StorageGRID recommendations

Vertica stores database data to object storage as thousands (or millions) of compressed objects (observed size is 200 to 500MB per object. When a user runs database queries, Vertica retrieves the selected range of data from these compressed objects in parallel using the byte-range GET call. Each byte-range GET is approximately 8KB.

During the 10TB database depot off user queries test, 4,000 to 10,000 GET (byte-range GET) requests per second were sent to the grid. When running this test using SG6060 appliances, though the CPU% utilization % per appliance node is low (around 20% to 30%), 2/3 of CPU time is waiting for I/O. A very small percentage (0% to 0.5%) of I/O wait is observed on the SGF6024.

Due to the high demand of small IOPS with very low latency requirements (the average should be less than 0.01 seconds), NetApp recommends using the SFG6024 for object storage services. If the SG6060 is needed for very large database sizes, the customer should work with the Vertica account team on depot sizing to support the actively queried dataset.

For the Admin Node and API Gateway Node, the customer can use the SG100 or SG1000. The choice depends on the number of users’ query requests in parallel and database size. If the customer prefers to use a third-party load balancer, NetApp recommends a dedicated load balancer for high performance demand workload. For StorageGRID sizing, consult the NetApp account team.

Other StorageGRID configuration recommendations include:

-

Grid topology. Do not mix the SGF6024 with other storage appliance models on the same grid site. If you prefer to use the SG6060 for long term archive protection, keep the SGF6024 with a dedicated grid load balancer in its own grid site (either physical or logical site) for an active database to enhance performance. Mixing different models of appliance on same site reduces the overall performance at the site.

-

Data protection. Use replicate copies for protection. Do not use erasure coding for an active database. The customer can use erasure coding for long term protection of inactive databases.

-

Do not enable grid compression. Vertica compresses objects before storing to object storage. Enabling grid compression does not further save storage usage and significantly reduces byte-range GET performance.

-

HTTP versus HTTPs S3 endpoint connection. During the benchmark test, we observed about 5% performance improvement when using an HTTP S3 connection from the Vertica cluster to the StorageGRID load balancer endpoint. This choice should be based on customer security requirements.

Recommendations for a Vertica configuration include:

-

Vertica database default depot settings are enabled (value = 1) for read and write operations. NetApp strongly recommends keeping these depot settings enabled to enhance performance.

-

Disable streaming limitations. For configuration details, see the section Disabling streaming limitations.

Installing Eon Mode on-premises with communal storage on StorageGRID

The following sections describe the procedure, in order, to install Eon Mode on-premises with communal storage on StorageGRID. The procedure to configure on-premises Simple Storage Service (S3) compatible object storage is similar to the procedure in the Vertica guide, Install an Eon Mode Database on-premises.

The following setup was used for the functional test:

-

StorageGRID 11.4.0.4

-

Vertica 10.1.0

-

Three virtual machines (VMs) with Centos 7.x OS for Vertica nodes to form a cluster. This setup is for the functional test only, not for the Vertica production database cluster.

These three nodes are set up with a Secure Shell (SSH) key to allow SSH without a password between the nodes within the cluster.

Information required from NetApp StorageGRID

To install Eon Mode on-premises with communal storage on StorageGRID, you must have the following prerequisite information.

-

IP address or fully qualified domain name (FQDN) and port number of the StorageGRID S3 endpoint. If

you are using HTTPS, use a custom certificate authority (CA) or self-signed SSL certificate

implemented on the StorageGRID S3 endpoint. -

Bucket name. It must pre-exist and be empty.

-

Access key ID and secret access key with read and write access to the bucket.

Creating an authorization file to access the S3 endpoint

The following prerequisites apply when creating an authorization file to access the S3 endpoint:

-

Vertica is installed.

-

A cluster is set up, configured, and ready for database creation.

To create an authorization file to access the S3 endpoint, follow these steps:

-

Log in to the Vertica node where you will run

admintoolsto create the Eon Mode database.The default user is

dbadmin, created during the Vertica cluster installation. -

Use a text editor to create a file under the

/home/dbadmindirectory.

The file name can be anything you want, for example,sg_auth.conf. -

If the S3 endpoint is using a standard HTTP port 80 or HTTPS port 443, skip the port number. To use

HTTPS, set the following values:-

awsenablehttps = 1, otherwise set the value to0. -

awsauth = <s3 access key ID>:<secret access key> -

awsendpoint = <StorageGRID s3 endpoint>:<port>To use a custom CA or self-signed SSL certificate for the StorageGRID S3 endpoint HTTPS connection, specify the full file path and filename of the certificate. This file must be at the same location on each Vertica node and have read permission for all users. Skip this step if StorageGRID

S3 Endpoint SSL certificate is signed by publicly known CA.− awscafile = <filepath/filename>For example, see the following sample file:

awsauth = MNVU4OYFAY2xyz123:03vuO4M4KmdfwffT8nqnBmnMVTr78Gu9wANabcxyz awsendpoint = s3.england.connectlab.io:10443 awsenablehttps = 1 awscafile = /etc/custom-cert/grid.pem

In a production environment, the customer should implement a server certificate signed by a publicly known CA on a StorageGRID S3 load balancer endpoint.

-

Choosing a depot path on all Vertica nodes

Choose or create a directory on each node for the depot storage path.

The directory you supply for the depot storage path parameter must have the following:

-

The same path on all nodes in the cluster (for example,

/home/dbadmin/depot) -

Be readable and writable by the dbadmin user

-

Sufficient storage

By default, Vertica uses 60% of the file system space containing the directory for depot storage. You can limit the size of the depot by using the

--depot-sizeargument in thecreate_dbcommand. See Sizing Your Vertica Cluster for an Eon Mode Database article for general Vertica sizing guidelines or consult with your Vertica account manager.The

admintools create_dbtool attempts to create the depot path for you if one does not exist.

Creating the Eon on-premises database

To create the Eon on-premises database, follow these steps:

-

To create the database, use the

admintools create_dbtool.The following list provides a brief explanation of arguments used in this example. See the Vertica document for a detailed explanation of all required and optional arguments.

-

-x <path/filename of authorization file created in “Creating an authorization file to access the S3 endpoint” >.

The authorization details are stored inside database after successful creation. You can remove this file to avoid exposing the S3 secret key.

-

--communal-storage-location <s3://storagegrid bucketname>

-

-s <comma-separated list of Vertica nodes to be used for this database>

-

-d <name of database to be created>

-

-p <password to be set for this new database>.

For example, see the following sample command:admintools -t create_db -x sg_auth.conf --communal-storage-location=s3://vertica --depot-path=/home/dbadmin/depot --shard-count=6 -s vertica-vm1,vertica-vm2,vertica-vm3 -d vmart -p '<password>'

Creating a new database takes several minutes duration depending on number of nodes for the database. When creating database for the first time, you will be prompted to accept the License Agreement.

-

For example, see the following sample authorization file and create db command:

[dbadmin@vertica-vm1 ~]$ cat sg_auth.conf

awsauth = MNVU4OYFAY2CPKVXVxxxx:03vuO4M4KmdfwffT8nqnBmnMVTr78Gu9wAN+xxxx

awsendpoint = s3.england.connectlab.io:10445

awsenablehttps = 1

[dbadmin@vertica-vm1 ~]$ admintools -t create_db -x sg_auth.conf --communal-storage-location=s3://vertica --depot-path=/home/dbadmin/depot --shard-count=6 -s vertica-vm1,vertica-vm2,vertica-vm3 -d vmart -p 'xxxxxxxx'

Default depot size in use

Distributing changes to cluster.

Creating database vmart

Starting bootstrap node v_vmart_node0007 (10.45.74.19)

Starting nodes:

v_vmart_node0007 (10.45.74.19)

Starting Vertica on all nodes. Please wait, databases with a large catalog may take a while to initialize.

Node Status: v_vmart_node0007: (DOWN)

Node Status: v_vmart_node0007: (DOWN)

Node Status: v_vmart_node0007: (DOWN)

Node Status: v_vmart_node0007: (UP)

Creating database nodes

Creating node v_vmart_node0008 (host 10.45.74.29)

Creating node v_vmart_node0009 (host 10.45.74.39)

Generating new configuration information

Stopping single node db before adding additional nodes.

Database shutdown complete

Starting all nodes

Start hosts = ['10.45.74.19', '10.45.74.29', '10.45.74.39']

Starting nodes:

v_vmart_node0007 (10.45.74.19)

v_vmart_node0008 (10.45.74.29)

v_vmart_node0009 (10.45.74.39)

Starting Vertica on all nodes. Please wait, databases with a large catalog may take a while to initialize.

Node Status: v_vmart_node0007: (DOWN) v_vmart_node0008: (DOWN) v_vmart_node0009: (DOWN)

Node Status: v_vmart_node0007: (DOWN) v_vmart_node0008: (DOWN) v_vmart_node0009: (DOWN)

Node Status: v_vmart_node0007: (DOWN) v_vmart_node0008: (DOWN) v_vmart_node0009: (DOWN)

Node Status: v_vmart_node0007: (DOWN) v_vmart_node0008: (DOWN) v_vmart_node0009: (DOWN)

Node Status: v_vmart_node0007: (UP) v_vmart_node0008: (UP) v_vmart_node0009: (UP)

Creating depot locations for 3 nodes

Communal storage detected: rebalancing shards

Waiting for rebalance shards. We will wait for at most 36000 seconds.

Installing AWS package

Success: package AWS installed

Installing ComplexTypes package

Success: package ComplexTypes installed

Installing MachineLearning package

Success: package MachineLearning installed

Installing ParquetExport package

Success: package ParquetExport installed

Installing VFunctions package

Success: package VFunctions installed

Installing approximate package

Success: package approximate installed

Installing flextable package

Success: package flextable installed

Installing kafka package

Success: package kafka installed

Installing logsearch package

Success: package logsearch installed

Installing place package

Success: package place installed

Installing txtindex package

Success: package txtindex installed

Installing voltagesecure package

Success: package voltagesecure installed

Syncing catalog on vmart with 2000 attempts.

Database creation SQL tasks completed successfully. Database vmart created successfully.

| Object size (byte) | Bucket/object key full path |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Disabling streaming limitations

This procedure is based on the Vertica guide for other on-premises object storage and should be applicable to StorageGRID.

-

After creating the database, disable the

AWSStreamingConnectionPercentageconfiguration parameter by setting it to0.

This setting is unnecessary for an Eon Mode on-premises installation with communal storage. This configuration parameter controls the number of connections to the object store that Vertica uses for streaming reads. In a cloud environment, this setting helps avoid having streaming data from the object store use up all the available file handles. It leaves some file handles available for other object store operations. Due to the low latency of on-premises object stores, this option is unnecessary. -

Use a

vsqlstatement to update the parameter value.

The password is the database password that you set in “Creating the Eon on-premises database”.

For example, see the following sample output:

[dbadmin@vertica-vm1 ~]$ vsql

Password:

Welcome to vsql, the Vertica Analytic Database interactive terminal.

Type: \h or \? for help with vsql commands

\g or terminate with semicolon to execute query

\q to quit

dbadmin=> ALTER DATABASE DEFAULT SET PARAMETER AWSStreamingConnectionPercentage = 0; ALTER DATABASE

dbadmin=> \q

Verifying depot settings

Vertica database default depot settings are enabled (value = 1) for read and write operations. NetApp strongly recommends keeping these depot settings enabled to enhance performance.

vsql -c 'show current all;' | grep -i UseDepot DATABASE | UseDepotForReads | 1 DATABASE | UseDepotForWrites | 1

Loading sample data (optional)

If this database is for testing and will be removed, you can load sample data to this database for testing. Vertica comes with sample dataset, VMart, found under /opt/vertica/examples/VMart_Schema/ on each Vertica node.

You can find more information about this sample dataset here.

Follow these steps to load the sample data:

-

Log in as dbadmin to one of the Vertica nodes: cd /opt/vertica/examples/VMart_Schema/

-

Load sample data to the database and enter the database password when prompted in substeps c and d:

-

cd /opt/vertica/examples/VMart_Schema -

./vmart_gen -

vsql < vmart_define_schema.sql -

vsql < vmart_load_data.sql

-

-

There are multiple predefined SQL queries, you can run some of them to confirm test data are loaded successfully into the database.

For example:vsql < vmart_queries1.sql

Where to find additional information

To learn more about the information that is described in this document, review the following documents and/or websites:

Version history

| Version | Date | Document version history |

|---|---|---|

Version 1.0 |

September 2021 |

Initial release. |

By Angela Cheng