Validation Results

To run a sample inference request, complete the following steps:

- Get a shell to the client container/pod.

  kubectl exec --stdin --tty <<client_pod_name>> -- /bin/bash

-

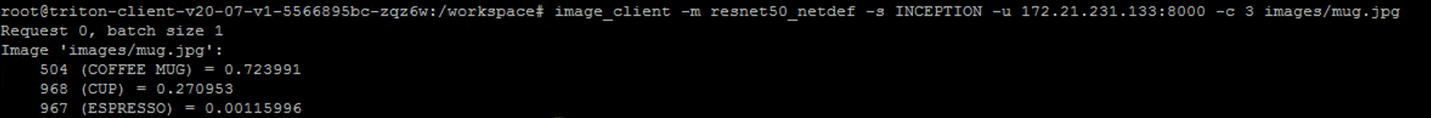

Run a sample inference request.

image_client -m resnet50_netdef -s INCEPTION -u <<LoadBalancer_IP_recorded earlier>>:8000 -c 3 images/mug.jpg
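If you do not need an interactive session, the two steps above can be combined into a single non-interactive `kubectl exec` call. The following is a minimal sketch, not part of the validated procedure: the function name `run_sample_inference` is hypothetical, and it assumes that `images/mug.jpg` resolves relative to the client container's default working directory, as it does in the interactive shell. If the client pod runs more than one container, you may also need to pass `-c <container>` to `kubectl exec`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the sample inference request inside the client pod
# without opening an interactive shell.
#   $1 = client pod name (the <<client_pod_name>> used above)
#   $2 = LoadBalancer IP recorded earlier
run_sample_inference() {
  local pod="$1" lb_ip="$2"
  # Everything after "--" is executed inside the client container.
  # No --stdin/--tty flags: the command needs no terminal input.
  kubectl exec "$pod" -- \
    image_client -m resnet50_netdef -s INCEPTION -u "${lb_ip}:8000" -c 3 images/mug.jpg
}

# Usage (replace the placeholders with your own values):
#   run_sample_inference <<client_pod_name>> <<LoadBalancer_IP_recorded earlier>>
```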

This inference request calls the resnet50_netdef model, which is used for image recognition. Other clients can send inference requests concurrently in the same way, specifying the appropriate model in each request.
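Concurrent requests can be sketched as a small Bash function that starts one `image_client` process per model/image pair in the background and then waits for all of them. This is an illustrative sketch only: the function name `send_concurrent_requests` and the `model:image` argument format are hypothetical, and the `-s INCEPTION` and `-c 3` options are copied from the sample request above, so they may need to change for other models. Output from concurrent clients can interleave on the terminal.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send several inference requests concurrently.
#   $1     = LoadBalancer IP recorded earlier
#   $2...  = one "model:image" pair per request
# Returns non-zero if any of the requests failed.
send_concurrent_requests() {
  local lb_ip="$1"; shift
  local -a pids=()
  local pair model image
  for pair in "$@"; do
    model="${pair%%:*}"   # text before the first colon
    image="${pair#*:}"    # text after the first colon
    # Each request runs as a separate background client process.
    image_client -m "$model" -s INCEPTION -u "${lb_ip}:8000" -c 3 "$image" &
    pids+=("$!")
  done
  # Wait for every client and remember whether any of them failed.
  local status=0 pid
  for pid in "${pids[@]}"; do
    wait "$pid" || status=1
  done
  return "$status"
}

# Usage from inside the client container, for example two parallel requests:
#   send_concurrent_requests <<LoadBalancer_IP_recorded earlier>> \
#     resnet50_netdef:images/mug.jpg resnet50_netdef:images/mug.jpg
```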