Fractional GPU Allocation for Less Demanding or Interactive Workloads

When researchers and developers work on their models, whether in the development, hyperparameter tuning, or debugging stage, their workloads usually require fewer computational resources. It is therefore more efficient to provision a fraction of a GPU and its memory so that the same GPU can be allocated to other workloads at the same time. Run:AI’s orchestration solution provides a fractional GPU sharing system for containerized workloads on Kubernetes. The system supports workloads running CUDA programs and is especially suited for lightweight AI tasks such as inference and model building. The fractional GPU system transparently gives data science and AI engineering teams the ability to run multiple workloads simultaneously on a single GPU. This enables companies to run more workloads, such as computer vision, voice recognition, and natural language processing, on the same hardware, thus lowering costs.

Run:AI’s fractional GPU system effectively creates virtualized logical GPUs with their own memory and computing space that containers can use and access as if they were self-contained processors. This enables several workloads to run in containers side by side on the same GPU without interfering with each other. The solution is transparent, simple, and portable, and it requires no changes to the containers themselves.

A typical use case could see two to eight jobs running on the same GPU, meaning that you could do up to eight times the work with the same hardware.
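The two-to-eight range corresponds to GPU fractions between 0.5 and 0.125 per job. The following is a minimal sketch of that arithmetic only, assuming every job on a GPU requests the same fraction; the function name `jobs_per_gpu` is hypothetical and this is not how the Run:AI scheduler is implemented.

```python
from fractions import Fraction


def jobs_per_gpu(gpu_fraction: str) -> int:
    """Return how many equal-sized jobs fit on one GPU.

    gpu_fraction is passed as a decimal string (for example "0.5") so it
    can be converted to an exact rational number without float rounding.
    This helper is illustrative and not part of any Run:AI API.
    """
    share = Fraction(gpu_fraction)
    if not 0 < share <= 1:
        raise ValueError("GPU fraction must be greater than 0 and at most 1")
    # Floor division: a job only counts if its full share fits on the GPU.
    return int(1 // share)


if __name__ == "__main__":
    for fraction in ("0.5", "0.25", "0.125"):
        print(f"fraction {fraction}: {jobs_per_gpu(fraction)} jobs per GPU")
```

A fraction that does not divide the GPU evenly, such as 0.3, leaves part of the GPU unused in this simplified model: three jobs fit and the remaining 0.1 is idle.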

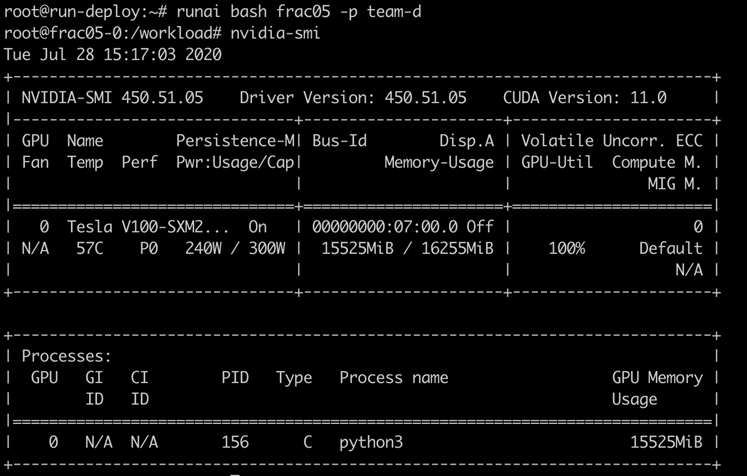

For the job frac05 belonging to project team-d in the following figure, we can see that the number of GPUs allocated was 0.50. This is further verified by the nvidia-smi command, which shows that the GPU memory available to the container was 16,255MB: half of the 32GB per V100 GPU in the DGX-1 node.
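The memory figure can be checked with simple arithmetic. The sketch below assumes that nvidia-smi reports 32,510 MiB of total memory for a 32GB V100 (a value not stated in this document) and that a fractional allocation receives a proportional share of that total. The constant and function names are hypothetical, and Run:AI's actual memory accounting may differ.

```python
# Assumption: total memory reported by nvidia-smi for one 32GB V100, in MiB.
# This value is not given in the document and is used here for illustration.
V100_TOTAL_MIB = 32510


def fractional_memory_mib(total_mib: int, gpu_fraction: float) -> int:
    """Return the memory share, in MiB, for a fractional GPU allocation.

    Assumes memory is split in direct proportion to the requested fraction.
    """
    if not 0 < gpu_fraction <= 1:
        raise ValueError("GPU fraction must be greater than 0 and at most 1")
    # Truncate to whole MiB, the unit nvidia-smi reports.
    return int(total_mib * gpu_fraction)


if __name__ == "__main__":
    # The frac05 job was allocated 0.50 GPUs.
    print(fractional_memory_mib(V100_TOTAL_MIB, 0.5))
```

Under these assumptions, an allocation of 0.50 yields 16,255, which matches the value observed inside the container.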
